0. Opportunities and Challenges of Multimodal Large Models in Personalized Medical Image Diagnosis

1. Introduction: The Evolving Landscape of AI in Medical Imaging

The integration of Artificial Intelligence (AI) into medical imaging represents a pivotal transformation in healthcare, fundamentally motivated by the need to enhance diagnostic accuracy, efficiency, and consistency across clinical practices . This revolution is particularly pronounced in radiology, a domain rich in data and signals, where AI's advanced pattern-detection capabilities can augment human expertise, thereby serving as a robust support tool for clinicians . The burgeoning volume of AI-focused research over the past decade underscores the critical importance for medical professionals, especially radiologists, to comprehensively understand AI's underlying principles and strategies for its safe and effective deployment .

Traditional unimodal AI approaches, predominantly employing convolutional neural networks (CNNs) for image analysis, have achieved substantial improvements in diagnosis by enhancing accuracy, speed, and consistency in the detection of various diseases . These CNNs, typically trained via supervised learning on radiologist-annotated datasets, process imaging data through successive layers to yield classifications with associated probabilities . While these models have demonstrated performance comparable to, and in some cases even superior to, human radiologists in narrowly defined tasks—such as identifying lung nodules or diagnosing pneumonia from chest radiographs—their inherent limitations include a constrained scope and a dependency on vast quantities of high-quality training and validation data .

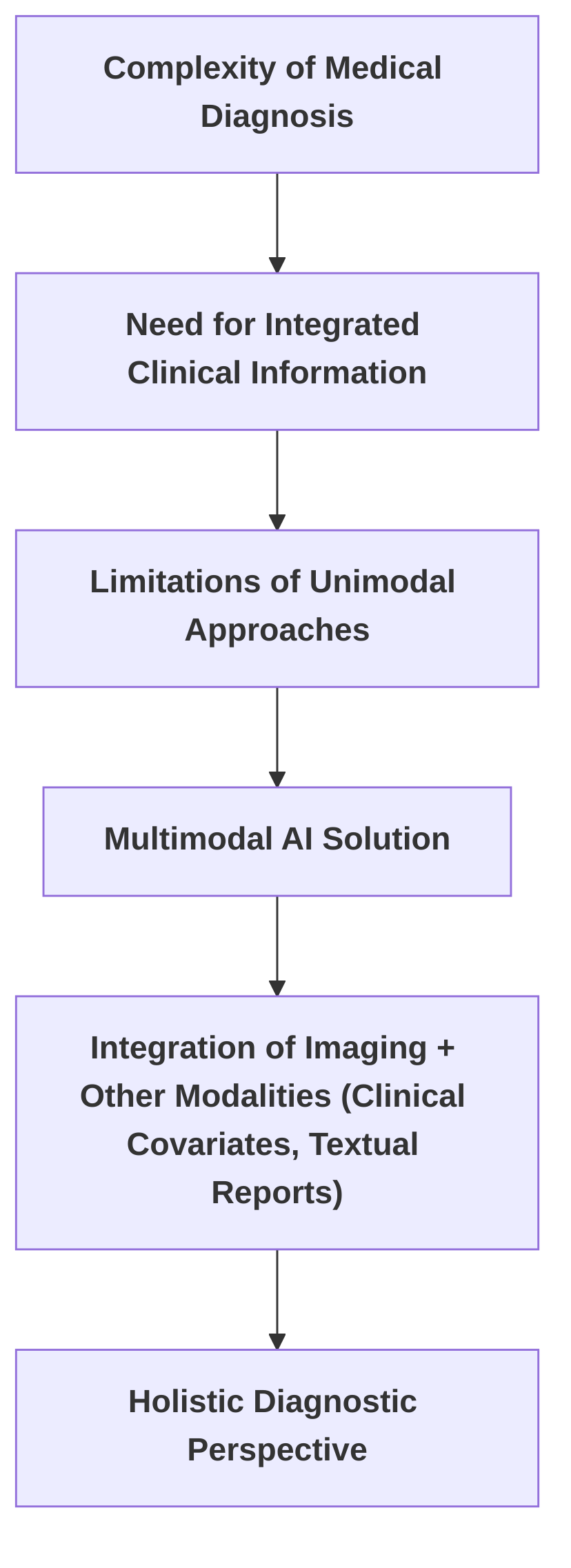

The escalating interest in multimodal AI directly addresses these limitations, acknowledging the intrinsic complexity of medical diagnoses, which frequently necessitate the integration of diverse clinical information beyond single imaging modalities . For instance, the nuanced interpretation of liver parenchymal alterations in conjunction with vascular abnormalities and secondary complications often proves challenging for conventional computer vision models, underscoring the demand for more comprehensive approaches . Multimodal AI aims to surmount these challenges by integrating imaging data with at least one additional modality, such as clinical covariates or textual reports, thereby providing a more holistic diagnostic perspective .

A comparative analysis of the broad motivations articulated in and reveals both convergent and divergent emphases. Both narrative reviews underscore the transformative potential of AI in medical practice. specifically traces the historical evolution of AI and its profound influence on radiology, highlighting the limited existing literature that effectively integrates both multimodal imaging and clinical covariates. This review's primary objective is to comprehensively examine multimodal AI in radiology by scrutinizing both imaging and clinical variables, assessing methodologies, and evaluating clinical translation to inform future research directions . In contrast, is driven by the rapid advancements in AI and Natural Language Processing (NLP), particularly the emergence of Large Language Models (LLMs) such as ChatGPT. This paper centers on how LLMs can streamline the traditionally time-intensive and error-prone manual interpretation processes in medical imaging, thereby significantly impacting healthcare quality and patient well-being . While both reviews advocate for more advanced AI applications, emphasizes the integration of diverse data types for superior diagnostic insights, whereas accentuates the potential of LLMs to enhance processing capabilities, interactivity, and data diversity in medical imaging due to their robust representational learning capabilities. Both implicitly acknowledge the limitations of existing machine learning methods, including data uniqueness, interpretability issues, and the high cost of acquiring high-quality labeled datasets .

Deep Learning (DL) represents a foundational advancement that critically underpins the progression towards multimodal AI and personalized medicine. DL has revolutionized numerous fields, including medicine, by offering powerful tools for pattern recognition and prediction, which are indispensable for the advancement of personalized medicine . The principles elucidated in —such as leveraging data-driven insights to analyze complex biological data, identify disease markers, and predict patient responses to treatments—establish the groundwork for more specialized advancements. DL's capability to process large and intricate datasets facilitates a deeper understanding and management of diseases at an individual level, thereby promoting more individualized care . This trajectory from the general impact of DL to the specific advancements of Multimodal Large Models (MLMs) in medical imaging diagnosis illustrates a natural evolution: as unimodal DL models approach their performance limits in complex diagnostic scenarios, the integration of diverse data modalities via MLMs becomes imperative for unlocking new frontiers in personalized and precision medicine. The global COVID-19 pandemic further highlighted the pressing need for rapid, accessible, and dependable diagnostic tools, demonstrating the capacity of deep learning and transfer learning with imaging data to provide automated "second readings" and assist clinicians in high-pressure environments . While promising, the expansive impact of AI in medical imaging also mandates vigilance against potential biases that could compromise patient outcomes, emphasizing the necessity for proactive identification and mitigation of AI bias .

1.1 Background and Motivation

The integration of Artificial Intelligence (AI) into medical imaging marks a transformative era in healthcare, driven by the imperative to enhance diagnostic accuracy, efficiency, and consistency . This revolution is particularly pronounced in radiology, a data-rich field where AI's pattern-detection capabilities can surpass human limitations for specific tasks, serving as a powerful support tool for clinicians . The rapid increase in AI-focused studies over the past decade underscores the critical need for medical professionals, especially radiologists, to comprehend AI's underlying principles and strategies for safe and effective implementation .

Traditional unimodal AI approaches, predominantly utilizing convolutional neural networks (CNNs) for image analysis, have significantly improved diagnosis by enhancing accuracy, speed, and consistency in detecting various diseases . CNNs, trained with supervised learning on radiologist-annotated data, process images through convolutional, pooling, and fully connected layers to output classifications with weighted probabilities . While these models have demonstrated performance comparable to, or even superior to, human radiologists in narrow tasks such as identifying lung nodules or diagnosing pneumonia from chest radiographs, their scope is often limited, requiring vast quantities of high-quality training and validation data .

The increasing interest in multimodal AI stems from the recognition of these limitations and the inherent complexity of medical diagnoses, which often involve integrating multi-dimensional clinical information beyond single imaging modalities . For instance, interpreting liver parenchymal changes alongside vascular abnormalities and secondary complications frequently confounds traditional computer vision models, necessitating a more comprehensive approach . Multimodal AI aims to overcome these challenges by incorporating imaging data with at least one other modality, such as clinical covariates or textual reports, thereby providing a more holistic view for diagnosis .

A comparison of the broad motivations presented in and reveals shared and distinct emphases. Both narrative reviews acknowledge the transformative potential of AI in medicine. specifically highlights the historical development of AI and its profound impact on radiology, emphasizing the scarcity of literature that effectively integrates both multimodal imaging and clinical covariates. The review aims to comprehensively explore multimodal AI in radiology by examining both imaging and clinical variables, assessing methodologies, and evaluating clinical translation to inform future directions . Conversely, is motivated by the rapid advancements in AI and Natural Language Processing (NLP), particularly the emergence of Large Language Models (LLMs) like ChatGPT. This paper focuses on how LLMs can streamline the traditionally time-intensive and error-prone manual interpretation processes in medical imaging, thereby substantially impacting healthcare quality and patient well-being . While both reviews advocate for more advanced AI applications, focuses on the integration of diverse data types for improved diagnostic insights, whereas emphasizes the potential of LLMs to enhance processing, interactivity, and data diversity in medical imaging due to their robust representational learning capabilities. Both implicitly acknowledge the limitations of existing machine learning methods, such as data uniqueness, interpretability issues, and the cost of acquiring high-quality labeled datasets .

Deep Learning (DL) serves as a fundamental advancement that underpins the progression towards multimodal AI and personalized medicine. DL has revolutionized numerous fields, including medicine, by providing powerful tools for pattern recognition and prediction, which are crucial for advancing personalized medicine . The principles discussed in —such as leveraging data-driven insights to analyze complex biological data, identify disease markers, and predict patient responses to treatments—lay the groundwork for more specialized advancements. DL's capacity to handle large and complex datasets enables a deeper understanding and management of diseases on a personal level, thus facilitating more individualized care . This progression from general DL impact to specific Multimodal Large Model (MLM) advancements in medical imaging diagnosis highlights a natural evolution: as unimodal DL models reach their performance ceiling in complex diagnostic scenarios, the integration of diverse data modalities via MLMs becomes essential for unlocking new horizons in personalized and precision medicine. The global COVID-19 pandemic further underscored the need for rapid, accessible, and reliable diagnostic tools, demonstrating the potential of deep learning and transfer learning with imaging data to provide automated "second readings" and assist clinicians in high-pressure scenarios . While promising, the broad impact of AI in medical imaging also necessitates vigilance against potential biases that could compromise patient outcomes, emphasizing the need for proactive identification and mitigation of AI bias .

1.2 Scope and Organization of the Survey

This survey, titled "Opportunities and Challenges of Multimodal Large Models in Personalized Medical Image Diagnosis," is structured to provide a comprehensive analysis of the evolving landscape of multimodal large models (MLLMs) within personalized medical imaging. The initial sections establish foundational concepts, including the historical progression of artificial intelligence (AI) in medical imaging and the emergence of deep learning (DL) as a transformative force . This groundwork is crucial for understanding the transition towards more complex, multimodal AI systems that integrate various data streams beyond singular imaging modalities.

Subsequent sections build upon this foundation by delving into the specifics of large language models (LLMs) and their role in medical image processing . This includes an exploration of their fundamental principles, such as the Transformer architecture and pre-training methodologies, and their advantages over previous models . The survey then progresses to examine the diverse applications of LLMs in radiology, encompassing aspects like prompt engineering and their potential to enhance transfer learning efficiency, integrate multimodal data, and improve clinical interactivity . Specific instances include the use of LLMs to augment radiomics features for classifying breast tumors, demonstrating how clinical knowledge can be integrated to improve diagnostic accuracy .

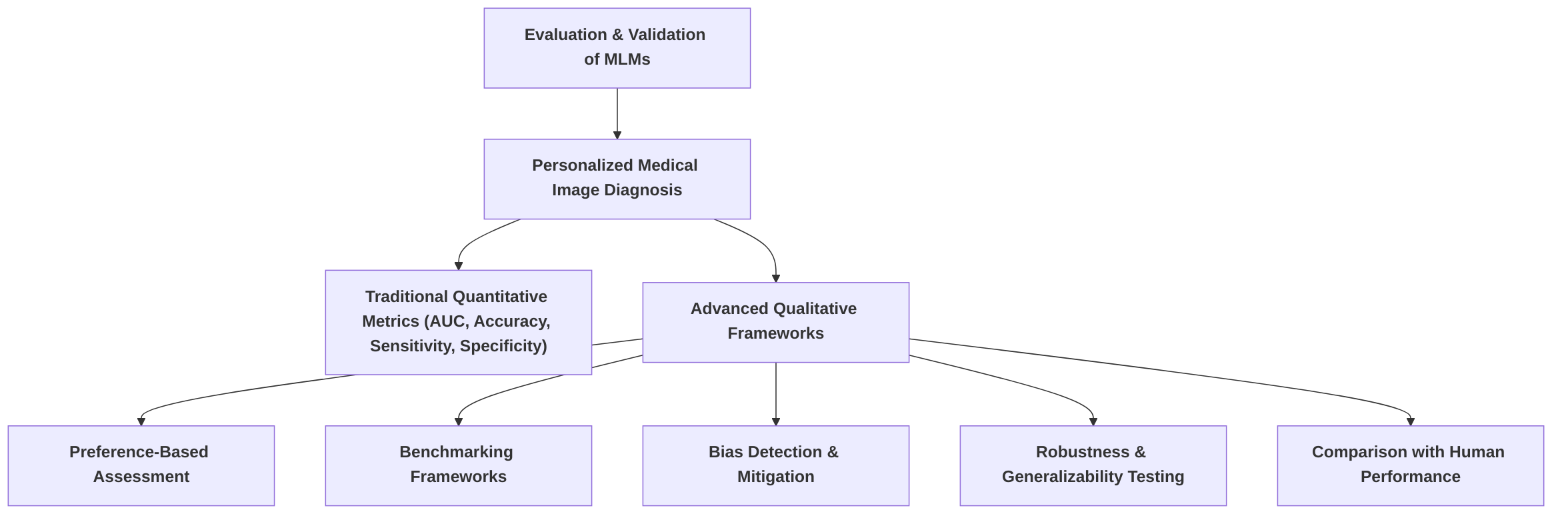

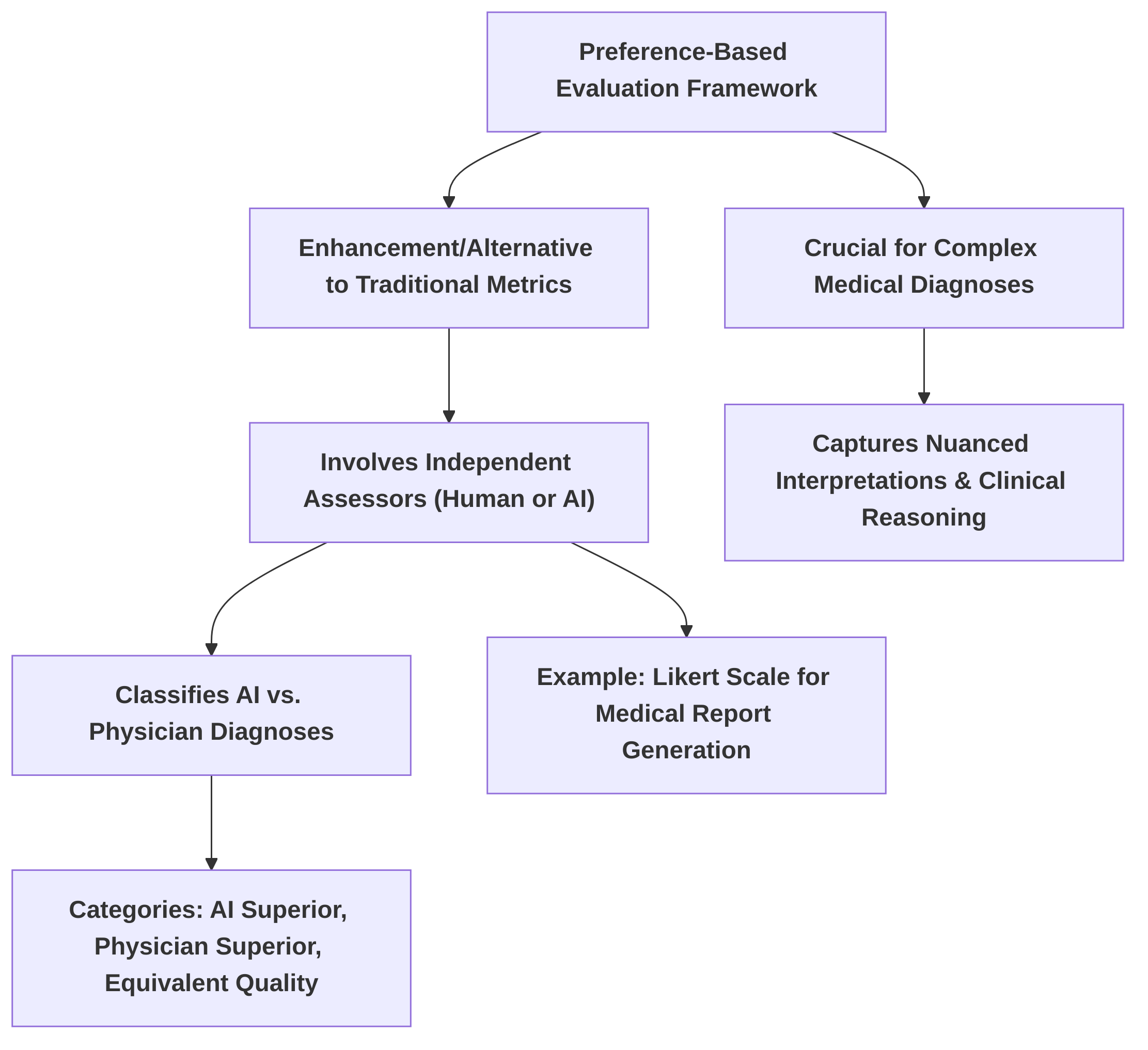

The core of the survey addresses the multifaceted opportunities and challenges presented by MLLMs in personalized medical image diagnosis. Opportunities are discussed through the lens of emerging frameworks such as graph neural networks (GNNs) and transformers, highlighting their potential for integrating imaging and clinical metadata . For instance, a parameter-efficient framework for fine-tuning MLLMs for tasks like medical visual question answering (Med-VQA) and medical report generation (MRG) showcases advancements in practical application . Furthermore, the survey covers the comprehensive evaluation frameworks for multimodal AI models, including data preprocessing, standardized model evaluation, and preference-based assessment, as demonstrated by the comparison of general-purpose and specialized vision models on abdominal CT images .

Concurrently, the survey critically examines significant challenges. These include inherent issues such as data scarcity, taxonomic inconsistencies, and biases within AI systems, which are highly pertinent to MLLMs despite their broader applicability in medical imaging . Specific challenges for LLMs in medical image processing, such as data privacy, model generalization, and effective clinician communication, are also addressed . The survey also touches upon broader ethical and regulatory considerations pertinent to the deployment of LLMs in clinical settings . By systematically exploring these opportunities and challenges, the survey aims to provide a logical and comprehensive understanding of the current state and future directions of MLLMs in personalized medical image diagnosis, informing future research and clinical translation.

2. Fundamentals of Multimodal Large Models in Medical Contexts

This section delves into the foundational aspects of Multimodal Large Models (MLMs) and Large Language Models (LLMs) within medical contexts, particularly focusing on their application in medical image diagnosis. It establishes clear definitions for both MLMs and LLMs, elucidating their distinctive capabilities and highlighting their complementary roles. The subsequent discussion systematically explores the prevalent architectural patterns and data fusion techniques observed in the literature, critically comparing and contrasting their suitability for personalization. Finally, the section showcases specific architectural innovations that advance the practical application of these models in clinical settings.

The field of medical diagnosis is undergoing a transformative shift with the advent of Multimodal Large Models (MLMs) and Large Language Models (LLMs). LLMs, primarily built upon the Transformer architecture, are distinguished by their ability to process and generate human language through extensive training on text corpora, capturing intricate statistical patterns and semantic representations . Their application in medical imaging extends to analyzing textual reports and extracting critical information, thereby augmenting image analysis with contextual data . In contrast, MLMs are broadly defined as systems designed to integrate and process diverse data types, such as medical images and clinical text, for comprehensive diagnostic tasks . These models enhance patient care by fusing imaging data with other modalities, like clinical metadata, to enable personalized and precise predictions . Their collective role in medical diagnosis involves synthesizing visual features from images with contextual information from reports to interpret complex medical scenarios effectively .

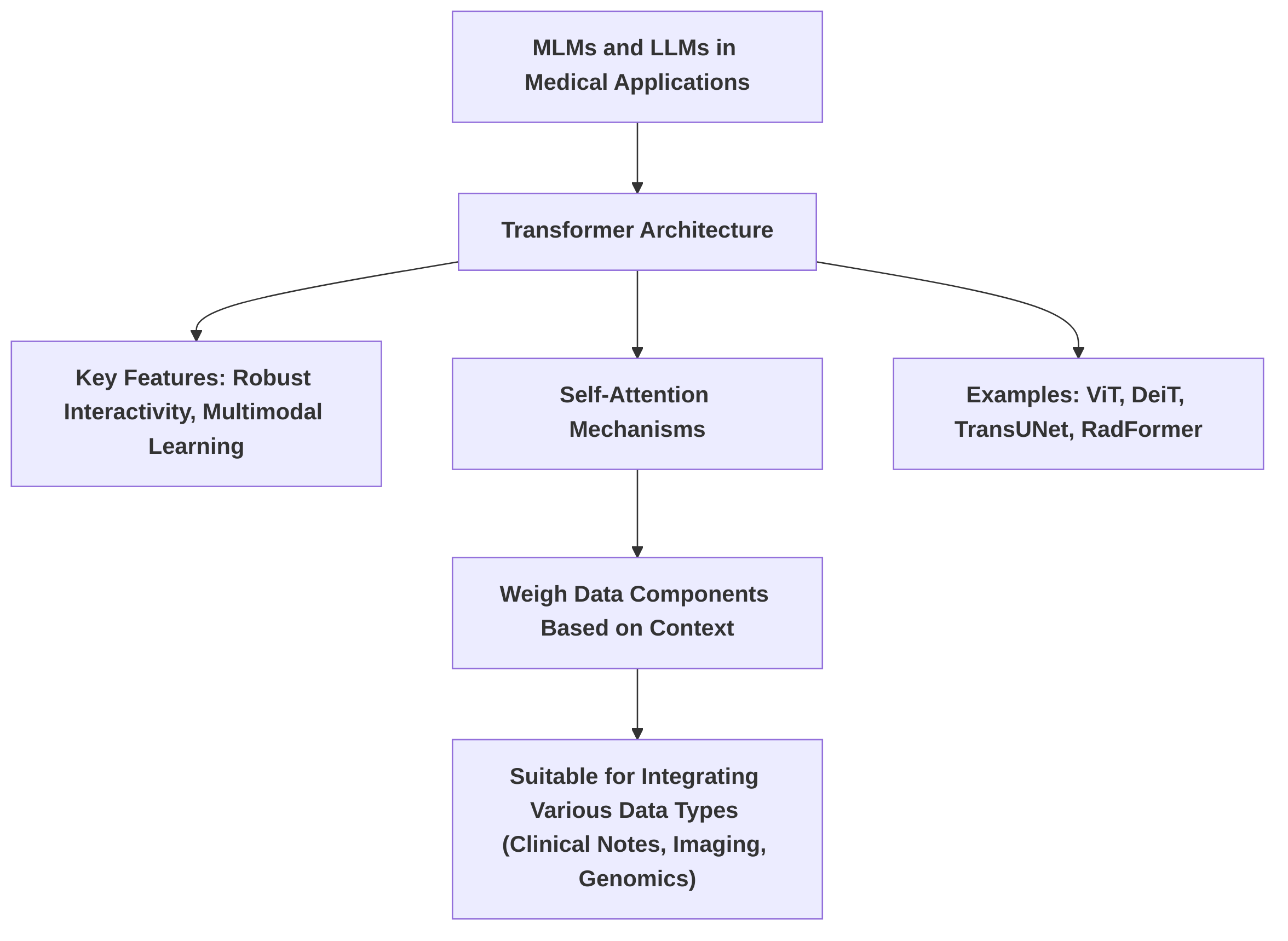

Common architectural patterns for both MLMs and LLMs in medical applications predominantly leverage the Transformer architecture, which has gained prominence in medical image processing due to its robust interactivity and multimodal learning capabilities . This architecture employs self-attention mechanisms to weigh data components based on context, making it highly suitable for integrating various data types, including clinical notes, imaging data, and genomic information . Examples include ViT, DeiT, TransUNet, and RadFormer . Data fusion techniques typically exploit these architectures to combine disparate data streams. An implicit fusion approach, for instance, involves using an LLM for text processing in conjunction with radiomic features extracted from mammograms, even if not explicitly detailed as an architectural component .

A comparison of architectural patterns and data fusion techniques in and reveals complementary approaches. emphasizes LLMs and their Transformer architecture, focusing on integrating image features with textual information through NLP techniques applied to medical reports. This represents a post-hoc fusion where textual insights enrich visual interpretations. Conversely, expands on multimodal AI, also highlighting Transformer-based models, but additionally introduces Graph Neural Networks (GNNs). GNNs excel at modeling non-Euclidean structures, explicitly representing complex relationships between modalities via graph structures, facilitating a more intrinsic fusion of data types like clinical notes, imaging, and genomic information for personalized predictions. While both Transformer and GNN approaches offer personalization capabilities through flexible attention mechanisms, the GNN approach described in appears inherently more amenable to personalization due to its capacity to explicitly model unique interdependencies within an individual patient's complex multimodal data.

Architectural innovations are further exemplified by models such as those discussed in . This work focuses on Multimodal Large Language Models (MLLMs adapted for medical multimodal problems as generative tasks. The emphasis on "Parameter-Efficient Fine-Tuning (PEFT)" signifies advancements in adapting large, pre-trained models to specialized medical imaging tasks without extensive re-training. PEFT methods, which involve introducing small, trainable parameters while keeping most pre-trained weights frozen, enable efficient adaptation and personalization to specific patient cases or medical conditions. These innovations are crucial for deploying MLMs in clinical settings where computational resources and data availability for full model training are often limited.

2.1 Defining Multimodal Large Models and Their Role in Medical Diagnosis

Multimodal Large Models (MLMs) and Large Language Models (LLMs) represent a significant evolution in artificial intelligence, particularly in the domain of medical imaging. LLMs are a subset of Natural Language Processing (NLP) models, predominantly built upon the Transformer architecture, trained on extensive text corpora to capture statistical patterns and semantic representations . Their core capability lies in comprehending, generating, and processing human language, which extends to analyzing medical reports and extracting crucial information using NLP techniques to augment image analysis . In contrast, MLMs are implicitly defined as systems capable of processing and integrating diverse data types, such as medical images and clinical text, for diagnostic tasks . Multimodal AI models enhance patient care by combining imaging data with at least one other modality, like clinical metadata, enabling personalized and precise predictions . The role of these models in medical diagnosis involves interpreting complex medical scenarios by synthesizing visual features from images with contextual information from reports .

Common architectural patterns observed in MLMs and LLMs for medical applications largely revolve around the Transformer architecture. This architecture, foundational to LLMs, is noted for its growing prominence in medical image processing due to its robust interactivity and multimodal learning capabilities . Transformer models are adept at handling sequential data and employ self-attention mechanisms that weigh data components based on context, making them suitable for integrating various data types such as clinical notes, imaging data, and genomic information . Examples of Transformer-based models applied in medical image analysis include ViT, DeiT, TransUNet, and RadFormer . Data fusion techniques, particularly in multimodal contexts, leverage these architectures to combine disparate data streams. For instance, the implicit use of an LLM for text processing combined with radiomic features extracted from mammograms exemplifies a fusion approach, even if not explicitly detailed as an architectural component .

Comparing the architectural patterns and data fusion techniques described in and reveals distinct but complementary approaches. primarily focuses on LLMs and their Transformer architecture, emphasizing their ability to integrate image features with textual information. This integration is achieved through NLP techniques applied to medical reports, thereby augmenting image analysis with clinical data for improved diagnostic accuracy. The paper highlights the versatility of LLMs in being fine-tuned for task-specific data or used as feature extractors, suggesting a post-hoc fusion where textual insights enrich visual interpretations. In contrast, presents a broader view of multimodal AI, also emphasizing Transformer-based models but additionally introducing Graph Neural Networks (GNNs). GNNs are highlighted for their capacity to model non-Euclidean structures in healthcare data, explicitly representing complex relationships between modalities through graph structures. This approach allows for a more integrated and intrinsic fusion of different data types, such as clinical notes, imaging data, and genomic information, leading to personalized and precise predictions. While both Transformer-based approaches can be amenable to personalization due to their flexible attention mechanisms, the GNN approach described in appears inherently more amenable to personalization. This is because GNNs can explicitly model the unique interdependencies and relationships within an individual patient's complex multimodal data, which is crucial for tailoring diagnoses and treatments.

Specific architectural innovations are exemplified by models like those discussed in . This paper describes Multimodal Large Language Models (MLLMs) as an evolutionary expansion of traditional LLMs, specifically adapted for medical multimodal problems as generative tasks. While the digest does not detail the specific architectural innovations, the focus on "Parameter-Efficient Fine-Tuning (PEFT)" suggests advancements in adapting large, pre-trained models to specialized medical imaging tasks without extensive re-training or modification of the entire model. This approach typically involves introducing small, trainable parameters while keeping the majority of the pre-trained weights frozen, allowing for efficient adaptation and personalization to specific patient cases or medical conditions. Such innovations are critical for deploying MLMs in clinical settings, where computational resources and data availability for full model training can be limiting.

2.2 Data Modalities and Integration Strategies

The integration of diverse data modalities is pivotal for advancing personalized medical image diagnosis, with various studies employing different data types and fusion strategies. Common medical data modalities observed across the reviewed literature include a dominant focus on medical imaging data, specifically X-ray, Ultrasound, CT scans, and mammography images . Beyond imaging, textual data, such as clinical notes, diagnostic reports, and medical records, are increasingly recognized as crucial for providing comprehensive diagnostic context . Some works also implicitly acknowledge the potential for integrating genomic data and laboratory results to further enrich diagnostic models .

Data integration strategies are broadly categorized into early, late, and hybrid fusion, each with distinct effectiveness and suitability depending on the specific application and data characteristics . Early fusion involves concatenating input data modalities before feature learning, often used when integrating imaging data with other modalities. Intermediate or joint fusion entails learning features independently from each modality and then combining them at an intermediate layer, while late fusion processes modalities independently until the final prediction stage. The choice of fusion technique is critical and depends on factors such as data source characteristics, model architecture, and the specific diagnostic application, with no universally optimal method identified .

In the context of COVID-19 detection, a study by utilizes X-ray, Ultrasound, and CT scans. The integration strategy in this paper is implicit, as each imaging modality is treated separately for classification tasks. While the paper does not explicitly detail a formal fusion strategy, the simultaneous use of these diverse imaging modalities effectively provides a richer set of diagnostic indicators, potentially mitigating the limitations of any single modality. This approach, though not a direct fusion, allows for comprehensive assessment by leveraging distinct visual cues from different imaging techniques. The pre-processing steps, including N-CLAHE for brightness and contrast standardization and data augmentation, are crucial for handling the significant variability in quality, size, and format across public datasets, thereby enhancing the diagnostic accuracy of models trained on these disparate sources .

Emerging trends indicate a growing emphasis on leveraging Large Language Models (LLMs) for multimodal data integration due to their inherent capabilities, especially through the Transformer architecture . LLMs can encode information from various modalities, including images, text, video, and audio, enabling joint processing and harnessing correlations between them. For instance, the ChatCAD system exemplifies how LLMs can enhance CAD networks by converting medical images into text content for LLM input, thereby combining natural language processing with image analysis for report generation and interactive dialogue . Similarly, in breast tumor classification, mammography images are integrated with radiomics features extracted from them, with an LLM fine-tuned to process these features, implicitly integrating them with learned clinical knowledge through prompt engineering . This illustrates a hybrid approach where image-derived quantitative data is fused with the linguistic processing power of LLMs. Another instance involves the concatenation of radiomic features with embedded textual features from diagnostic reports to form a multimodal input for classification tasks, underscoring the benefits of combining structured and unstructured data for improved diagnostic performance . The trend leans towards sophisticated integration methods that capitalize on the complementary nature of different data types, moving beyond traditional image-only analyses towards holistic patient assessments.

2.3 Mechanisms of Personalization in Multimodal Models

Personalized medicine, a core tenet of modern healthcare, seeks to tailor medical decisions and treatments to the individual patient, considering their unique characteristics, genetics, and lifestyle. Within the framework of multimodal artificial intelligence (AI), this vision is beginning to materialize through various mechanisms, though significant research gaps remain. The broader concept of deep learning opening new horizons in personalized medicine is highlighted by , which suggests that deep learning can analyze complex data to predict patient responses to treatments, leading to individualized care. However, this paper does not detail specific methods for achieving personalization within multimodal models .

Currently, direct mechanisms for personalization in multimodal models for medical image diagnosis are not extensively detailed across all reviewed literature. Many papers focus on the broader capabilities of large language models (LLMs) and multimodal AI in general medical image processing, such as integrating diverse data and enhancing clinical interactivity . For instance, while LLMs' ability to integrate patient-specific information like genetic data, medical history, and chief complaints is recognized as a pathway to more tailored diagnostics , the explicit mechanisms for personalization beyond general data integration are not thoroughly elaborated. Similarly, studies focusing on COVID-19 detection or general AI applications in diagnostic imaging prioritize broad applicability over individual patient tailoring .

One notable approach that contributes to personalization is Parameter-Efficient Fine-Tuning (PeFT) for Multimodal Large Language Models (MLLMs), as explored in . While the primary focus of this paper is on improving performance on generalized medical tasks through efficient fine-tuning, the adaptation of models to specific medical domains or datasets inherently moves towards personalization. PeFT methods, such as Low-Rank Adaptation (LoRA) or prefix-tuning, enable the adaptation of large pre-trained models to new, often smaller, datasets with minimal computational cost. This capability is crucial for personalization because it allows a foundational MLLM to be fine-tuned on data specific to a particular patient cohort, disease subtype, or even an individual patient, thereby enhancing its relevance and accuracy for that specific context. For example, by fine-tuning an MLLM on a dataset of images and clinical notes from patients with a rare disease, the model can learn to identify subtle patterns that might be missed by a general-purpose model, thus offering a more personalized diagnostic aid. This aligns with the vision of deep learning enabling more tailored diagnostic and treatment approaches, as outlined in .

Another indirect contribution to personalization can be observed in studies that enhance patient-specific representations. For instance, the enhancement of radiomics features using LLMs for classifying breast tumors indirectly contributes to personalization. Radiomics features are derived from medical images and are inherently patient-specific. By improving the classification accuracy through an enhanced feature set, the approach can lead to more personalized diagnostic outcomes, as the model's predictions are based on richer, more accurate individual patient data. The concept of "extensible learning" in this context suggests an adaptive capability, which is a step towards more personalized models. It is important to note that the findings from are not directly applicable to the personalization aspect, as their focus is on evaluating general diagnostic performance rather than tailoring diagnostics to individual patient characteristics.

Despite these advancements, significant research gaps persist in achieving deep personalization within multimodal AI for medical image diagnosis. A primary challenge lies in the current focus on general diagnostic improvements rather than explicit mechanisms for tailoring models to individual patient characteristics or incorporating comprehensive patient-reported outcomes. While approaches like PeFT offer a promising direction, their application for truly individual-level personalization requires further development.

Future research directions should focus on developing sophisticated methods for integrating longitudinal patient data, including sequential imaging studies, electronic health records (EHRs), genomic data, and patient-reported outcomes, to capture the dynamic progression of diseases and individual responses to treatments. This would allow multimodal models to not only provide a diagnosis at a single point in time but also to predict disease trajectories and recommend tailored treatments that evolve with the patient's condition. Furthermore, research is needed on creating adaptive learning frameworks that can continuously update and refine models based on new patient data, ensuring that diagnostic and treatment recommendations remain optimally personalized. Exploring federated learning or personalized federated learning paradigms could also enable patient-specific model adaptation while preserving data privacy, a crucial consideration in healthcare. Such advancements would propel multimodal AI beyond broad diagnostic tools towards truly personalized medical solutions.

3. Opportunities: Applications and Benefits in Personalized Medical Image Diagnosis

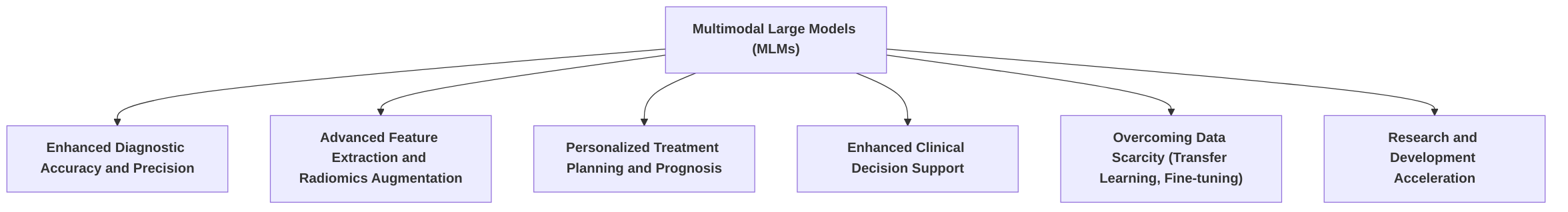

Multimodal Large Models (MLMs) represent a significant advancement in personalized medical image diagnosis, offering substantial opportunities for enhancing various facets of clinical practice and research. By integrating diverse data modalities—ranging from medical imaging (e.g., X-ray, CT, MRI, Ultrasound) to clinical metadata (e.g., patient history, laboratory results, genetic information)—MLMs enable a more comprehensive and precise understanding of a patient's condition, moving beyond the limitations of unimodal approaches .

This section systematically explores the opportunities presented by MLMs in personalized medical image diagnosis, structured into five key sub-sections. First, "Enhanced Diagnostic Accuracy and Precision" details how the integration of multimodal data leads to improved diagnostic outcomes, often surpassing unimodal models and human experts in specific tasks. It presents case studies and quantifies performance gains, highlighting the robustness achieved by compensating for individual modality limitations . Despite these advancements, a key research gap lies in demonstrating real-world clinical utility through prospective validation studies.

Second, "Advanced Feature Extraction and Radiomics Augmentation" discusses how large models transcend traditional radiomics by identifying complex patterns and relationships in medical images. It explores methods like "enhancing radiomics features via a large language model" for tasks such as breast tumor classification, analyzing commonalities in feature extraction, and identifying research gaps in feature interpretability . Future research should focus on developing more robust and clinically validated radiomics augmentation methods with enhanced interpretability.

Third, "Personalized Treatment Planning and Prognosis" examines how MLMs enable more personalized treatment strategies and accurate prognosis predictions through the integration of diverse patient information. It touches upon methodologies for risk stratification and outcome prediction, highlighting the potential of MLMs to integrate longitudinal imaging data for tracking disease progression . Research gaps exist in translating these predictive capabilities into actionable clinical recommendations, necessitating frameworks for MLM-based treatment personalization.

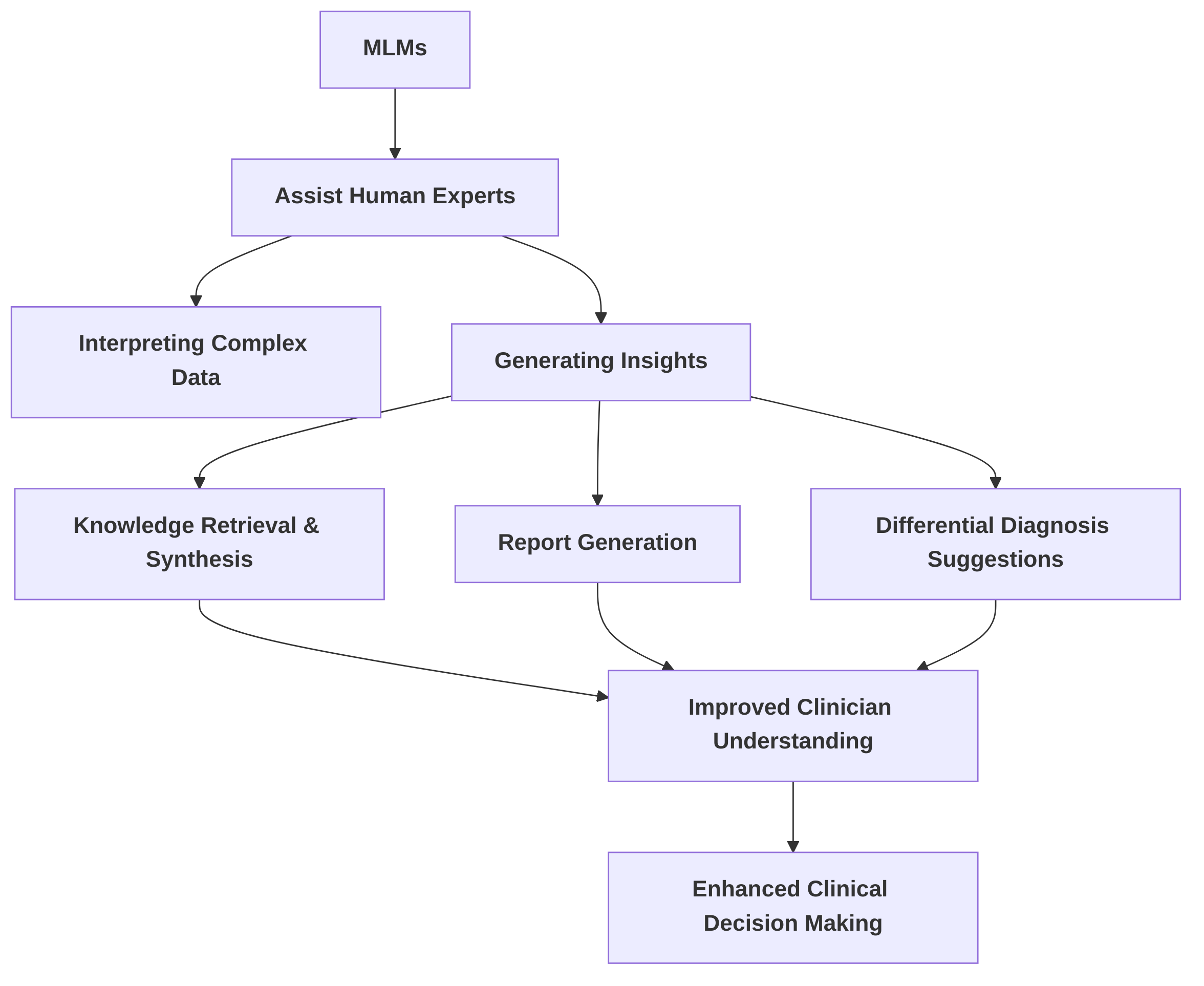

Fourth, "Enhanced Clinical Decision Support" explores the role of MLMs in assisting human experts in interpreting complex data and generating insights. It summarizes how these models facilitate knowledge retrieval, synthesis, report generation, and differential diagnosis suggestions . A significant challenge remains in seamlessly integrating these support systems into existing clinical workflows, underscoring the need for user-friendly interfaces and robust validation studies.

Finally, "

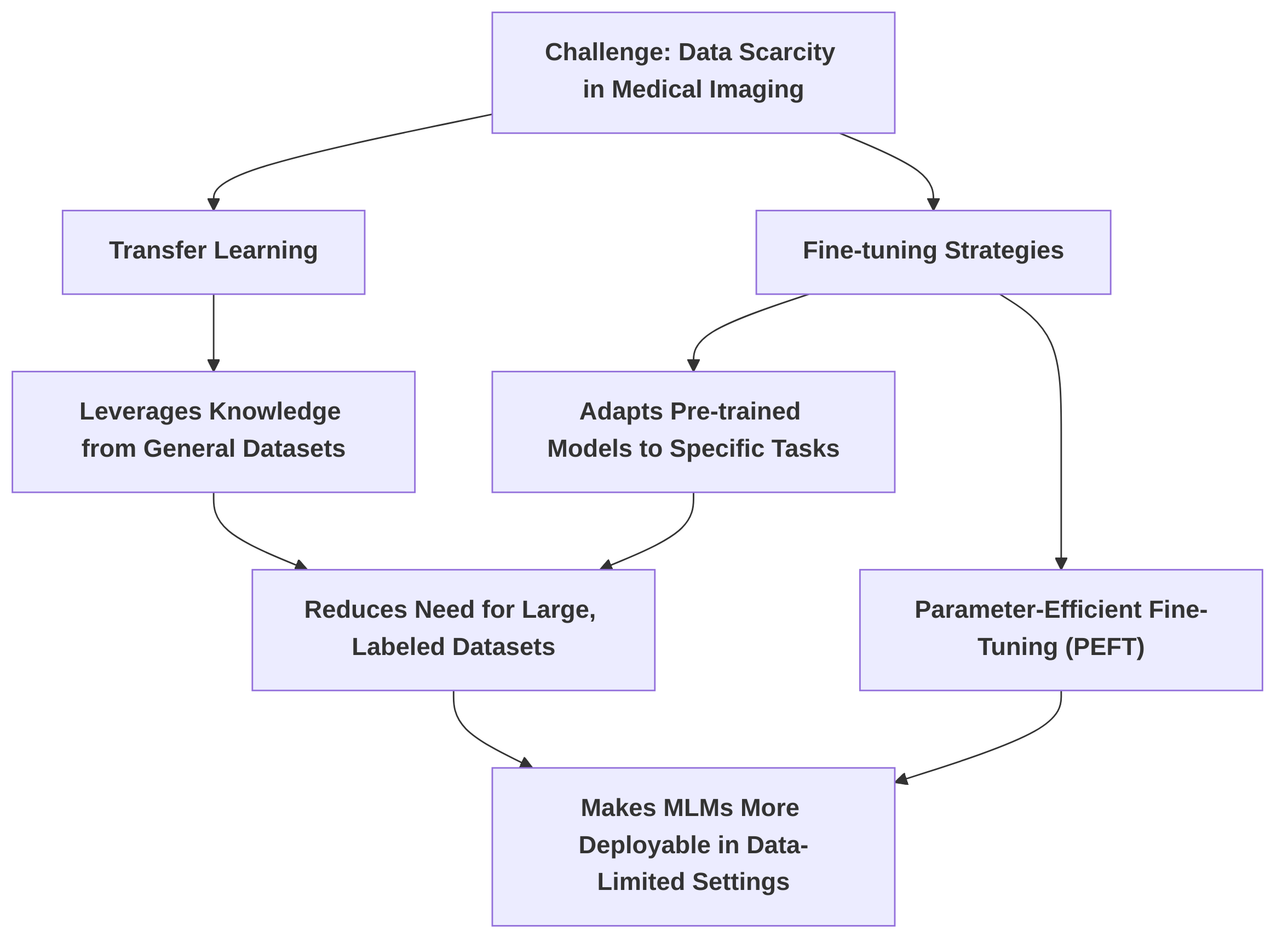

Overcoming Data Scarcity through Transfer Learning and Fine-tuning" addresses a critical challenge in medical imaging: data scarcity. This sub-section details how transfer learning and various fine-tuning strategies, including parameter-efficient methods like those proposed in , mitigate this issue, making MLMs more deployable in data-limited clinical settings . Future work should focus on optimizing fine-tuning strategies for diverse medical imaging tasks to enhance generalizability and efficiency.

Collectively, these opportunities underscore the transformative potential of MLMs in personalized medical image diagnosis. The analytical power of these models also holds promise for accelerating research and development (R&D) in medical research by identifying complex patterns and correlations across various data types. The success of general-purpose MLMs like Llama 3.2-90B in medical diagnostics suggests a promising direction towards leveraging larger, more versatile foundation models, potentially with further architectural innovations . However, translating these advancements into widespread clinical utility requires addressing persistent research gaps related to real-world validation, interpretability, seamless integration into workflows, and optimization of fine-tuning strategies for diverse medical tasks and specific R&D areas like drug discovery or clinical trial optimization.

3.1 Enhanced Diagnostic Accuracy and Precision

Multimodal Large Models (MLMs) have demonstrated a significant enhancement in diagnostic accuracy and precision by integrating diverse data modalities, often surpassing the capabilities of unimodal approaches and human experts in specific tasks . The fusion of medical images with clinical metadata, such as patient history, laboratory results, and genetic information, has been identified as a critical factor in achieving these performance gains .

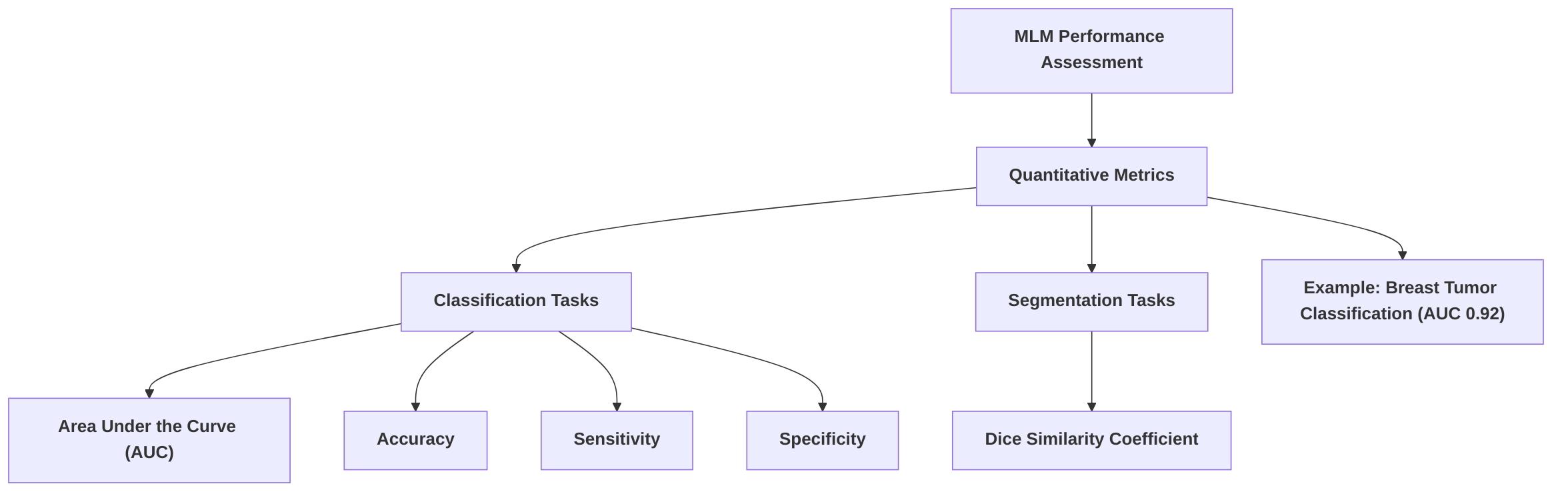

Case studies illustrate the impact of multimodal integration. For instance, in the classification of benign and malignant breast tumors, an LLM-enhanced radiomics approach, combining textual information from diagnostic reports with mammography features, achieved a superior AUC of 0.92, outperforming radiomics-only (AUC of 0.86) and text-only (AUC of 0.79) methods . Similarly, transformer-based multimodal models have consistently outperformed unimodal approaches in various prediction tasks, including the diagnosis of Alzheimer's disease with high AUCs and improved predictions for heart failure and respiratory diseases . The integration of imaging and clinical data enhances diagnostic precision by providing a more comprehensive basis for clinical decision-making, allowing models to identify subtle patterns and correlations that might be missed by single-modality analysis .

Beyond specific disease classification, general-purpose MLMs have demonstrated remarkable superiority over human diagnoses. Evaluations have shown Llama 3.2-90B outperforming human performance in 85.27% of medical imaging tasks, with GPT-4 and GPT-4o exhibiting similar superiority rates of 83.08% and 81.72%, respectively . This advantage is particularly evident in complex scenarios such as abdominal CT interpretations, where MLMs can concurrently evaluate multiple anatomical structures and track disease progression, offering a more comprehensive diagnosis than human experts . This improvement is attributed to the models' ability to process vast quantities of data and discern intricate patterns that may be imperceptible to human diagnosticians.

Multimodal models also enhance diagnostic robustness by compensating for the limitations inherent in individual modalities . For instance, in COVID-19 detection, multimodal imaging data, incorporating X-ray, Ultrasound, and CT scans, yielded robust classification results, with Ultrasound notably achieving 100% sensitivity and positive predictive value for COVID-19 versus pneumonia classification . This complementarity ensures that even if one modality provides ambiguous or limited information, other modalities can provide corroborating or supplementary data, leading to a more reliable diagnosis. The common benefits cited for multimodal integration across studies include improved sensitivity and specificity, leading to more accurate disease detection and characterization .

Despite these significant advancements, research gaps persist in demonstrating the real-world clinical utility of these models. While many studies highlight improved accuracy, there remains a need for explicit statistical comparisons with baseline unimodal models and human diagnoses in prospective clinical settings to rigorously quantify the added value of multimodality . Future research should prioritize prospective validation studies with diverse patient populations and a wider spectrum of medical conditions to ensure the generalizability and robust application of multimodal AI models in varied clinical environments. This will also involve comparing performance across different image acquisition protocols and demographic groups to confirm reliability in real-world scenarios.

3.1.1 Superiority over Human Diagnosis in Specific Tasks

Multimodal large models (MLMs) have demonstrated significant advancements in diagnostic accuracy, occasionally surpassing human diagnostic capabilities in specific medical imaging tasks. A comprehensive evaluation of general-purpose MLMs revealed remarkable performance improvements over human diagnoses . For instance, Llama 3.2-90B exhibited superiority in 85.27% of evaluated cases, with only 1.39% rated as equivalent to human performance. Similarly, GPT-4 and GPT-4o demonstrated AI superiority in 83.08% and 81.72% of cases, respectively . These models' advantage is particularly pronounced in complex scenarios, such as abdominal CT interpretations, where they can simultaneously evaluate multiple anatomical structures, track disease progression, and integrate diverse clinical information for a more comprehensive diagnosis than human experts .

Beyond general-purpose MLMs, more specialized deep learning models, such as Convolutional Neural Networks (CNNs), have also shown superior diagnostic performance in narrowly defined tasks. For example, CNNs have outperformed radiologists in the diagnosis of pneumonia from chest radiographs. Their performance in lung nodule identification and coronary artery calcium quantification has been found to be comparable to human experts, indicating AI's potential to excel in specific, well-defined diagnostic areas .

The primary reasons for this observed superiority stem from AI's inherent capabilities in processing vast quantities of data and identifying subtle patterns that may be imperceptible or easily overlooked by human diagnosticians. The ability of MLMs to integrate diverse data modalities—such as medical images and clinical notes—enables a more holistic and nuanced diagnostic assessment. This data processing capacity allows AI to learn complex relationships and indicators of disease that might elude human perception, especially when dealing with high-dimensional data.

Despite these promising results, several research gaps remain regarding the generalizability of these findings. While AI models show significant superiority in specific tasks and complex scenarios, their performance across a wider spectrum of medical conditions and diverse patient populations requires further investigation. Many current studies, such as those focusing on COVID-19 detection or enhancing radiomics features, often do not include direct comparisons against human diagnoses, instead focusing on comparisons with other AI models or conventional methods . Furthermore, while LLMs like ChatGPT have demonstrated high proficiency in tasks like radiology board-style examinations or assessing the methodological quality of research, these do not directly translate to superior clinical diagnostic performance in specific medical imaging tasks . Therefore, future research needs to focus on rigorously testing the generalizability of multimodal AI models across varied pathologies, image acquisition protocols, and demographic groups to ensure their robust and reliable application in diverse clinical settings.

3.2 Advanced Feature Extraction and Radiomics Augmentation

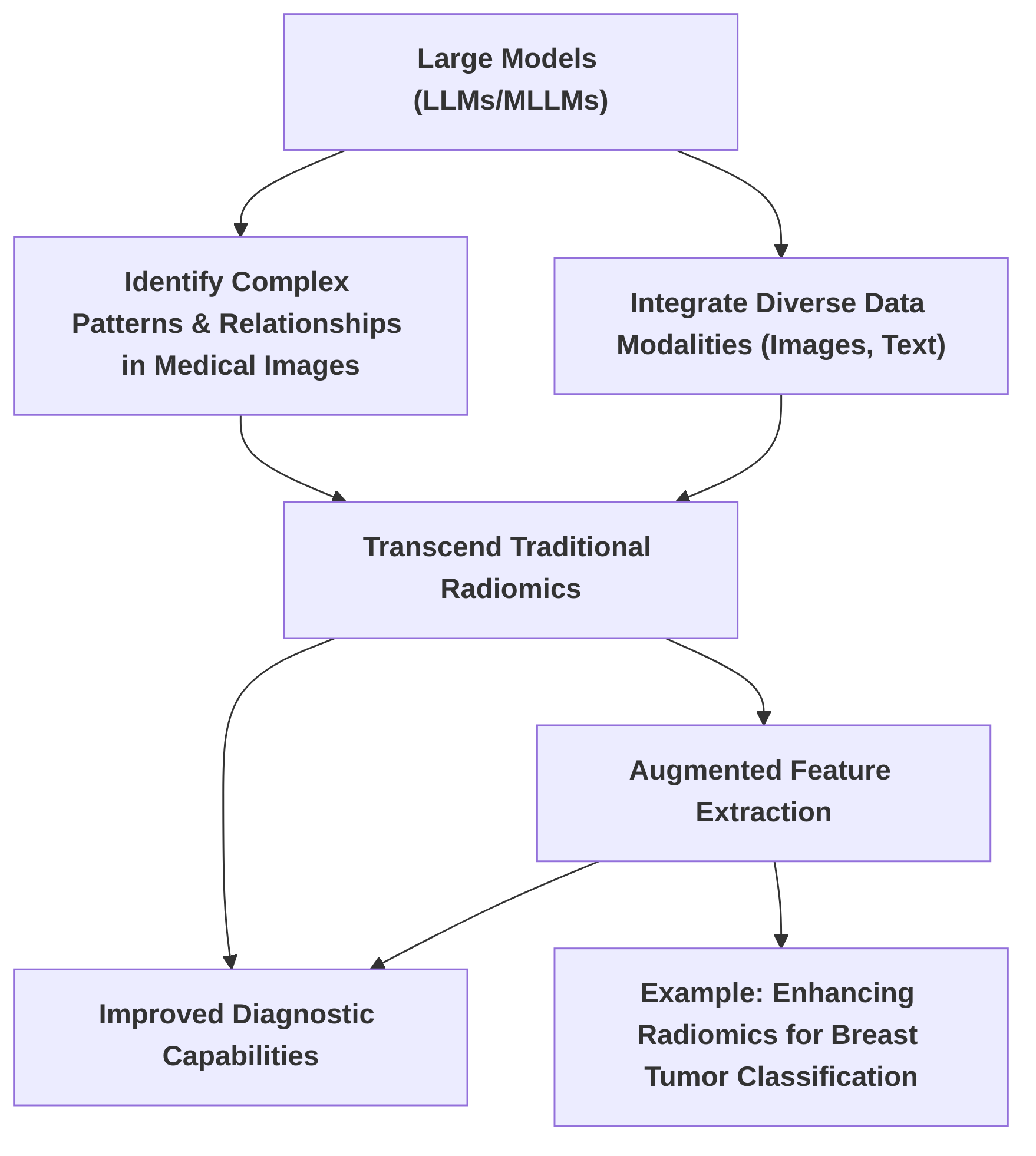

Large models, particularly Large Language Models (LLMs) and Multimodal Large Models (MLLMs), are poised to significantly advance beyond traditional radiomics by identifying complex patterns and relationships in medical images, thereby enhancing diagnostic capabilities. While traditional radiomics extracts quantitative features from medical images, large models offer the potential for more sophisticated analysis through their ability to process and integrate diverse data modalities, including textual diagnostic reports and imaging data .

A notable approach in this domain is "enhancing radiomics features via a large language model," as demonstrated in the context of classifying benign and malignant breast tumors in mammography . In this methodology, an LLM processes textual diagnostic reports to generate embeddings, which are then fused with traditional radiomic features extracted from mammography images. This integration enriches the discriminative power of the features, leading to improved classification performance . The core principle involves leveraging the LLM's understanding of clinical knowledge, obtained through prompt engineering and fine-tuning, to augment selected radiomics features, enabling extensible learning across datasets by explicitly linking feature names with their values .

Commonalities in feature extraction techniques across large models include the utilization of advanced architectures like Transformers. The Transformer architecture, with its self-attention mechanisms, excels at deconstructing images into local features and apprehending interrelations among them. This capability enhances image recognition and analysis accuracy by effectively processing and integrating diverse data, including implicitly aiding in extracting richer features from medical data by combining imaging and clinical metadata . While many studies on deep learning in medical imaging, such as those focusing on general diagnostic impact or CNN classification capabilities, acknowledge feature extraction as a foundational step, they often do not explicitly detail how large models augment traditional radiomics beyond standard approaches . However, the inherent ability of general-purpose large multimodal models to process and integrate image and text data inherently enhances feature extraction and interpretation, offering a more comprehensive understanding of pathological findings compared to specialized vision models alone .

Despite these advancements, significant research gaps remain, particularly concerning the interpretability of these sophisticatedly extracted features. While large models demonstrate improved diagnostic performance, the mechanisms by which they synthesize information from various modalities and augment radiomics are often opaque. Future research should focus on developing more robust and clinically validated radiomics augmentation methods that prioritize interpretability. This includes creating transparent models that can explicitly articulate the clinical significance of the features they extract and the rationale behind their diagnostic decisions. Furthermore, validating these augmented radiomics methods across diverse patient populations and imaging modalities is crucial to ensure their generalizability and clinical utility, paving the way for their seamless integration into personalized medical image diagnosis workflows.

3.3 Personalized Treatment Planning and Prognosis

Multimodal Large Models (MLMs) hold significant promise for revolutionizing personalized medical care by integrating diverse patient information to enable more tailored treatment strategies and accurate prognosis predictions. The core strength of these models lies in their ability to synthesize information from various modalities, such as medical images, clinical records, genetic data, and patient histories, thereby providing a holistic view of a patient's condition. This integrated understanding is crucial for moving beyond population-level treatment guidelines towards individualized interventions.

While several papers acknowledge the potential of AI in predictive analytics for prognosis and treatment planning, specific methodologies for risk stratification and outcome prediction using multimodal large models are not extensively detailed across the reviewed literature. For instance, some studies broadly state that deep learning can facilitate more personalized diagnostic and treatment approaches and predict patient responses to treatments . Similarly, the integration of imaging and clinical data by multimodal AI models is suggested to lead to more personalized and precise predictions that can inform patient care, with examples like multimodal transformers used for survival prediction in intensive care or disease diagnosis by unifying information across modalities . Large Language Models (LLMs) are also noted for their potential to predict disease progression and support clinical decision-making , and to provide tailored medical counsel and treatment regimens by mimicking clinician diagnostic and therapeutic processes through multimodal data integration and contextual memory . However, the specific architectures or algorithmic frameworks that enable these granular predictions, such as detailed methods for risk stratification or the generation of precise, tailored therapeutic strategies based on integrated multimodal data, remain underexplored in the current digests. An isolated example mentions the improved prediction of overall survival in glioblastoma patients from MRI data using CNNs, but this is a limited illustration of AI's broader prognostic capabilities and does not specifically involve multimodal large models or comprehensive personalized treatment planning .

A promising future direction for MLMs in personalized medicine involves leveraging their capabilities to integrate longitudinal imaging data, such as multiple scans obtained over time, in conjunction with extensive clinical records. This approach is supported by the models' inherent ability to 'track disease progression', allowing for a more dynamic and accurate assessment of patient trajectories. By capturing the evolution of a disease and the patient's response to interventions, MLMs could provide highly refined prognostic insights and inform adaptive treatment planning. For instance, MLMs could analyze successive tumor volume changes from MRI scans, correlate them with specific drug regimens and genetic markers, and predict optimal future treatment modifications.

Despite these promising capabilities, significant research gaps exist in translating the predictive power of MLMs into actionable clinical recommendations. Current discussions often highlight the potential without detailing concrete frameworks or pipelines for how these sophisticated predictions can be seamlessly integrated into clinical workflows and directly inform physician decision-making for treatment personalization. Future work should therefore focus on developing robust methodologies that bridge this gap. This includes creating interpretable MLM outputs that clinicians can trust, designing user interfaces that facilitate the application of MLM-derived insights, and conducting rigorous clinical validation trials to demonstrate the efficacy and safety of MLM-guided personalized treatment strategies. Furthermore, research should explore ethical considerations and regulatory pathways for deploying such advanced predictive models in real-world clinical settings.

3.4 Enhanced Clinical Decision Support

Multimodal Large Models (MLMs) are increasingly recognized for their potential in enhancing clinical decision support by assisting human experts in interpreting complex medical data and generating actionable insights. Large Language Models (LLMs), a component of MLMs, can improve diagnostic accuracy, predict disease progression, and analyze extensive medical datasets, thus offering suggestions for potential diagnoses, differential diagnoses, and treatment options . This capability extends to integrating with existing radiology systems, providing preliminary assessments, and answering radiology-related queries . While some studies focus on the general diagnostic capabilities of AI, such as Convolutional Neural Networks (CNNs) providing probability outputs for conditions like pneumonia or pleural effusion, these implicitly function as a form of decision support . Similarly, advancements in Deep Learning (DL) have improved the accuracy, speed, and consistency of medical imaging diagnosis, offering more reliable information to clinicians .

LLMs also serve as powerful tools for knowledge retrieval and synthesis, crucial for clinicians to stay updated with the latest research and best practices . Their robust interactivity enables natural language dialogues, allowing doctors to query specific imaging data for closer examination . Furthermore, LLMs can provide natural language explanations and reasoning for diagnostic results, enhancing transparency and clinician understanding of the model's decision-making process . Systems like ChatCAD exemplify this, facilitating dialogue about disease, symptoms, diagnosis, and treatment, thereby empowering informed treatment choices . The MedSAM model is also noted for its potential in real-time explanations and addressing patient inquiries, further solidifying its role as an asset for clinical decision support .

Common functionalities of MLMs in decision support include report generation and differential diagnosis suggestions. Multimodal AI models, by integrating disparate forms of medical data such as clinical notes, imaging, and genomic information, can provide personalized predictions and recommendations . This integration allows them to assist in interpreting medical images and suggesting differential diagnoses . For instance, fine-tuned MLMs have demonstrated potential in Med-VQA (Medical Visual Question Answering) and Medical Report Generation (MRG), which indicates their utility as advanced clinical decision support tools by aiding in image interpretation and report creation . The improved classification accuracy for breast tumors using a multimodal approach, though not explicitly framed as a comprehensive decision support system, provides more robust diagnostic information derived from mammography, serving as a component within such a system . Similarly, models providing classification results for COVID-19 detection can serve as a "second pair of eyes" for medical professionals, assisting in diagnosis and criticality assessment . Some general-purpose multimodal models have even demonstrated diagnostic assessments that surpass human performance by integrating complex information from CT images and reports, thereby assisting clinicians in making more accurate diagnoses .

Despite these advancements, research gaps persist in the seamless integration of these support systems into existing clinical workflows. Many current studies do not explicitly detail how MLMs function as comprehensive clinical decision support systems, specifically concerning generating detailed reports or suggesting differential diagnoses based on complex medical images . While LLMs are being evaluated for their ability to assess research quality , their direct application in clinical diagnosis decision support still requires more specific demonstration. Future work should therefore focus on developing user-friendly interfaces that facilitate intuitive interaction with these complex models and rigorously validating their impact on clinical outcomes through large-scale, prospective studies.

3.5 Overcoming Data Scarcity through Transfer Learning and Fine-tuning

Data scarcity remains a pervasive challenge in medical imaging, where acquiring large, expertly annotated datasets is often resource-intensive and time-consuming. Transfer learning and fine-tuning emerge as critical strategies to mitigate this limitation, enabling the adaptation of pre-trained models to specialized medical domains with limited labeled data .

Transfer learning leverages knowledge gained from training on vast, generalized datasets (e.g., ImageNet) and applies it to specific medical tasks. For instance, in the context of COVID-19 detection from multimodal imaging data, pre-trained Convolutional Neural Networks (CNNs) with ImageNet weights, such as VGG19, demonstrated reasonable performance despite limited COVID-19 datasets. The effectiveness of VGG19, attributed to its better trainability on scarce datasets compared to more complex models, underscores the utility of transfer learning in challenging, data-constrained scenarios . Similarly, pre-training and subsequent fine-tuning strategies employed by Large Language Models (LLMs) facilitate transfer learning, which not only expedites model training but also substantially reduces annotation costs, a crucial factor when expert annotation is scarce . Studies have indicated that combining transfer learning with self-training can achieve performance comparable to models trained on significantly larger quantities of labeled data . The development of MedSAM, a fine-tuned version of the Segment Anything Model (SAM) specifically for medical image segmentation, exemplifies the potential of fine-tuning LLMs in medical imaging, demonstrating improved performance over the default SAM through a simple fine-tuning method .

While full fine-tuning, which updates all model parameters, can yield high performance, it demands substantial computational resources and large datasets. In contrast, parameter-efficient fine-tuning (PeFT) methods offer a compelling alternative. For example, the "parameter efficient framework for fine-tuning MLLMs" directly addresses the challenge of adapting large, pre-trained models to specialized medical domains with limited labeled data. This framework's emphasis on efficiency translates to better resource utilization, making model deployment feasible with less data. One such PeFT technique, Low-Rank Adaptation (LoRA), has been utilized to fine-tune LLMs for tasks such as classifying benign and malignant breast tumors in mammography. This approach effectively adapts pre-trained LLMs to specific tasks by training significantly fewer parameters, thereby enabling robust performance in data-limited scenarios . The ability of these models to reuse common features between training and unseen datasets, facilitated by explicit linking of feature names and values, further highlights their extensible learning capabilities, allowing adaptation to new datasets without exhaustive retraining .

The practical implications of these techniques for deploying Multimodal Large Models (MLMs) in clinical settings with varying data availability are significant. By reducing the reliance on massive, domain-specific datasets, transfer learning and PeFT democratize the application of advanced AI in healthcare. This allows for faster deployment of diagnostic tools in areas where data collection is challenging or patient populations are small. For instance, the ability of UniverSeg to achieve task generalization without additional training by learning task-agnostic models further enhances the utility of these approaches .

Despite these advancements, several research gaps remain in the optimization of fine-tuning strategies for diverse medical imaging tasks. A key area for future research involves developing more generalized and efficient fine-tuning methods that can adapt to a wider array of medical imaging modalities and diagnostic objectives without extensive re-engineering. This includes investigating adaptive fine-tuning approaches that dynamically adjust parameters based on the specific characteristics and volume of the target medical dataset. Further research is also needed to systematically compare the trade-offs between various PeFT methods (e.g., LoRA, prompt tuning, adapter-based methods) across different medical imaging tasks and model architectures, to establish best practices and guidelines for optimal deployment in real-world clinical scenarios.

3.6 Research and Development Acceleration

The analytical power of multimodal large models (MLMs) presents a significant opportunity to accelerate research and development (R&D) in personalized medical imaging and broader medical research by identifying complex patterns and correlations across diverse data types. Deep learning's capacity to analyze intricate datasets can enhance the understanding of diseases and potentially expedite discovery processes . Specific applications include improving diagnostic accuracy through the integration of imaging features with textual information, as demonstrated by the use of Large Language Models (LLMs) in classifying breast tumors . Such integration represents a foundational step towards developing more potent tools for feature extraction and interpretation, thereby accelerating the development of AI models for diagnosis .

MLMs can also streamline various research tasks. LLMs, for instance, facilitate the identification of high-quality research papers, detection of subtle correlations within data, and generation of critical insights . They can automate routine tasks such as text generation, summarization, and correction, leading to substantial time savings in research workflows. In radiology, these models can assist in the development of machine learning models and support code debugging for medical image analysis . Furthermore, LLMs improve transfer learning efficiency, enable better integration of multimodal data, and enhance clinical interactivity, contributing to cost-efficiency in healthcare . The development of models like MedSAM and UniverSeg, which aim to create universal tools for segmenting various medical objects, signifies a thrust towards accelerating research in image processing and advancing medical artificial general intelligence .

The success of general-purpose MLMs, such as Llama 3.2-90B, in comprehensive evaluations of multimodal AI models for medical imaging diagnosis , points towards a future where larger, more versatile foundation models are leveraged for medical diagnostics. This direction is further supported by the introduction of efficient fine-tuning methods for MLMs, which make these powerful models more accessible and adaptable for specific medical imaging research questions, including Visual Question Answering (VQA) and report generation . The development of an efficient evaluation framework for multimodal AI models further accelerates research by enabling rapid and systematic benchmarking, thereby identifying promising avenues for future development . Additionally, cutting-edge approaches like transformers and Graph Neural Networks (GNNs) can integrate diverse data types—including clinical notes, imaging, and genomics—to enhance patient care through personalized predictions, thereby accelerating research by facilitating more sophisticated analyses .

Despite these advancements, research gaps persist in the direct application of MLMs to specific R&D areas like drug discovery and clinical trial optimization. While some studies broadly acknowledge deep learning's potential to accelerate discovery , they often lack specific examples of how MLMs accelerate personalized medical imaging R&D, such as identifying novel biomarkers or streamlining clinical trial participant selection . Current literature largely focuses on diagnostic applications and image processing improvements rather than the direct impact on broader R&D initiatives . For instance, while LLMs can enhance the reliability of published research through quality assessment , this is an indirect contribution to R&D acceleration. Future research should focus on developing explicit frameworks and methodologies for leveraging MLMs to identify new disease insights, accelerate drug discovery pipelines by predicting molecular interactions or drug efficacy, and optimize clinical trial design through more precise patient stratification and outcome prediction. This requires bridging the gap between current diagnostic applications and the broader R&D landscape, potentially through architectural innovations that enhance the interpretability and predictive power of MLMs in these complex domains.

4. Challenges: Limitations and Obstacles in Implementation

The successful deployment of multimodal large models (MLMs) in personalized medical image diagnosis is hindered by several significant challenges, encompassing data availability and quality, model complexity and interpretability, inherent biases and ethical considerations, substantial computational resource requirements, and complex regulatory and clinical integration hurdles. This section delves into these limitations, highlighting current obstacles and identifying critical research gaps and future directions to foster the practical and equitable adoption of MLMs in healthcare.

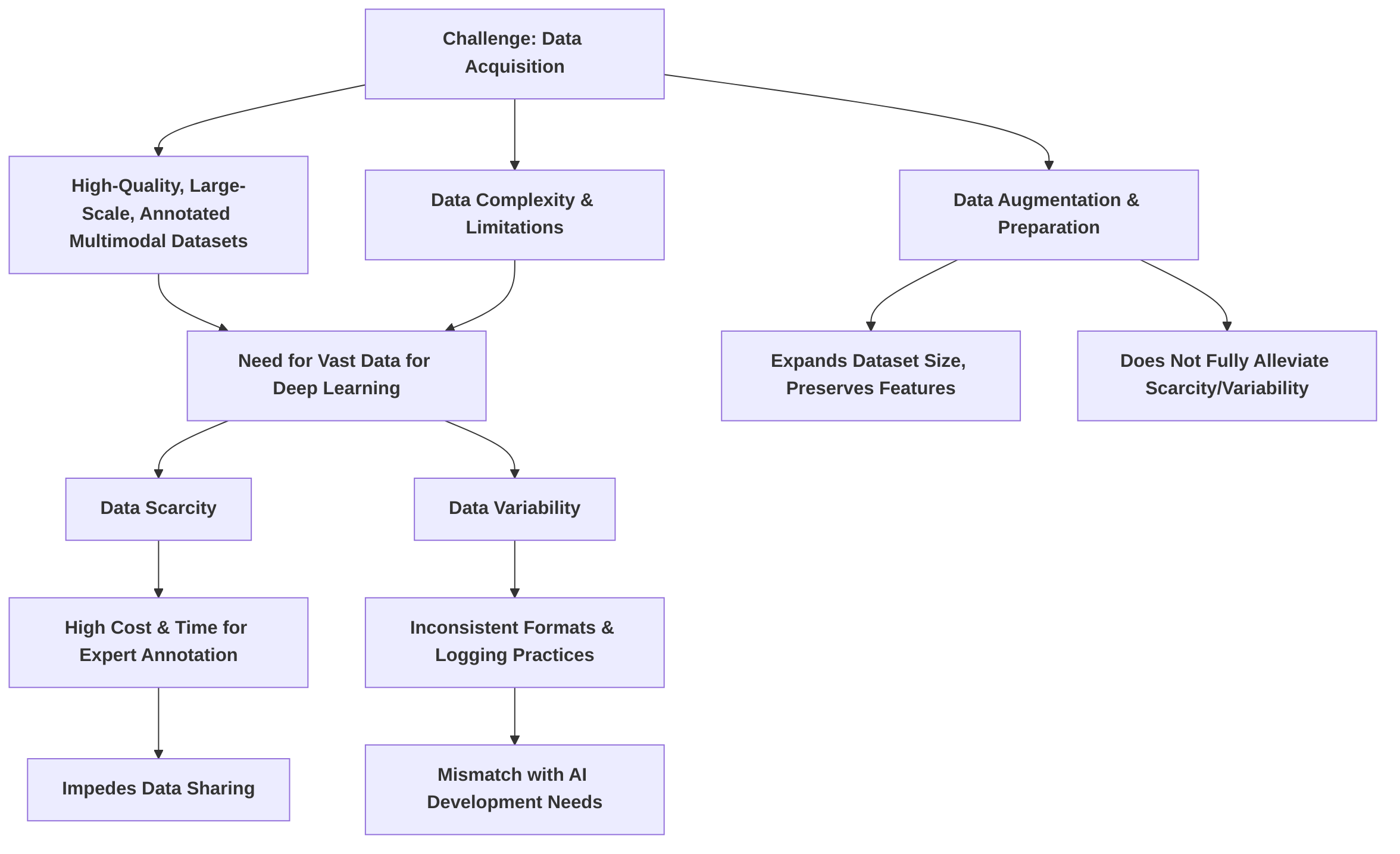

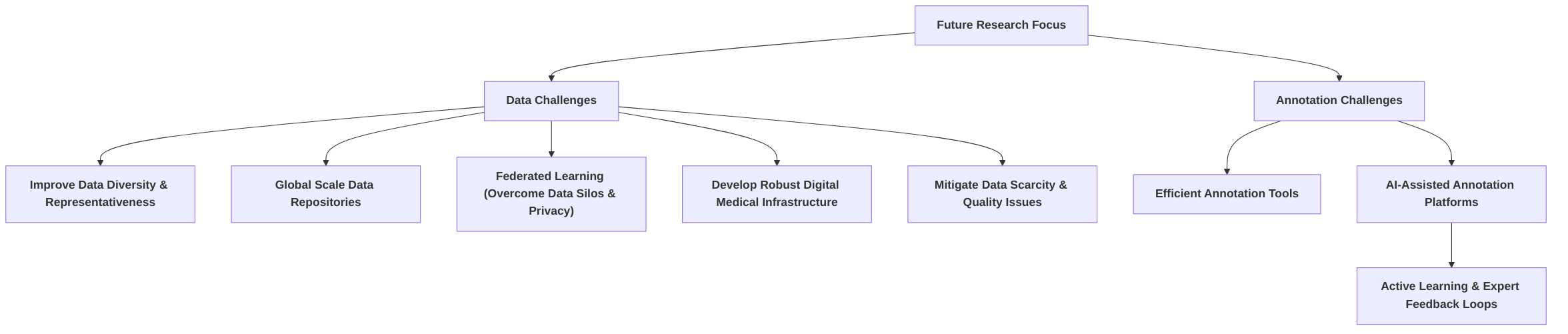

A foundational challenge is the acquisition of high-quality, large-scale, and meticulously annotated multimodal datasets. Medical data is inherently complex and often limited in quantity, contrasting sharply with the vast datasets typically required for training deep learning models . The process of data augmentation and preparation, while crucial for expanding dataset sizes and preserving diagnostic features, as demonstrated by the ability to augment 500 cases to 3,000 , does not fully alleviate the issues of underlying data scarcity and variability . Furthermore, technical difficulties arise in fusing heterogeneous data from disparate modalities and sources, due to inconsistent storage formats and varying logging practices across institutions, leading to a mismatch between current healthcare data management and AI development needs . Data scarcity is perpetuated by the high cost and time associated with expert annotation, compounded by inter-reader variability and equipment differences, leading to significant impediments in data sharing . Future research must focus on developing scalable annotation pipelines, advanced data augmentation techniques, and leveraging unsupervised or self-supervised learning to reduce reliance on extensive manual annotations.

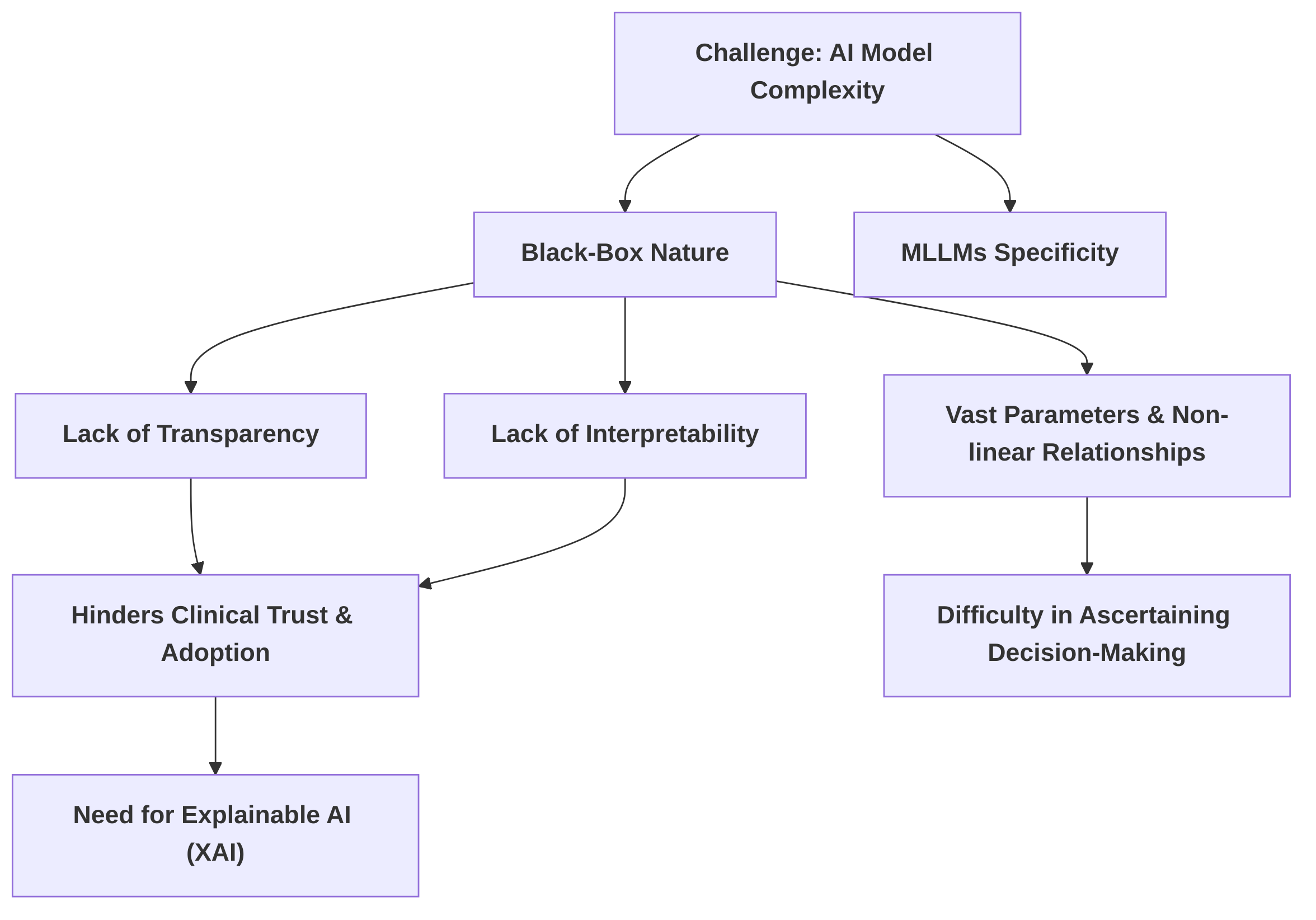

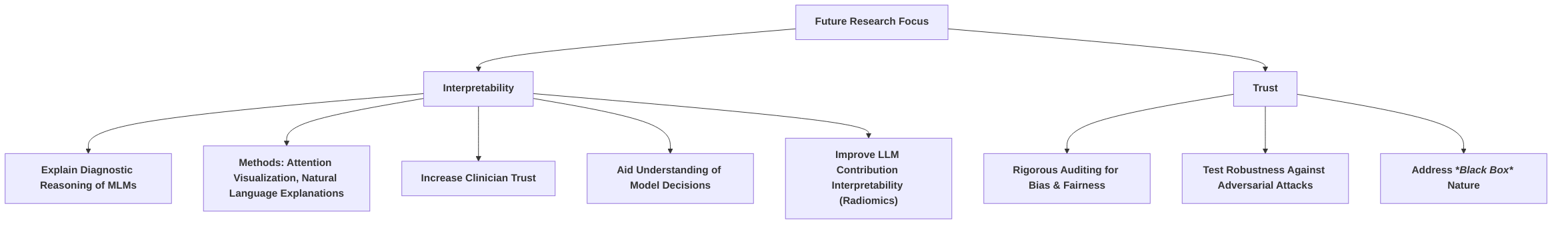

Another critical challenge pertains to the inherent complexity and "black-box" nature of contemporary AI models, particularly MLLMs, which impedes their transparency and interpretability . This lack of interpretability, where the intricate decision-making processes remain opaque, directly hinders clinical trust and adoption . The problem stems from the vast number of parameters and intricate non-linear relationships within these models , where increased predictive power often comes at the expense of interpretability . The critical need for Explainable AI (XAI) in medical diagnosis is widely recognized, with interpretability highlighted as a significant research gap for LLMs in clinical applications given their impact on patient safety . Future research should focus on robust, clinically relevant XAI methods for multimodal medical data, integrating insights from cognitive psychology and human-computer interaction to bridge the gap between complex AI decisions and human clinical reasoning.

Furthermore, the pervasive issue of bias in AI medical imaging systems carries significant implications for personalized diagnosis, potentially leading to inequitable healthcare outcomes. Sources of bias are multifaceted, originating from study design, datasets, modeling, and deployment phases, encompassing issues such as demographic imbalance, variations in image acquisition, and annotation bias . Homogeneous training data, often biased towards specific demographics or geographic regions, risks generating biased decisions and reduced generalizability . Despite the growing recognition of these issues, many studies in the field, such as , often overlook bias, fairness, and broader ethical considerations, representing a significant research gap for MLMs. The ethical landscape extends to patient privacy, model accountability, and equitable access to care, necessitating stringent data governance and transparent systems for risk management . Future research must prioritize the development of robust bias auditing frameworks and fairness-aware training algorithms, alongside standardized methods for detecting and mitigating bias in multimodal medical data.

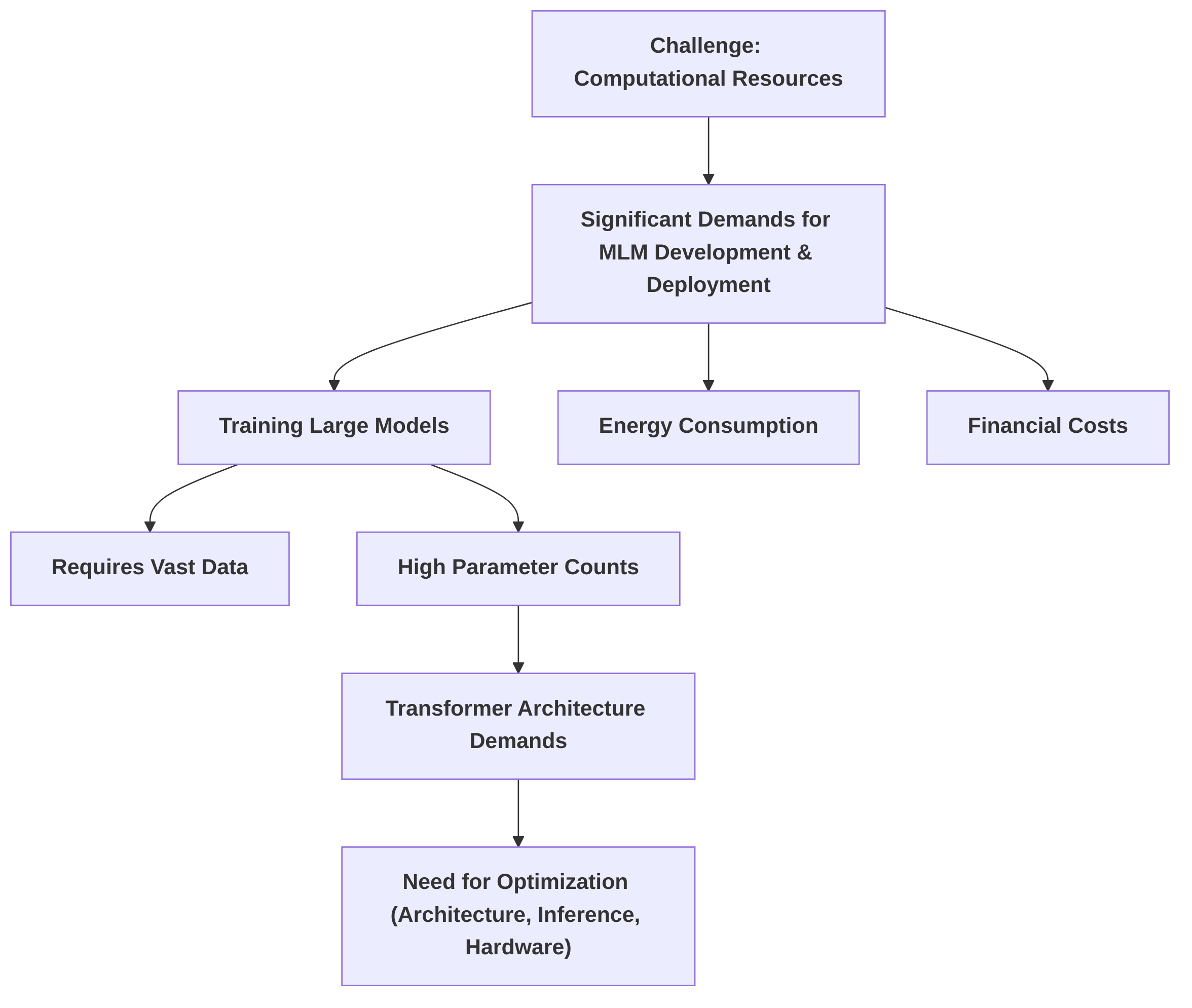

The development and deployment of MLMs also demand significant computational resources . The energy consumption and financial costs associated with training large models can be substantial . To mitigate these challenges, parameter-efficient fine-tuning (PEFT) techniques have emerged as a promising solution, significantly reducing computational costs compared to full model fine-tuning . Techniques like LoRA and the broader application of transfer learning contribute to more economical model development and deployment. However, a comprehensive comparison of trade-offs across different PEFT methods remains an area for further investigation. Research gaps exist in optimizing computational efficiency for resource-constrained environments, necessitating the development of more efficient model architectures and advanced distributed training strategies.

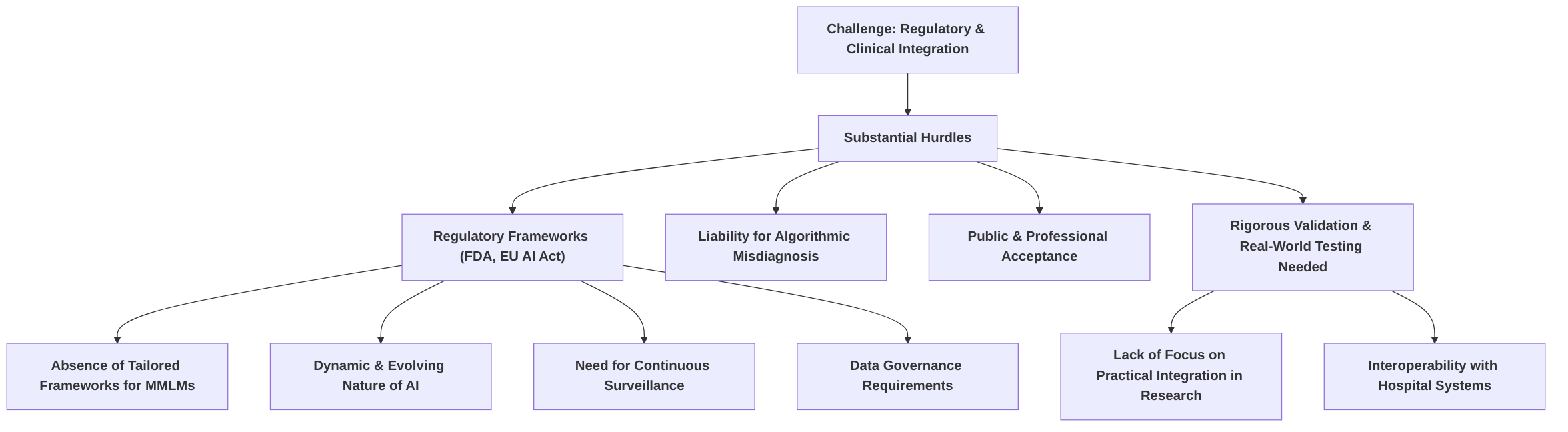

Finally, the regulatory and clinical integration of MMLMs faces substantial hurdles. While regulatory bodies like the FDA have approved some AI-based medical devices , fundamental questions persist regarding liability for algorithmic misdiagnosis and public acceptance. The absence of comprehensive and adaptable regulatory frameworks specifically for the dynamic nature of MMLMs complicates approval processes, demanding continuous surveillance and strict data governance for high-risk systems . Rigorous validation and real-world testing are critical for ensuring safe and effective clinical adoption , yet many current research efforts prioritize technical performance over practical integration or regulatory considerations . Future research must address these gaps by establishing clear regulatory pathways, developing standardized validation protocols, exploring regulatory sandboxes for iterative testing, and focusing on user-friendly interfaces and seamless integration into existing hospital information systems to ensure MLMs complement human expertise .

4.1 Data Availability, Quality, and Annotation

A significant hurdle in the development of multimodal large models for personalized medical image diagnosis is the acquisition of high-quality, large-scale, and well-annotated multimodal datasets. The inherent complexity of medical data, combined with the stringent requirements for AI model training, exacerbates this challenge. Deep learning algorithms, particularly those with a high number of parameters, necessitate vast amounts of data for effective training, often in the order of millions of samples, which contrasts sharply with medical datasets typically numbering in the hundreds to tens of thousands .

The complexities of data augmentation and preparation are critical for effective model training. While techniques such as de-identification, anomaly handling (e.g., image artifacts, text inconsistencies), and controlled spatial, intensity, and text augmentations can expand dataset sizes and preserve diagnostic features, as demonstrated by the expansion of an initial 500 cases to 3,000 in one study , these methods do not fully address the underlying challenges of acquiring diverse, high-quality multimodal medical data. For instance, in the context of COVID-19 detection, publicly available datasets were noted to be small and of highly variable quality, necessitating minimal data curation to avoid non-expert bias and the application of preprocessing pipelines like N-CLAHE to mitigate issues related to brightness, contrast, and noise .

Technical difficulties in fusing data from different modalities and sources further compound the problem. The heterogeneity and variable quality of multimodal medical datasets pose substantial challenges, leading to a mismatch between existing healthcare data storage practices and the specific requirements for AI development . Data is frequently stored in formats unsuitable for AI research, such as scanned PDFs, and logging methods vary considerably among physicians. This inconsistency makes effective data curation arduous and increases the risk of models being overtrained on limited, "AI-friendly" datasets, which can introduce database bias where models learn from specific settings, time periods, and patient populations, potentially leading to biased decisions in different clinical environments .

Data scarcity persists due to several factors. High-quality annotated datasets are a significant investment and a crucial resource . The need for expert consensus in annotation is paramount, yet inter-reader variability can introduce annotation bias stemming from subjective human labeling. Moreover, reference standard bias can affect label accuracy and reliability, while preprocessing techniques might inadvertently emphasize certain features, leading to further bias . The high cost and time involved in expert annotation, coupled with inherent differences in equipment between hospitals, make managing large-scale hospital imaging data particularly challenging. The relatively low adoption rate of Picture Archiving and Communication Systems (PACS), around 50-60%, indicates significant impediments to effective data sharing across institutions, further exacerbating data scarcity and contributing to the high cost of storing and operating hospital data .

Research gaps are evident in developing scalable and efficient data annotation pipelines. Current practices are often labor-intensive and expensive, necessitating innovative solutions. Future research should focus on advanced data augmentation techniques that are more sophisticated than simple transformations, ensuring the generated data maintains clinical relevance and diversity. Furthermore, a critical area for future investigation is the exploration of unsupervised or self-supervised learning methods. These approaches hold promise for significantly reducing reliance on extensive, manually annotated datasets by leveraging the vast amounts of unlabeled medical data available, thereby mitigating the current data scarcity and annotation challenges. This necessitates an industry-wide shift in how medical data is collected, stored, and managed to align with the demands of AI development .

4.2 Model Complexity, Interpretability, and Explainability

The inherent complexity of contemporary artificial intelligence (AI) models, particularly multimodal large models (MLLMs), presents a significant challenge to their clinical adoption: a lack of transparency and interpretability . This "black-box" nature, where the internal decision-making processes are opaque, directly hinders trust and inhibits integration into clinical workflows . The problem stems from the fundamental architecture of these models, characterized by vast numbers of parameters and intricate non-linear relationships, making it difficult to ascertain the precise features a neural network utilizes for classification or to explain how parameters attain their trained values . While simpler models, such as VGG16/19, may exhibit greater trainability and consistency with limited datasets, more complex architectures often sacrifice interpretability for enhanced predictive power .

The critical need for Explainable Artificial Intelligence (XAI) in medical diagnosis is increasingly recognized. Several studies highlight interpretability as a paramount requirement for LLMs in clinical applications, given their direct impact on patient safety . Despite this, the opacity of these models remains a significant research gap . The inherent complexity of LLMs, as demonstrated by their use in tasks like enhancing radiomics features for tumor classification, does not inherently come with detailed explanations of their diagnostic outputs .

The underlying reasons for this "black-box" nature are multi-faceted. The backpropagation mechanism, a cornerstone of deep learning, optimizes model parameters without providing explicit insight into the causal relationships between input features and output predictions . Furthermore, the sheer scale of MLLMs, encompassing billions of parameters, creates an intricate web of interdependencies that defy human comprehension. This complexity can also make bias detection cumbersome, as the internal workings are not readily accessible for scrutiny . The potential for AI outputs to be "confidently wrong" underscores the critical need for true interpretability to avoid misleading clinicians .

Research efforts in XAI aim to make AI more transparent. Proposed techniques to improve interpretability include attention and gradient visualization, adversarial testing, and natural language explanations . These methods are crucial for detecting weaknesses and providing more precise results. However, challenges persist in developing robust and clinically relevant XAI methods specifically for multimodal medical data. Future research should focus on integrating techniques from cognitive psychology and human-computer interaction to enhance the explainability and trust in these models. This interdisciplinary approach could lead to more intuitive and understandable explanations, bridging the gap between complex AI decisions and human clinical reasoning, thereby fostering greater clinical acceptance and improved patient outcomes.

4.3 Bias, Fairness, and Ethical Considerations in Personalized Diagnosis

The pervasive issue of bias in artificial intelligence (AI) medical imaging systems poses significant implications for personalized diagnosis, potentially leading to inequitable healthcare outcomes. Addressing these biases necessitates a comprehensive understanding of their fundamental issues, detection, avoidance, and mitigation strategies . The broader ethical landscape surrounding the deployment of these models in healthcare further complicates their integration . It is noteworthy that current research, such as that presented in , often overlooks bias, fairness, and ethical considerations, highlighting a critical research gap for multimodal large models (MLMs).

Bias in AI medical imaging systems stems from various sources within multimodal medical data, impacting fairness and equitable access to care. Key sources include demographic bias (e.g., gender, age, ethnicity), representation bias, sampling bias, aggregation bias, omitted variable bias, measurement bias, and propagation bias . For instance, reliance on specific, often curated, datasets like MIMIC can lead to models that are overfitted to particular settings, time periods, and patient populations, risking biased decisions and reduced generalizability . Such homogenous training data can cause AI algorithms to unequally weigh certain diagnoses based on socioeconomic status, race, or gender . Furthermore, the origin of training data, often predominantly from Western countries and in English, can lead to reduced representation of other regions and societal components, introducing novel sources of bias, especially in Large Language Models (LLMs) . The lack of diversity within development teams can further exacerbate these biases .