0. Creative and Ethical Dilemmas of Generative AI in Narrative Game Design: A Review

1. Introduction: The Evolving Landscape of Generative AI in Game Design

The rapid advancement of generative artificial intelligence (GenAI) is reshaping numerous creative industries, from visual arts to literature and music. Within this landscape, game design stands out as particularly fertile ground for AI, promising new levels of innovation and efficiency. This review systematically analyzes the implications of GenAI for narrative game design, exploring the core question: "Enhancing Creativity or Constraining Innovation?"

In this review, "narrative game design" refers to the process of crafting the interactive storylines, character arcs, world-building elements, and dialogue systems that together form the player's immersive experience. This encompasses dynamic and adaptive narratives, where player choices significantly influence the progression and outcome of the story, as well as believable and emotionally resonant non-player characters (NPCs) whose behaviors and backstories evolve in response to player interaction. The scope thus extends beyond static, pre-programmed narratives to real-time adaptation and personalized experiences.

The increasing integration of AI into game development marks a shift from manual coding and pre-scripted mechanics to data-driven approaches. Early applications of AI in games focused on foundational elements such as NPC behavior and control, as well as basic Procedural Content Generation (PCG) to reduce development costs and offer novel experiences. PCG, first employed in the 1980s in games like Rogue, has seen its potential dramatically expanded by generative AI since the mid-2010s.

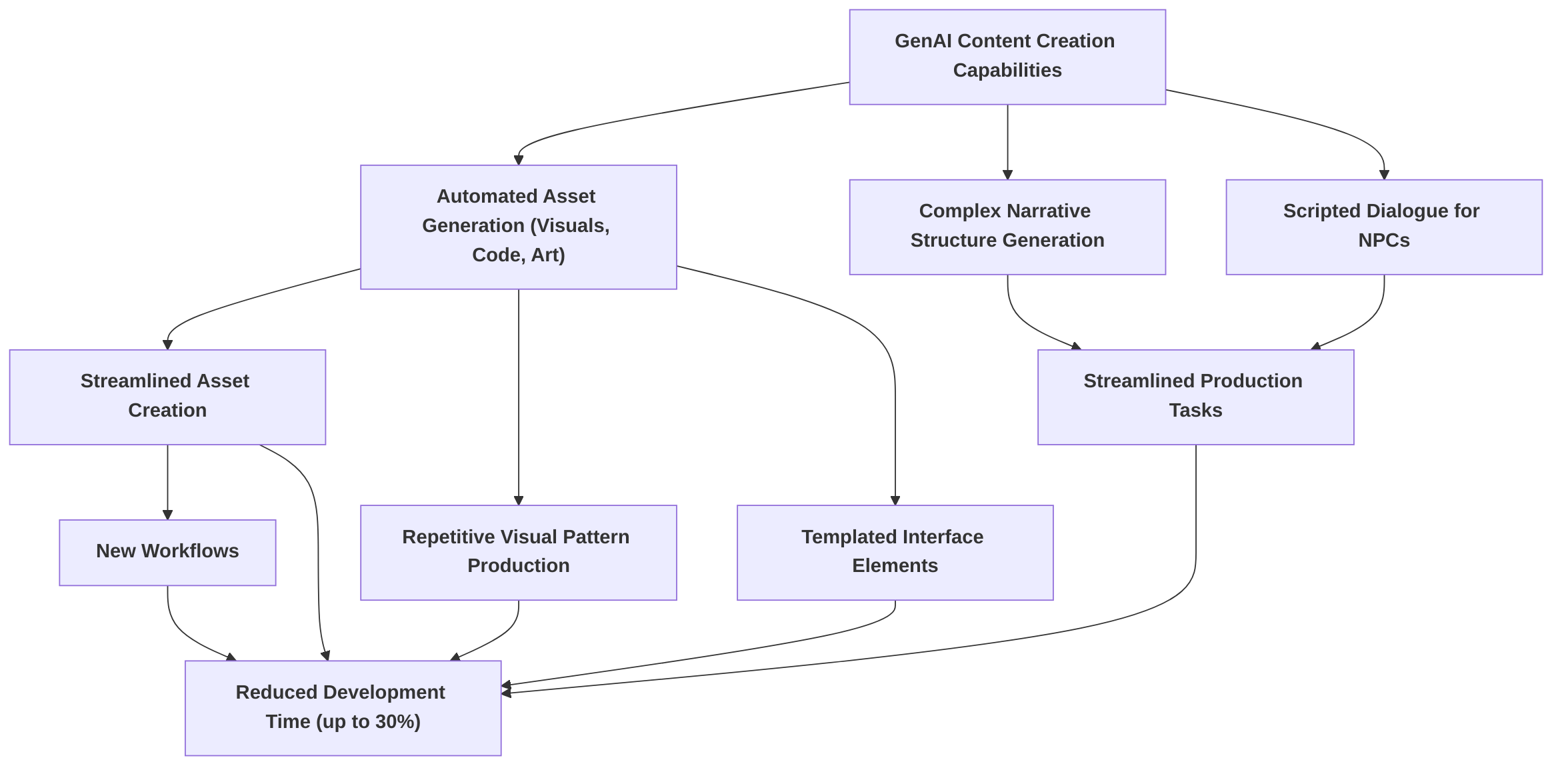

The transformative potential of GenAI in game development is evidenced by its capacity to automate many aspects of content creation, ranging from game assets, code, and art to complex narrative structures and scripted dialogue for NPCs. For instance, AI tools can streamline asset creation, production tasks involving repetitive visual patterns, and templated interface elements, enabling new workflows and potentially reducing development time by up to 30%. This automation accelerates ideation and prototyping and contributes to reduced development costs.

Beyond efficiency, GenAI fosters dynamic, player-driven experiences that alter game dynamics and player engagement. AI-driven engines enable real-time content creation, offering "choose your own adventure" formats with numerous variations, where stories and environments adapt dynamically to player choices and playstyles. This leads to increased engagement and replayability, as each playthrough can be unique and personalized, and it can strengthen emotional connections with characters by adjusting their behaviors based on player interactions. For example, in Detroit: Become Human, AI reportedly assists in crafting complex character arcs that shift based on player decisions. The market for generative AI in gaming is projected to grow substantially, indicating its importance to the industry's future. Separately, 70% of players favor games that respond to their skill levels.

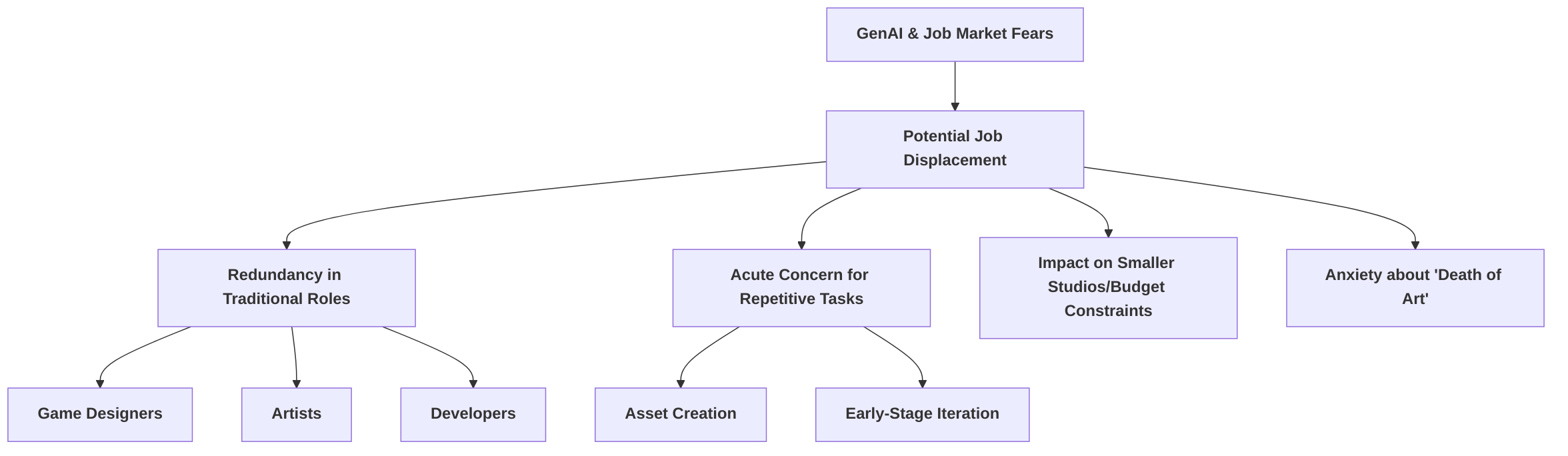

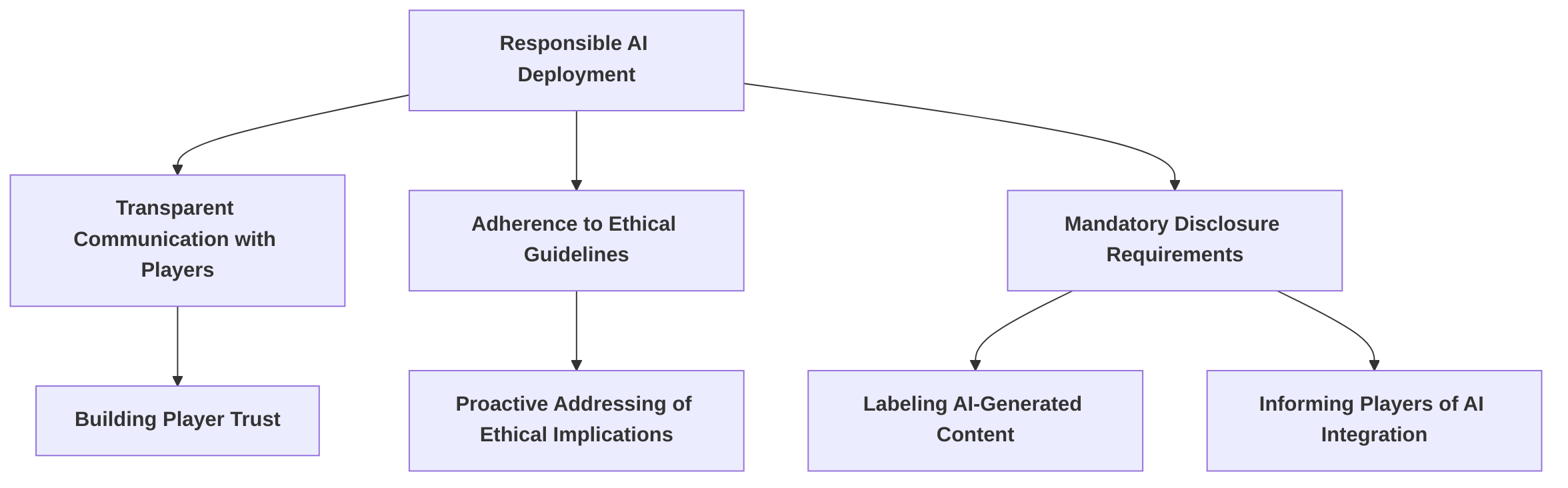

Despite these promising advancements, the integration of GenAI is not without challenges. The shift towards AI-generated content raises significant ethical and creative concerns, including intellectual property theft, potential job displacement for human creators, and a perceived loss of creative control. A notable 84% of indie game developers report moderate to serious concerns regarding these issues. Ethical dilemmas also extend to data privacy and the potential for bias in AI-generated content, with 60% of developers expressing concerns about bias. Transparency, ownership, and the challenges of artificially induced emotions present further areas for ethical consideration. A significant technical challenge is the scarcity of the large volumes of domain-specific training data that game content generation requires, which hinders the advancement of PCG research.

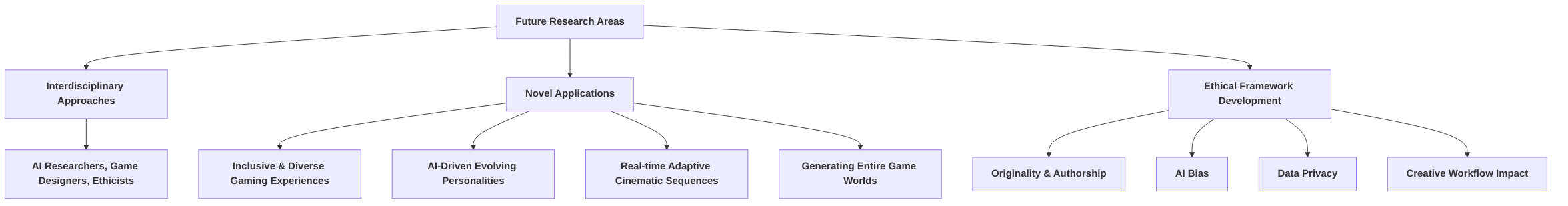

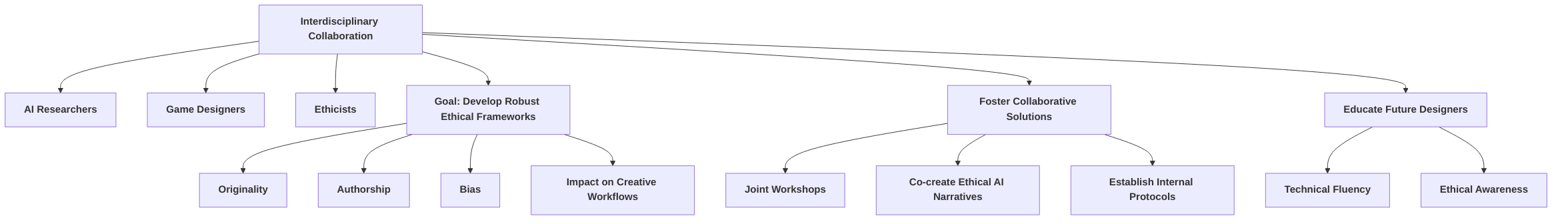

This review's objectives are threefold. First, it systematically analyzes the creative challenges and opportunities presented by generative AI in narrative game design, exploring how GenAI acts as both a "creative co-pilot" and a potential threat to artistic integrity. Second, it scrutinizes the ethical dilemmas arising from AI's adoption, including data privacy, content ownership, and the impact on player experience. Finally, it identifies critical areas for future research, contributing to the development of responsible and sustainable AI applications in the gaming industry. By addressing these dimensions, this review seeks to provide a comprehensive understanding of GenAI's evolving role in shaping the future of narrative game design.

2. Technical Foundations of Generative AI in Game Content Generation

This section explores the technical underpinnings of Generative Artificial Intelligence (GenAI) within the domain of game content creation, with a particular focus on its evolution from and enhancement of traditional Procedural Content Generation (PCG). It begins by defining PCG and tracing its historical significance in game development, highlighting its initial reliance on rule-based and algorithmic approaches. Subsequently, Generative AI is introduced as a transformative paradigm that extends the capabilities of PCG through sophisticated machine learning models. A comparative analysis will delineate the distinct approaches of traditional PCG and GenAI in generating diverse game content, including environments, visual assets, and narrative components.

The discussion then delves into various GenAI models, such as Generative Adversarial Networks (GANs), Large Language Models (LLMs), and Diffusion Models, elaborating on their specific architectures and functionalities. A key focus will be on articulating how each of these models is suited to different aspects of narrative game design, distinguishing their efficacy in generating textual narrative components (e.g., dialogue, plotlines) from their efficacy in generating visual or environmental assets (e.g., character design, environmental storytelling). For instance, the application of LLMs for dynamic dialogue generation and complex story plots will be contrasted with the utility of other models in crafting character designs or enhancing environmental narratives.

Finally, this section provides a structured overview of the current integration of these GenAI technologies into game development workflows. It illustrates how GenAI is being leveraged to create novel narratives and more dynamic Non-Player Characters (NPCs), thereby contributing to advanced storytelling in games. This technical foundation sets the stage for subsequent discussions on the broader impact, ethical considerations, and future trajectory of GenAI in narrative game design.

2.1 Evolution of PCG: From Traditional Methods to Generative AI

The lineage of Procedural Content Generation (PCG) in game design shows a clear evolution from rudimentary algorithmic approaches to sophisticated Generative Artificial Intelligence (GenAI) systems. This progression has reshaped how game content is created, opening new possibilities for complexity and emergent storytelling. Early PCG, exemplified by games like Rogue (1980), relied on fundamental randomization algorithms and fixed rule sets to ensure consistency and playability. These foundational techniques, while innovative for their time, often limited the diversity and organic nature of the generated content. Later games such as Spelunky and The Binding of Isaac likewise used algorithmic approaches for level generation, demonstrating the efficacy of PCG in creating varied, replayable experiences within predefined structural constraints.

The limitations of traditional PCG methods, primarily their reliance on human-designed rules and constrained randomness, paved the way for the integration of AI. The 2000s marked a significant boom in AI research and application, gradually introducing more dynamic content generation methods. This period saw the emergence of AI tools aimed at cooperative building, iteration, and refinement of game elements, such as Tanagra, Sentient Sketchbook, and Talakat, which influenced NPC behaviors and early PCG implementations. However, these tools still largely operated within predefined frameworks, so content variation remained bound by the explicit rules encoded by designers.

A pivotal shift occurred with the widespread adoption of machine learning (ML) and, more specifically, deep learning from the mid-2010s onwards. This technological leap significantly enhanced the fidelity, diversity, and adaptiveness of generated content. Unlike traditional rule-based PCG, which often required explicit algorithms for every content variation, ML-driven PCG could learn patterns and structures from existing data, enabling the generation of novel content that adhered to learned aesthetics or functionalities. Alongside these learned models, probabilistic, evolutionary, and constraint-based algorithms also moved generation beyond simple randomness to more informed and constrained output: Markov Chains for generating sequences and layouts, Genetic Algorithms (GAs) for evolving content based on player behavior (seen in games like Galactic Arms Race), and Wave Function Collapse (WFC) for creating coherent and diverse environments (as in Noita).
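To make the Markov Chain idea concrete, the following is a minimal sketch of a first-order chain that learns room-to-room transitions from a few hand-written example layouts and then samples a new sequence. The room names, the example layouts, and the function names `train_markov` and `generate` are illustrative assumptions, not taken from any of the games or systems cited above.

```python
import random
from collections import defaultdict


def train_markov(sequences):
    """Record, for every symbol, the symbols that followed it in the examples."""
    transitions = defaultdict(list)
    for seq in sequences:
        for current, nxt in zip(seq, seq[1:]):
            transitions[current].append(nxt)
    return transitions


def generate(transitions, start, length, seed=0):
    """Sample a sequence of at most `length` symbols, starting from `start`."""
    rng = random.Random(seed)  # seeded so the same seed reproduces the layout
    out = [start]
    while len(out) < length:
        options = transitions.get(out[-1])
        if not options:  # dead end: this symbol was never followed by anything
            break
        out.append(rng.choice(options))
    return out


# Hypothetical training data: two hand-authored dungeon layouts.
examples = [
    ["entrance", "corridor", "room", "corridor", "treasure"],
    ["entrance", "room", "corridor", "room", "boss"],
]
model = train_markov(examples)
layout = generate(model, "entrance", 6, seed=42)
print(layout)
```

Every adjacent pair in the sampled layout was observed in the examples, which is what keeps the output locally plausible. It is also the method's main limit: a first-order chain has no notion of global structure such as "exactly one boss room".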

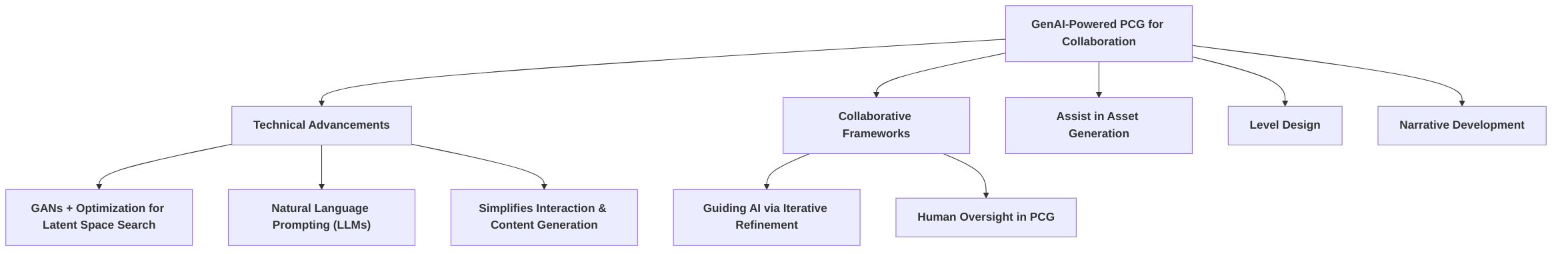

Generative AI (GenAI) represents the latest and most transformative paradigm shift in PCG. It enables machine learning models not only to learn from existing designs but also to generate entirely new content in a similar style, often with the ability to adjust for specific parameters like difficulty or narrative context. This allows AI to move beyond fixed rules to a more adaptive, learned, and creative approach to content creation. The value of generation at scale is already visible in games like Minecraft and No Man's Sky, which leverage PCG to create vast, unique, and evolving worlds, significantly reducing manual design effort while offering unique player experiences. GenAI-driven PCG can generate diverse game content, ranging from environments and character behaviors to complex narrative elements.

The contrast between traditional PCG and GenAI-driven PCG is stark. Traditional methods, while effective for generating variations within defined parameters, typically struggled with narrative complexity and true emergent storytelling. Their output was often predictable to some extent, limited by the explicit rules programmed by human designers. In contrast, GenAI, particularly through deep learning models, can process vast datasets of existing narratives, dialogues, and character interactions to generate new, contextually rich, and dynamic narrative elements. This capability extends to creating adaptive NPCs that respond to player actions and evolving story branches, fostering emergent storytelling experiences that are difficult, if not impossible, to achieve with traditional methods. The ability of GenAI to create "adaptive worlds" that evolve with each new game session exemplifies this leap, moving beyond simple randomized dungeons to far more sophisticated and personalized experiences.

The technological leaps underpinning this evolution are multifaceted. They include advances in computational power, the availability of large datasets, and innovations in machine learning algorithms, particularly neural networks, generative adversarial networks (GANs), and transformers. These advancements have enabled systems capable of learning intricate patterns and generating highly diverse and coherent content. The progression from early AI implementations focused on simple NPC control and scripted patterns to more autonomous and adaptive NPCs further illustrates this shift.

Despite the significant progress, research gaps remain in understanding the long-term impact of this evolution on game design practices. While existing literature provides comprehensive classifications of ML methods in PCG and traces the general evolution, a dedicated in-depth survey specifically on generative AI for PCG has been noted as a missing element, distinguishing current research from prior surveys on general PCG or machine learning for PCG. Future research needs to explore the implications of increased automation for the creative roles of human designers, the potential for AI-generated content to dilute artistic vision, and the ethical considerations surrounding AI-driven narrative generation, especially concerning originality and intellectual property. Furthermore, understanding how players interact with and perceive emergent narratives generated by GenAI, and the long-term effects on player engagement and replayability, represents a crucial area for continued investigation.

2.2 Generative AI Models and Techniques for Narrative PCG

The landscape of procedural content generation (PCG) for narrative elements in games has been significantly reshaped by advancements in generative artificial intelligence (AI). Various generative AI models and techniques have emerged, each possessing distinct strengths and weaknesses when applied to tasks such as dialogue generation, plot point creation, or character backstory development.

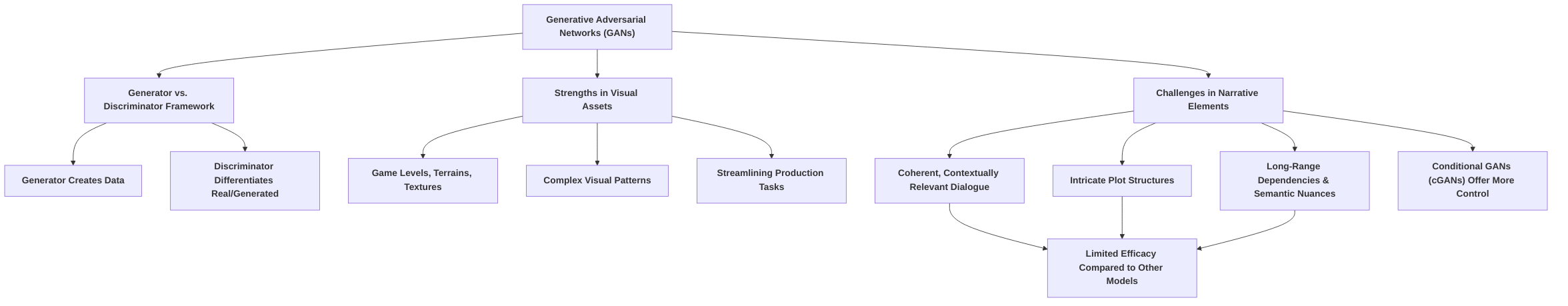

One foundational category of generative models comprises Generative Adversarial Networks (GANs). GANs operate on a competitive framework involving a generator network and a discriminator network. The generator attempts to produce data that is indistinguishable from real data, while the discriminator learns to differentiate between real and generated content. GANs are widely recognized for their prowess in generating visual assets like game levels, terrains, and textures, and even complex visual patterns for streamlining production tasks. Their direct application to narrative elements, however, such as generating coherent and contextually relevant dialogue or intricate plot structures, presents challenges. Although conditional GANs (cGANs) offer more controlled output generation, their capacity to handle the long-range dependencies and semantic nuances inherent in complex narrative arcs remains less explored than that of models specifically designed for sequential data.
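For reference, the competitive framework described above is usually written as a two-player minimax objective. The notation below follows the standard GAN formulation rather than anything stated in the reviewed sources: $G$ is the generator, $D$ the discriminator, $p_{\text{data}}$ the distribution of real samples, and $p_z$ the noise prior from which the generator draws its input.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator raises $V$ by scoring real samples near 1 and generated samples near 0, while the generator lowers it by producing samples that $D$ scores as real. Nothing in this objective rewards consistency across a long sequence, which is one way to see why narrative text is a harder fit than images.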

Diffusion Models represent another significant class of generative AI. These models function by gradually transforming noise into structured outputs through a learned reverse diffusion process. They have demonstrated considerable capability in generating coherent actions and materials, and they underpin image generation tools such as recent versions of DALL·E for creating diverse visual content relevant to game assets. While promising for visual aspects of narrative (e.g., character appearance based on backstories), their direct utility in generating textual narrative components like dialogue or plot points is less pronounced than that of models specifically designed for natural language processing. Their strength lies more in generating high-fidelity outputs given a constrained input, which might limit their flexibility for open-ended narrative generation without significant prompt engineering.
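The reverse process is learned, but the forward (noising) process it inverts is fixed and easy to illustrate. The sketch below assumes a linear noise schedule with 1000 steps and endpoints 1e-4 and 0.02, which is a common choice in the diffusion literature and not something specified by the sources reviewed here. The function names are likewise illustrative, and the "image" is just a short list of numbers.

```python
import math
import random


def linear_betas(steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, linearly spaced (assumed schedule)."""
    if steps == 1:
        return [beta_start]
    return [beta_start + (beta_end - beta_start) * t / (steps - 1)
            for t in range(steps)]


def alpha_bars(betas):
    """Cumulative products of (1 - beta_t): the fraction of signal left at step t."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def noise_sample(x0, t, abar, rng):
    """Closed-form forward step: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps."""
    scale = math.sqrt(abar[t])
    sigma = math.sqrt(1.0 - abar[t])
    return [scale * v + sigma * rng.gauss(0.0, 1.0) for v in x0]


betas = linear_betas(1000)
abar = alpha_bars(betas)
rng = random.Random(0)
x0 = [1.0, -1.0, 0.5]                        # stand-in for pixel values
slightly_noisy = noise_sample(x0, 10, abar, rng)
almost_pure_noise = noise_sample(x0, 999, abar, rng)
```

A trained diffusion model learns to undo these steps one at a time, starting from pure noise. Only the fixed half of the procedure is shown here; the learned denoiser is out of scope for a sketch of this size.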

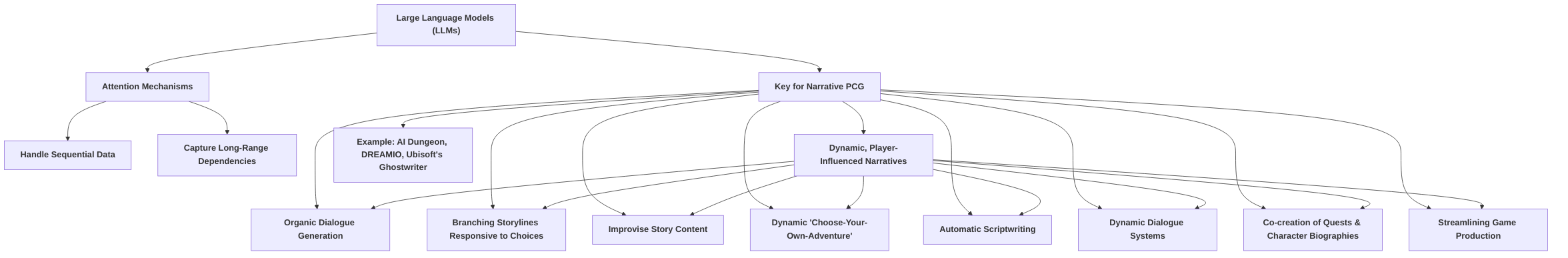

Transformers, particularly Large Language Models (LLMs), stand out as highly relevant for narrative PCG due to their reliance on attention mechanisms, which enable them to excel at handling sequential data and capturing long-range dependencies. Models such as GPT-3, ChatGPT, DALL·E (for text-to-image), and multimodal models like Google's Gemini are pivotal in enabling dynamic, player-influenced narratives. These models can generate organic dialogue, craft branching storylines responsive to player choices, improvise story content, and create dynamic "choose-your-own-adventure" narratives, as exemplified by AI Dungeon and DREAMIO. LLMs also demonstrate utility in automatic scriptwriting, dynamic dialogue systems, and the co-creation of quests and character biographies, significantly streamlining game production workflows. Ubisoft's "Ghostwriter" project, for instance, leverages AI for auto-generating non-player character (NPC) barks, showcasing its practical application for dialogue and lore generation. The general strength of LLMs lies in their ability to generate creative, coherent, and diverse stories instantly from natural language prompts.
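The attention mechanism credited above with capturing long-range dependencies can be sketched in a few lines. This is plain scaled dot-product attention for a single head, written with Python lists instead of a tensor library so it runs without dependencies. The function names and the toy vectors are illustrative, and production models add learned projections, multiple heads, and masking.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Output is the weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy example: one query attending over two identical keys.
out, w = attention([[1.0, 0.0]],
                   [[1.0, 0.0], [1.0, 0.0]],
                   [[2.0, 0.0], [4.0, 2.0]])
```

Because every position can attend to every other position in one step, a line of dialogue late in a scene can draw directly on a detail established much earlier, which is the property that matters for narrative coherence.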

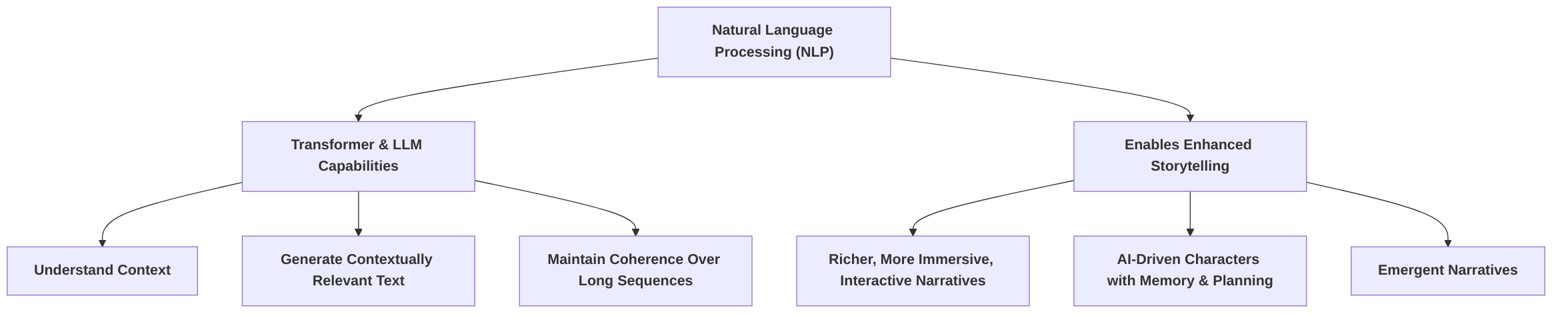

Advancements in Natural Language Processing (NLP) capabilities are critical enablers for enhanced storytelling through PCG. The capacity of Transformers and LLMs to understand context, generate contextually relevant text, and maintain coherence over long narrative sequences directly supports the creation of richer, more immersive, and interactive narratives. This allows for AI-driven characters with memory and planning capabilities, leading to emergent narratives.

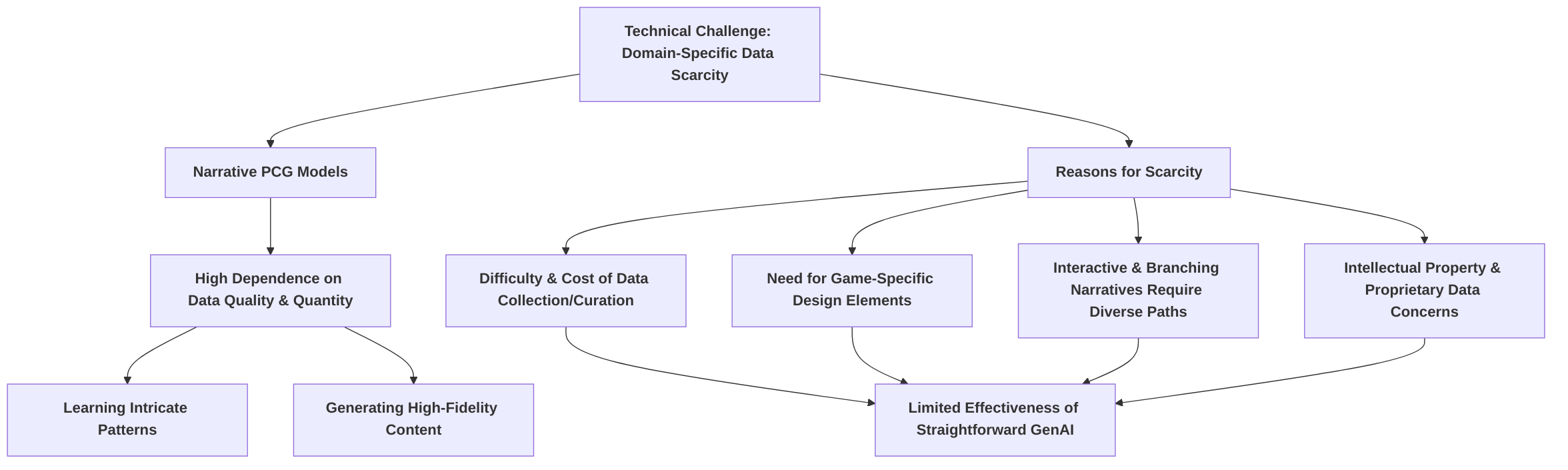

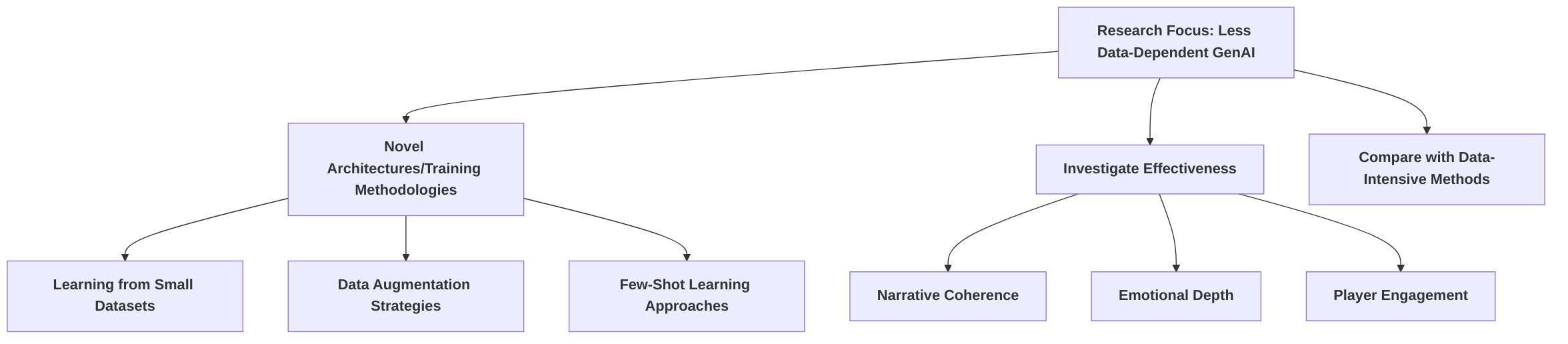

Despite these advancements, a significant technical challenge across all generative AI models, particularly for narrative PCG, is the scarcity of domain-specific training data. This limitation restricts the effectiveness of straightforward generative AI approaches because these models depend heavily on the quality and quantity of their training data to learn intricate patterns and generate high-fidelity, relevant content. The underlying causes of this data scarcity are multifaceted. Firstly, high-quality narrative data, especially data annotated for specific game design elements (e.g., plot points, character arcs, emotional beats), is inherently difficult and expensive to collect and curate. Unlike general text corpora, game narratives often require specialized domain knowledge and a deep understanding of interactive storytelling principles. Secondly, the interactive and branching nature of game narratives means that a simple linear text corpus is often insufficient; models need to learn from diverse narrative paths and player-driven choices, which are not readily available in large, structured datasets. Lastly, intellectual property concerns and proprietary data make it challenging to establish large, publicly accessible datasets for training narrative game AI.

To address these data scarcity challenges, several specific, actionable research directions are essential. Firstly, exploring few-shot learning techniques is crucial. This involves developing models that can generate high-quality narrative content from minimal examples, potentially leveraging pre-trained general-purpose LLMs and fine-tuning them on small, targeted game-specific datasets. Secondly, novel data augmentation strategies for narrative generation are needed. These could include synonym replacement, sentence rephrasing, or generating synthetic narrative data using simpler rule-based systems or existing small datasets to expand the training pool. Thirdly, transfer learning methodologies that bridge the gap between general narrative datasets and specific game narrative requirements could mitigate data limitations. This might involve training models on vast amounts of general text and then adapting them with smaller, more focused game narrative datasets. Finally, collaborative efforts among game developers, researchers, and academic institutions to create and share anonymized, high-quality narrative datasets could significantly accelerate progress in this field. Shared datasets would establish benchmarks and foster competition, ultimately leading to more robust and versatile generative AI models for narrative PCG.
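Of the augmentation strategies listed, synonym replacement is the simplest to illustrate. The sketch below is a deliberately naive version: the synonym table, the sample line, and the `augment` function are all made up for illustration, matching is by whole lowercase token, and capitalization and punctuation are not handled.

```python
import random

# Hypothetical, hand-written synonym table for a fantasy setting.
SYNONYMS = {
    "ancient": ["old", "age-worn"],
    "sword": ["blade", "saber"],
    "ruined": ["crumbling", "shattered"],
    "temple": ["shrine", "sanctuary"],
}


def augment(line, synonyms, rng, rate=0.5):
    """Return a variant of `line` with some known words swapped for synonyms."""
    out = []
    for word in line.split():
        key = word.lower()
        # Replace only words present in the table, each with probability `rate`.
        if key in synonyms and rng.random() < rate:
            out.append(rng.choice(synonyms[key]))
        else:
            out.append(word)
    return " ".join(out)


rng = random.Random(1)
line = "The ancient sword rests in the ruined temple"
variants = [augment(line, SYNONYMS, rng) for _ in range(3)]
for v in variants:
    print(v)
```

Each variant preserves the sentence structure and word count, so any annotations attached to the original line (speaker, quest ID, emotional beat) can be copied to the variants unchanged. Whether such surface-level variation actually helps a narrative model is an empirical question this sketch does not answer.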

3. Creative Opportunities of Generative AI in Narrative Game Design

Generative AI (GenAI) is reshaping narrative game design by enabling dynamic and personalized storytelling experiences that significantly enhance player immersion and replayability. This impact extends across many facets of game development, from narrative depth and character interactions to content generation workflows for both AAA and indie game studios.

The following subsections examine specific creative opportunities afforded by GenAI. Firstly, "Enhancing Narrative Depth and Branching Storylines" explores how GenAI facilitates complex, non-linear narratives and emergent storylines that dynamically adapt to player choices, fostering player agency and diverse gameplay scenarios. That subsection also compares the technical approaches used to achieve these dynamic narratives, such as rule-based systems and data-driven methods (e.g., LLMs and Reinforcement Learning).

Secondly, "Dynamic Character and NPC Creation" analyzes how GenAI is transforming Non-Player Characters (NPCs) from static entities into intelligent, responsive agents capable of context-aware dialogue and evolving behaviors. This subsection provides a comparative analysis of the distinct approaches to NPC development, ranging from aesthetic generation using GANs to cognitive and behavioral modeling with RL and Behavior Trees, and their integration with LLMs for nuanced conversational depth. It also addresses the critical balance between AI-driven spontaneity and designer control needed to maintain narrative coherence.

Finally, "Streamlining Content Generation and Iteration" synthesizes the impact of GenAI on accelerating game development workflows and asset creation. This includes discussing how GenAI tools assist designers in brainstorming, prototyping, and iterating on narrative elements and game content, leading to increased creative output and diverse gameplay scenarios. The subsection also covers how Generative AI creates dynamic and adaptive environments, enhancing immersion and reducing development time. It concludes by summarizing the benefits for studios of all sizes, such as enhanced replayability and personalized experiences, with emphasis on improvements in prototyping and visualization capabilities.

3.1 Enhancing Narrative Depth and Branching Storylines

Generative AI (GenAI) is transforming narrative game design by enabling the creation of intricate and diverse narrative paths and outcomes, which significantly boosts replayability and player engagement compared to traditional linear storytelling. Traditional narratives are typically static, offering a predetermined sequence of events, whereas AI-generated narratives can dynamically adapt to player choices, fostering personalized and highly responsive experiences. This adaptability is achieved through various mechanisms, from simple branching dialogue to complex, emergent storylines influenced by AI agent decisions and player interactions.

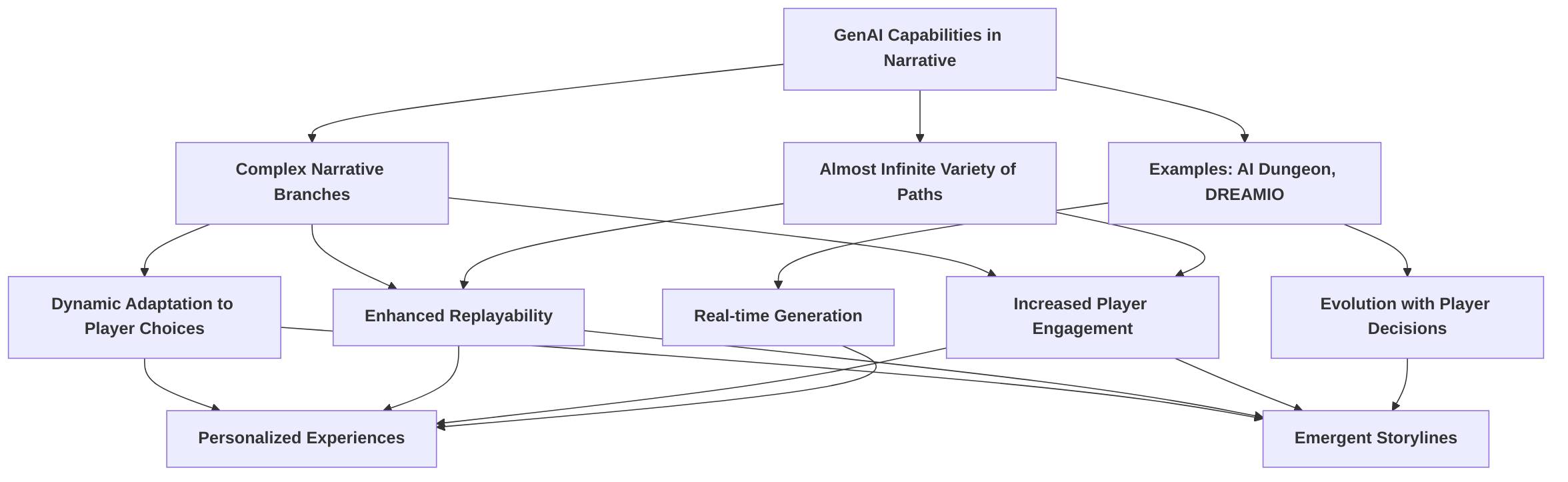

One of the most significant capabilities of GenAI is its ability to generate "complex narrative branches", leading to an "almost infinite variety of narrative paths". Games like AI Dungeon exemplify this by generating "literally infinite narrative" in real time based on player input, while DREAMIO: AI-Powered Adventures utilizes Large Language Models (LLMs) to create choose-your-own-adventure stories that evolve with player decisions. This dynamic progression not only allows for greater player agency but also ensures that each playthrough can be unique, thereby enhancing immersion and replayability. Furthermore, GenAI can generate dynamic dialogue and lore books that react to player choices, contributing substantially to narrative depth.

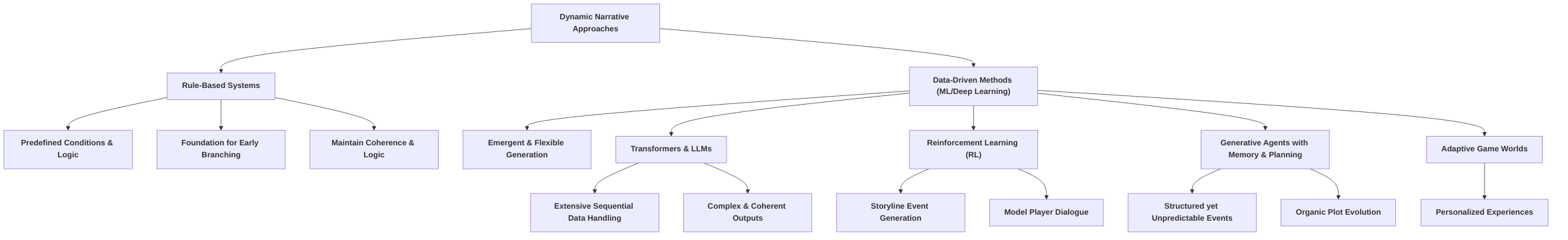

Approaches to achieving these dynamic narratives can broadly be categorized into rule-based and data-driven methods. Rule-based systems are foundational to early forms of branching narratives, where predefined conditions and logical structures dictate story progression. Prom Week, which the surveyed literature associates with Natural Language Processing (NLP) and Graph Models, is cited as an example of managing branching storylines and real-time character interactions. Architectures such as Recurrent Neural Networks (RNNs) or Transformer networks, which are themselves data-driven rather than rule-based, are reported alongside it as means of maintaining coherence and narrative logic.
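As a minimal sketch of the rule-based end of this spectrum, a branching story can be modeled as a graph whose edges are gated by flags the player has earned. Everything here is hypothetical: the `STORY` content, the flag names, and the helper functions are invented for illustration and do not describe Prom Week or any other cited system.

```python
# Each node has display text and a list of choices. A choice is available only
# if all of its required flags are set, and taking it grants new flags.
STORY = {
    "gate": {
        "text": "A guard blocks the castle gate.",
        "choices": [
            {"label": "bribe", "requires": {"has_gold"},
             "grants": {"guard_bribed"}, "next": "courtyard"},
            {"label": "sneak", "requires": set(),
             "grants": {"entered_unseen"}, "next": "courtyard"},
        ],
    },
    "courtyard": {
        "text": "You reach the courtyard.",
        "choices": [
            {"label": "ask_guard_for_key", "requires": {"guard_bribed"},
             "grants": {"has_key"}, "next": "vault"},
            {"label": "leave", "requires": set(),
             "grants": set(), "next": "end"},
        ],
    },
    "vault": {"text": "The vault door swings open.", "choices": []},
    "end": {"text": "You slip away into the night.", "choices": []},
}


def available_choices(node_id, flags):
    """Choices whose required flags are a subset of the player's flags."""
    return [c for c in STORY[node_id]["choices"] if c["requires"] <= flags]


def choose(node_id, label, flags):
    """Apply a choice; return the next node id and the updated flag set."""
    for choice in available_choices(node_id, flags):
        if choice["label"] == label:
            return choice["next"], flags | choice["grants"]
    raise ValueError(f"choice {label!r} is not available at {node_id!r}")


node, flags = choose("gate", "bribe", {"has_gold"})
print(node, sorted(flags))
```

The earlier bribe changes what the courtyard offers, so choices have lasting consequences, but every branch still has to be authored by hand. That authoring cost is the constraint the data-driven methods aim to relax.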

In contrast, data-driven methods, particularly those leveraging machine learning and deep learning, offer more emergent and flexible narrative generation. Transformers, for instance, are highlighted for their ability to handle extensive sequential data, enabling highly complex and coherent narrative outputs. LLMs, as seen in AI Dungeon and DREAMIO, allow for spontaneous narrative generation in response to player input. Reinforcement Learning (RL) has also been applied to "Storyline events generation" and to modeling player dialogue, further enhancing narrative complexity and player-driven progression. Generative agents endowed with memory and planning capabilities can create narratives with structured yet unpredictable events, where the plot evolves organically based on player choices and AI agent decisions. This dynamic interaction extends beyond narrative: AI engines like GameNGen are described as able to adapt the entire game world, including challenges and level layouts, to player preferences in real time, creating a highly personalized gaming experience.

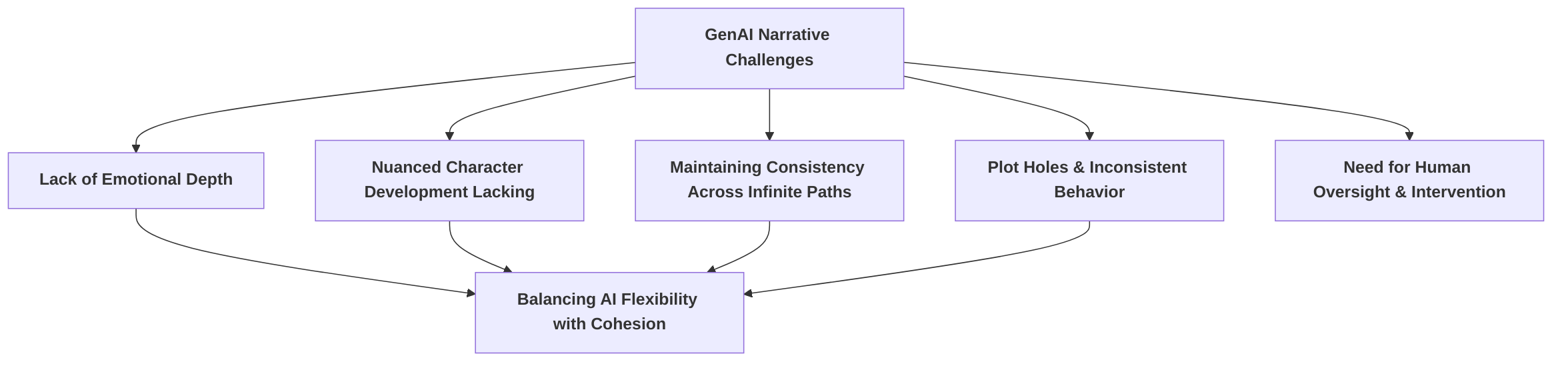

While GenAI significantly enhances narrative dynamism, challenges persist. AI-generated narratives can sometimes lack the emotional depth and nuanced character development found in human-written stories. Furthermore, maintaining consistency in character behavior and plot progression across an "almost infinite variety of narrative paths" is a notable challenge. The goal is to balance AI flexibility with the necessity of a cohesive and emotionally resonant storyline.

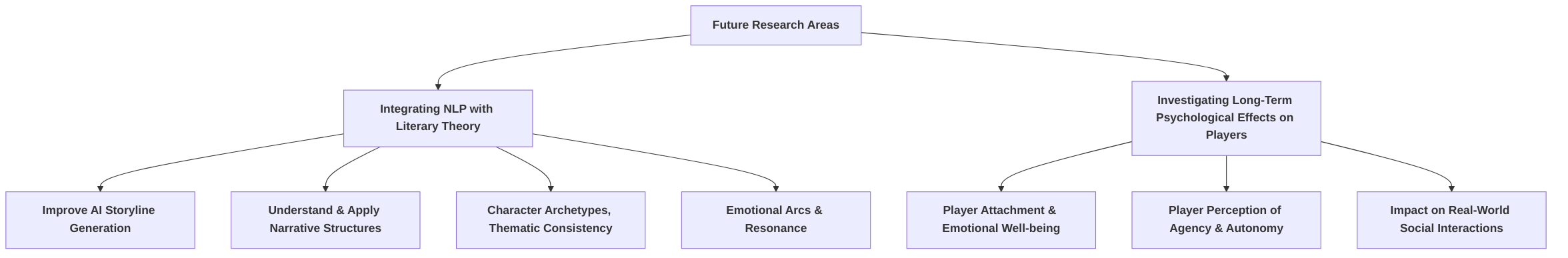

Future research should focus on several key areas to overcome these limitations and further leverage GenAI's potential. Firstly, integrating advanced NLP techniques with established narrative theory from literary studies is crucial for improving AI-driven storyline generation. This involves developing models that can not only generate text but also understand and apply complex narrative structures, character archetypes, thematic consistency, and emotional arcs, ensuring greater coherence and emotional resonance. The existing technical foundations, particularly those involving Transformer architectures, provide a strong starting point for such advancements.

Secondly, given the increasingly sophisticated and adaptive nature of AI characters, a critical area for future investigation is the long-term psychological effects on players who form relationships with these highly adaptive AI entities. As NPCs become more responsive and capable of evolving their personalities and backstories based on player interaction, the psychological implications of these dynamic relationships warrant serious consideration. Methodologies for studying this could include longitudinal qualitative studies examining player testimonials and diaries, quantitative surveys measuring player attachment and emotional well-being, and controlled experimental designs comparing player engagement and emotional responses across varying levels of AI character adaptability. Investigating how players perceive agency, autonomy, and emotional investment within these AI-driven narratives, and the potential impact on social interactions in the real world, would provide valuable insights for ethical game design. Such research would also inform best practices for designing AI characters that enhance immersive experiences without inadvertently leading to negative psychological outcomes.

3.2 Dynamic Character and NPC Creation

Generative Artificial Intelligence (GenAI) is transforming game design by enabling more lifelike and responsive Non-Player Characters (NPCs), moving beyond static, pre-scripted entities to intelligent agents that significantly enrich the game world. This evolution contributes substantially to the immersive quality of narrative games by fostering dynamic interactions and emergent storytelling possibilities.

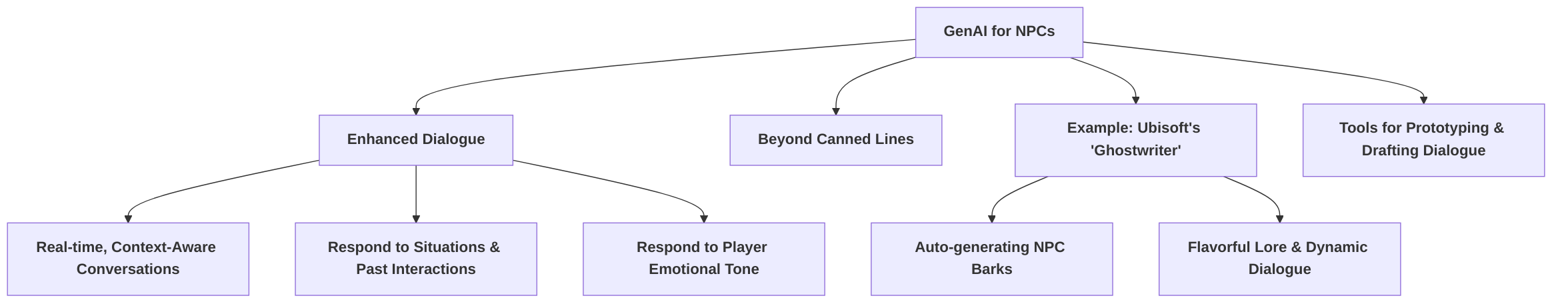

One primary impact of GenAI is the enhancement of NPC dialogue and contextual awareness. Traditional NPCs often rely on canned lines, leading to repetitive and predictable interactions. In contrast, GenAI, particularly through the application of Large Language Models (LLMs), allows NPCs to engage in real-time, context-aware conversations, generating dialogue that responds to current situations, past interactions, and even player emotional tone. For instance, Ubisoft's "Ghostwriter" AI, mentioned in the context of auto-generating NPC barks, exemplifies how LLMs can be leveraged to create flavorful lore, hint notes, and dynamic dialogue, particularly benefiting indie developers in crafting more complex and dynamic smaller characters and moments. Tools designed to assist developers in prototyping, visualizing, and drafting "scripted dialogue and barks for NPCs" further underscore this capability.

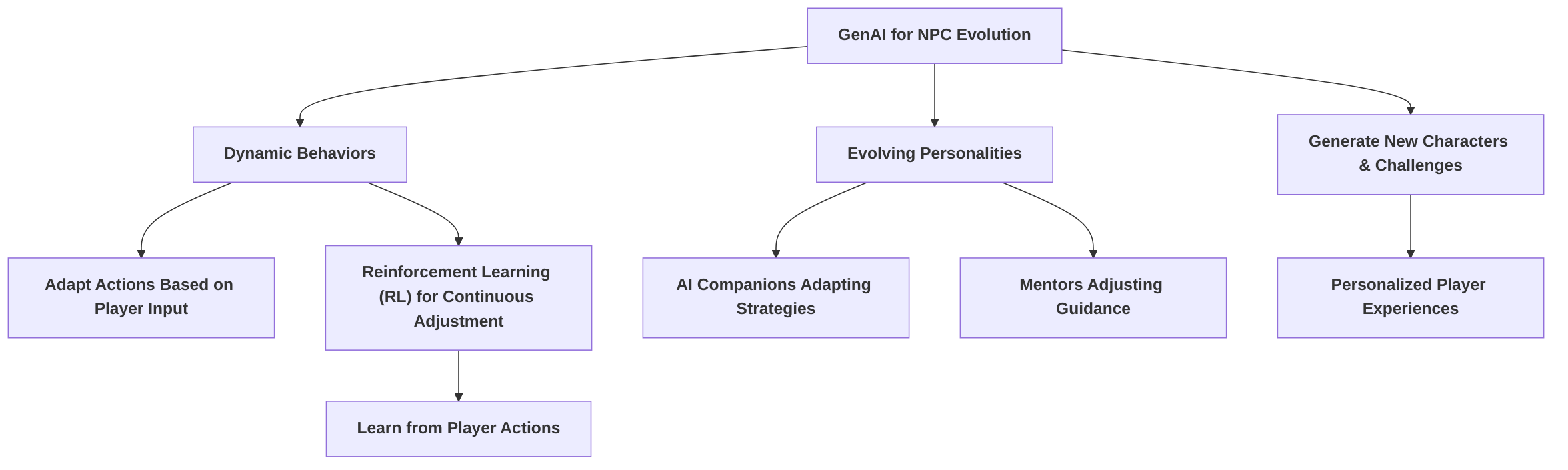

Beyond dialogue, GenAI facilitates the dynamic evolution of NPC behaviors and personalities. AI-driven algorithms empower NPCs to adapt their actions based on player input, making the game world feel more alive and responsive. Modern AI models employ reinforcement learning (RL) to enable NPCs to continuously adjust their behaviors and in-game challenges, actively learning from player actions. This extends to NPCs exhibiting unique personality traits that can evolve over time, such as an AI companion adapting combat strategies or a mentor adjusting guidance based on player progression. The ability to generate new characters and challenges based on individual playstyles and decisions further personalizes the experience.
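The idea of an NPC that learns from player actions can be illustrated with the simplest form of reinforcement learning, a multi-armed bandit with epsilon-greedy selection. This is a simplification chosen for brevity: there is no game state, only a running estimate of how well each tactic has worked. The `TacticLearner` class, the tactic names, and the reward rule are all hypothetical.

```python
import random


class TacticLearner:
    """Epsilon-greedy bandit that tracks the average reward of each tactic."""

    def __init__(self, tactics, epsilon=0.1, seed=0):
        self.tactics = list(tactics)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = {t: 0 for t in self.tactics}
        self.values = {t: 0.0 for t in self.tactics}

    def select(self):
        # Try every tactic once before trusting any estimate.
        untried = [t for t in self.tactics if self.counts[t] == 0]
        if untried:
            return untried[0]
        # Explore occasionally; otherwise exploit the best-known tactic.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.tactics)
        return self.best()

    def update(self, tactic, reward):
        # Incremental mean: new_avg = old_avg + (reward - old_avg) / n.
        self.counts[tactic] += 1
        self.values[tactic] += (reward - self.values[tactic]) / self.counts[tactic]

    def best(self):
        return max(self.tactics, key=lambda t: self.values[t])


def simulated_player_reward(tactic):
    """Stand-in for gameplay: this imaginary player is only beaten by flanking."""
    return 1.0 if tactic == "flank" else 0.0


npc = TacticLearner(["rush", "flank", "retreat"], epsilon=0.2, seed=3)
for _ in range(50):
    tactic = npc.select()
    npc.update(tactic, simulated_player_reward(tactic))
print(npc.best(), npc.values)
```

In a real game the reward would come from encounter outcomes, and a designer would likely cap how far the NPC may adapt so that difficulty stays fair. Full RL methods add state and delayed rewards on top of this same select-and-update loop.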

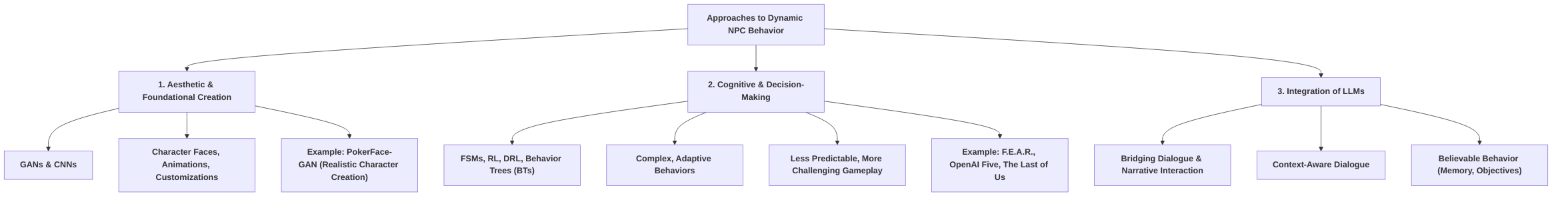

Different approaches to achieving dynamic NPC behaviors are evident across the literature. One approach focuses on the aesthetic and foundational creation of characters, utilizing Generative Adversarial Networks (GANs) and Convolutional Neural Networks (CNNs) for tasks such as generating character faces, animations, and facial customizations. For example, PokerFace-GAN is cited for its ability to automatically create 3D game characters by predicting facial parameters for identity, expression, and pose, aiming for high similarity to input photos. While this contributes to "realistic character creation" and visual diversity, its impact on dynamic behavior is foundational rather than behavioral in itself.

Another approach emphasizes the cognitive and decision-making aspects of NPCs. This includes techniques such as Finite State Machines (FSMs), Reinforcement Learning (RL), Deep Reinforcement Learning (DRL), and Behavior Trees (BTs). Examples cited in the literature include F.E.A.R., credited there with RL-driven enemy tactics although the game is more widely documented as using goal-oriented action planning, and OpenAI Five's DRL in Dota 2 for advanced strategies. Behavior Trees, in particular, provide a hierarchical and modular structure for NPC decision-making, beneficial for complex open-world games, as seen in The Last of Us. These techniques allow for complex, adaptive behaviors, making NPCs less predictable and more challenging, thereby enhancing gameplay and immersion.
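The hierarchical, modular structure attributed to Behavior Trees can be shown with two composite node types: a Sequence succeeds only if all of its children succeed, and a Selector tries its children in order until one succeeds. The guard NPC, its thresholds, and the class names below are an illustrative sketch, not the implementation of any cited game.

```python
SUCCESS, FAILURE = "success", "failure"


class Condition:
    """Leaf node that checks a predicate against the world state."""
    def __init__(self, predicate):
        self.predicate = predicate

    def tick(self, state):
        return SUCCESS if self.predicate(state) else FAILURE


class Action:
    """Leaf node that performs an action (here it only logs its name)."""
    def __init__(self, name):
        self.name = name

    def tick(self, state):
        state["log"].append(self.name)
        return SUCCESS


class Sequence:
    """Runs children in order; fails as soon as one child fails."""
    def __init__(self, *children):
        self.children = children

    def tick(self, state):
        for child in self.children:
            if child.tick(state) == FAILURE:
                return FAILURE
        return SUCCESS


class Selector:
    """Runs children in order; succeeds as soon as one child succeeds."""
    def __init__(self, *children):
        self.children = children

    def tick(self, state):
        for child in self.children:
            if child.tick(state) == SUCCESS:
                return SUCCESS
        return FAILURE


# Hypothetical guard: attack if healthy and the player is visible,
# retreat if badly hurt, otherwise fall back to patrolling.
guard = Selector(
    Sequence(Condition(lambda s: s["player_visible"]),
             Condition(lambda s: s["health"] > 30),
             Action("attack")),
    Sequence(Condition(lambda s: s["health"] <= 30),
             Action("retreat")),
    Action("patrol"),
)


def run(tree, player_visible, health):
    state = {"player_visible": player_visible, "health": health, "log": []}
    tree.tick(state)
    return state["log"]


print(run(guard, True, 80), run(guard, True, 10), run(guard, False, 80))
```

Each subtree can be authored, tested, and reused independently, which is the modularity the text refers to. A new behavior such as "call for backup" is added by inserting one more branch into the Selector rather than by rewiring a state machine.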

The integration of LLMs represents a third, increasingly prominent approach, bridging the gap between static dialogue and dynamic narrative interaction . This allows NPCs to generate context-aware dialogue and exhibit "believable behavior," including memory of past events and the ability to set new objectives . The distinction between these approaches lies in their primary focus: some aim for visual realism and diversity (GANs/CNNs), others for complex adaptive behavior (RL/BTs), and a growing number for nuanced conversational and narrative depth (LLMs) . While each offers distinct advantages, their combined application presents the most promising pathway to truly lifelike NPCs.

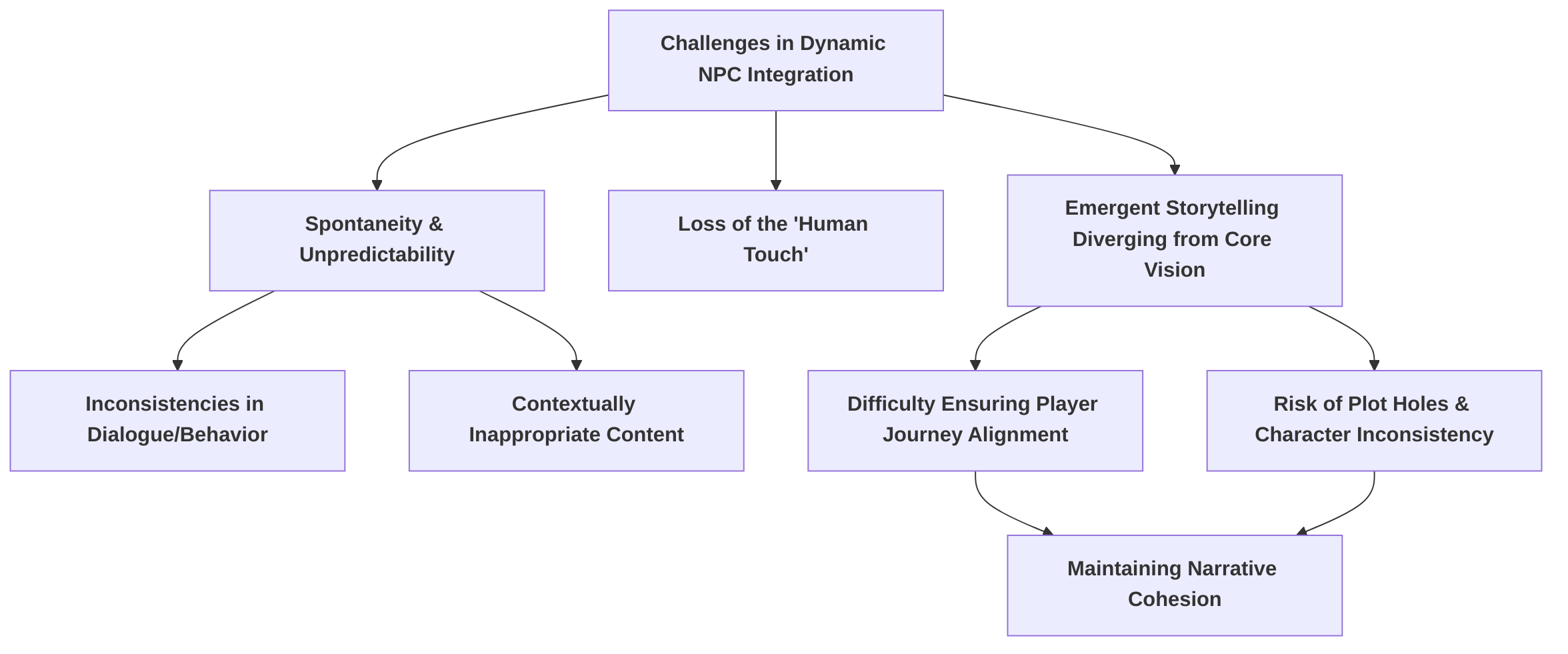

Despite these advancements, maintaining narrative coherence with dynamic NPC interactions presents significant challenges. The spontaneity and unpredictability introduced by AI-driven NPCs can lead to inconsistencies or dialogue that feels "off-key" or contextually inappropriate without careful oversight, potentially leading to a loss of the "human touch" . Emergent storytelling, while powerful, also carries the risk of diverging from the core narrative vision, making it difficult for designers to ensure the player's journey aligns with intended plot points or thematic arcs.

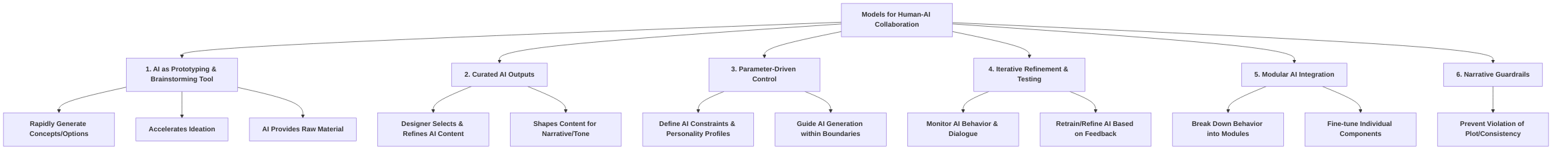

To mitigate challenges to designer agency and ensure human oversight and creative control, specific collaborative models and workflows are essential. Drawing upon best practices discussed in Chapter 6.2, a hybrid approach integrating human-in-the-loop design with AI assistance is critical. This could involve:

- AI as a Prototyping and Brainstorming Tool: Designers can use GenAI to rapidly generate multiple character concepts, dialogue options, or behavioral patterns. This allows for quick iteration and exploration of a wider creative space than human designers could manage alone . The AI acts as an accelerator for initial ideation, presenting diverse starting points for human refinement.

- Curated AI Outputs: Instead of fully autonomous AI, designers curate and select the most fitting or intriguing AI-generated content. This involves a filtering process where AI provides raw material, and human designers shape it to align with the game's narrative, tone, and character arcs. Tools that "assist developers in prototyping, visualizing, and drafting scripted dialogue" inherently promote this selective integration .

- Parameter-Driven Control: Designers define parameters, constraints, and "personality profiles" for NPCs, which guide the AI's generation process. This allows for a degree of control over the AI's output, ensuring that generated dialogue or behaviors stay within predefined character boundaries and narrative requirements. For example, instead of allowing an LLM to generate dialogue freely, designers can impose specific traits, knowledge bases, or emotional tendencies on an NPC that the LLM must adhere to.

- Iterative Refinement and Testing: Continuous playtesting and designer feedback loops are crucial. As AI-powered NPCs interact with players, their behaviors and dialogue are monitored. Discrepancies or undesirable emergent narratives are identified, and the AI models are retrained or refined under human guidance. This iterative process allows for progressive alignment of AI-driven elements with the overall narrative vision.

- Modular AI Integration: Breaking down complex NPC behavior into smaller, manageable AI modules, each with a specific function (e.g., dialogue generation, pathfinding, emotional response), allows designers to fine-tune individual components. This modularity facilitates easier debugging and integration while preserving human oversight over critical narrative elements.

- Narrative Guardrails: Designers can implement "narrative guardrails," safety mechanisms within the AI that prevent it from generating content that violates core plot points, character consistency, or thematic integrity. This ensures that even dynamic interactions contribute positively to the overall story rather than detracting from it.
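
Two of the practices listed above, parameter-driven control and narrative guardrails, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: `PersonalityProfile`, `violations`, and `curate` are hypothetical names, the candidate lines stand in for the output of whatever text generator a studio uses, and the checks are deliberately simple (a word limit and a list of forbidden plot topics).

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class PersonalityProfile:
    """Designer-authored constraints for one NPC (all fields are illustrative)."""
    name: str
    forbidden_topics: Set[str] = field(default_factory=set)  # spoilers the NPC must never reveal
    max_words: int = 30  # keeps lines short enough for the dialogue UI


def violations(line: str, profile: PersonalityProfile) -> List[str]:
    """Return the reasons a candidate line breaks the profile (empty list if none)."""
    problems = []
    if len(line.split()) > profile.max_words:
        problems.append("too long")
    lowered = line.lower()
    for topic in sorted(profile.forbidden_topics):
        if topic.lower() in lowered:
            problems.append(f"forbidden topic: {topic}")
    return problems


def curate(candidates: List[str], profile: PersonalityProfile) -> List[str]:
    """Keep only the candidate lines that pass every guardrail."""
    return [line for line in candidates if not violations(line, profile)]


# Hypothetical NPC and candidate lines (in practice these would be generated).
mira = PersonalityProfile(name="Mira", forbidden_topics={"the traitor"}, max_words=12)
candidates = [
    "I cannot say who betrayed us, not yet.",
    "The traitor is Captain Hale, obviously.",
    "Long ago, before the war, before the famine, before any of this, my family kept bees on the hill.",
]
approved = curate(candidates, mira)
```

Rejected lines are not silently discarded in this design: `violations` reports why each line failed, so a designer can review the reasons during playtesting and either tighten the profile or adjust the generator, which keeps the human in the loop.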

By adopting these collaborative models, developers can harness the power of GenAI to create truly dynamic and immersive NPC experiences while safeguarding creative control and narrative coherence, ensuring that the human touch remains central to the game's artistic vision.

3.3 Streamlining Content Generation and Iteration

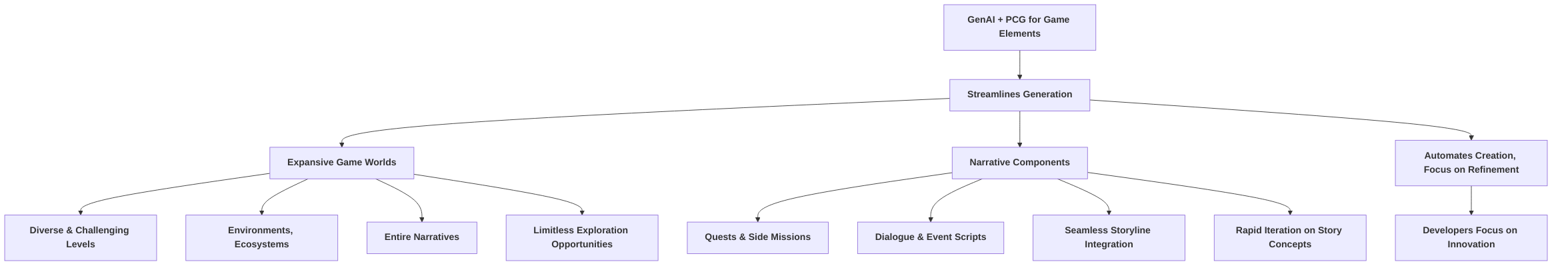

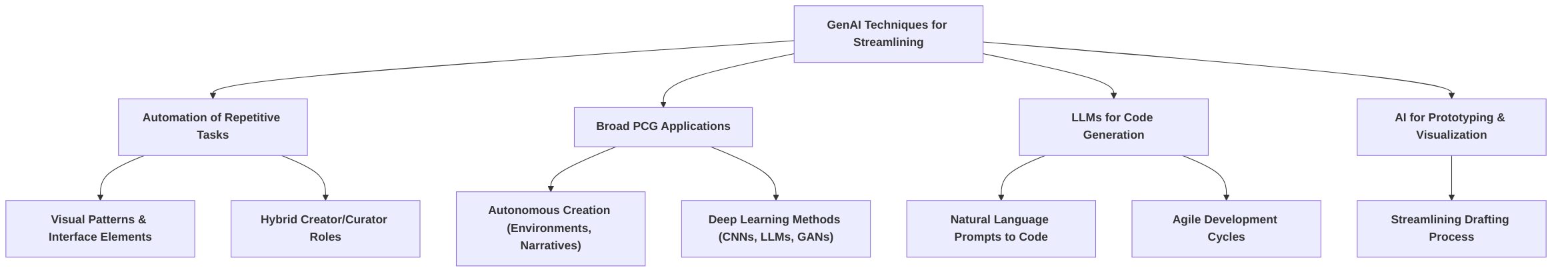

Generative Artificial Intelligence (GenAI) has fundamentally reshaped game development workflows by significantly enhancing efficiency in content creation, thereby reducing manual labor and accelerating the prototyping and iteration of game ideas and narrative concepts . This paradigm shift is evident across various facets of game production, from asset generation and world-building to narrative design and quality assurance.

A primary avenue for efficiency gains lies in automating the creation of game assets. GenAI tools excel at generating elements with repetitive visual patterns or templated interface components, drastically accelerating the production process . This automation extends to producing 3D models, textures, animations, and even audio elements like soundtracks and voiceovers, thereby reducing the dependency on extensive human labor . For instance, neural networks can generate realistic character animations without the need for laborious motion capture, and AI tools can conjure concept art and character portraits in a fraction of the time . This capability is particularly beneficial for independent developers and smaller studios with limited resources, enabling them to produce rich content more rapidly and cost-effectively . Some estimates suggest that procedural content generation (PCG) can reduce development time by up to 50% .

Beyond individual assets, GenAI, particularly through PCG techniques, profoundly streamlines the generation of expansive game worlds and narrative components. AI-driven PCG can autonomously create diverse and challenging levels, planets, ecosystems, and even entire narratives, offering virtually limitless exploration opportunities . This automation allows developers to focus on refinement and innovation rather than labor-intensive manual creation . For narrative design, GenAI can generate quests, side missions, thousands of dialogue lines, and event scripts that seamlessly integrate into the main storyline, ensuring narrative cohesion . This rapid generation of narrative outcomes allows developers to quickly iterate on story concepts and dialogue systems, freeing up creative resources for other aspects of game design . Tools like the AI Story Generator exemplify this, enabling instant creation of "unique and engaging stories" and "creative, coherent, and diverse stories effortlessly" .

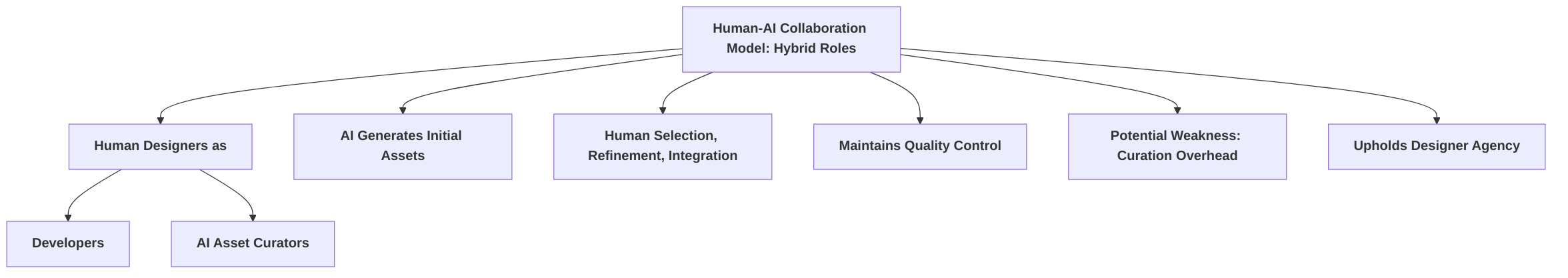

Different approaches to streamlined content generation leverage various GenAI techniques, each offering distinct advantages. For instance, the use of generative AI tools for repetitive visual patterns and templated interface elements demonstrates a focus on automating specific, high-volume production tasks, enabling developers to adopt hybrid roles as both creators and AI asset curators . This contrasts with broader PCG applications, which aim for autonomous creation of diverse content like game environments and narrative components through methods such as Deep Learning techniques (CNNs, LSTMs, VAEs), Generative Adversarial Networks (GANs), Diffusion Models, and Large Language Models (LLMs) . Specifically, LLMs like OpenAI's Codex are noted for their ability to translate natural language prompts into functional code, effectively bridging the gap between creative ideation and technical implementation, fostering agile development cycles . This indicates a move towards more natural language interfaces for content creation, streamlining the input process for designers .

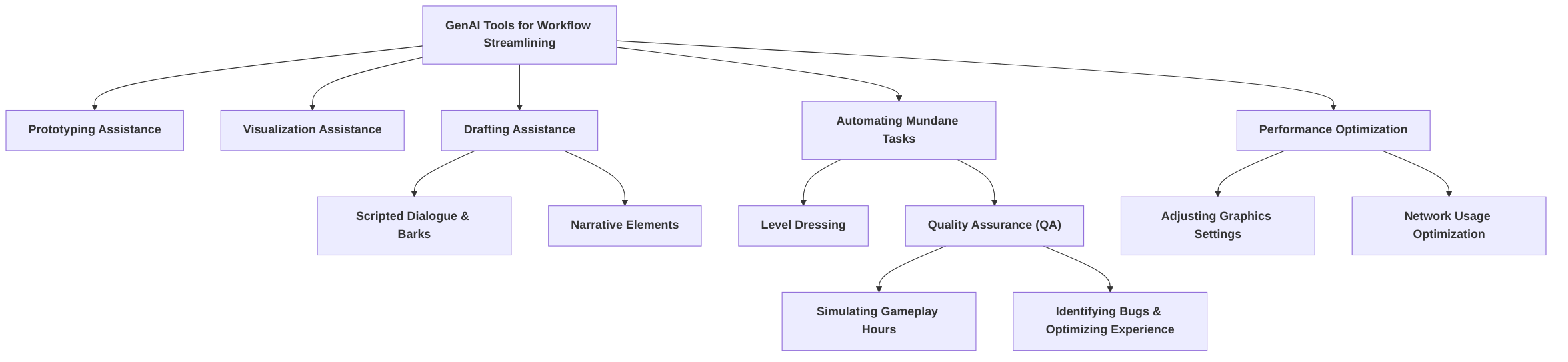

Beyond content generation, GenAI tools streamline the entire development workflow by assisting in prototyping, visualizing, and drafting various aspects of game content, accelerating the iteration process . This includes automating "mundane and time-consuming tasks" like level dressing, enabling human designers to concentrate on refining gameplay . Furthermore, AI-powered tools significantly enhance quality assurance by simulating thousands of gameplay hours for testing, efficiently identifying bugs and optimizing player experience, a stark contrast to the resource-intensive nature of manual testing . Ubisoft's "Commit Assistant," which utilizes static analysis and pattern recognition to predict bugs, exemplifies this application . AI also contributes to performance optimization by automatically adjusting graphics settings and network usage, utilizing deep learning for image processing and recurrent neural networks (RNNs) for time-series data handling to improve frame rates .
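
The idea of simulated playthroughs for quality assurance can be illustrated with a toy example. The sketch below is an assumption-laden simplification: it uses agents that move at random rather than a learned policy, and the grid levels, the `playthrough` and `completion_rate` functions, and the step budget are all hypothetical. It only shows how many automated runs can surface a level that cannot be completed.

```python
import random
from typing import List

MOVES = [(0, 1), (0, -1), (1, 0), (-1, 0)]  # right, left, down, up


def playthrough(level: List[str], rng: random.Random, max_steps: int = 200) -> bool:
    """Return True if a randomly acting agent reaches 'E' from 'S' within max_steps."""
    rows, cols = len(level), len(level[0])
    r, c = next(
        (i, j) for i, row in enumerate(level) for j, ch in enumerate(row) if ch == "S"
    )
    for _ in range(max_steps):
        if level[r][c] == "E":
            return True
        dr, dc = rng.choice(MOVES)
        nr, nc = r + dr, c + dc
        # Moves into walls ('#') or off the map leave the agent where it is.
        if 0 <= nr < rows and 0 <= nc < cols and level[nr][nc] != "#":
            r, c = nr, nc
    return level[r][c] == "E"


def completion_rate(level: List[str], runs: int = 100, seed: int = 0) -> float:
    """Fraction of simulated runs that finish the level."""
    rng = random.Random(seed)
    return sum(playthrough(level, rng) for _ in range(runs)) / runs


open_level = ["S..",
              "...",
              "..E"]
blocked_level = ["S.#",   # the exit is walled off: a level-design bug
                 "..#",
                 "##E"]
```

A completion rate of zero across many runs, as `blocked_level` produces, flags the level for human review. Production tools would replace the random agent with trained or scripted agents and track richer signals such as crashes, stuck states, or difficulty spikes.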

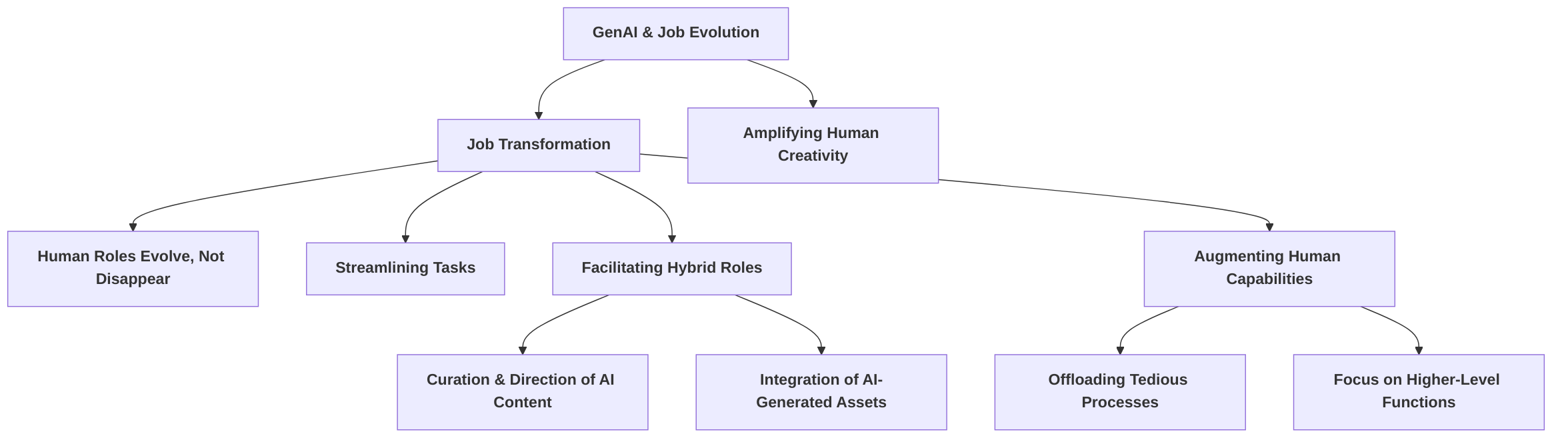

In summary, the efficiency gains from GenAI are multifaceted. It automates repetitive and time-consuming tasks, thereby reducing manual labor and development costs, particularly benefiting indie developers . It enables rapid prototyping and iteration of game ideas and narrative concepts by quickly generating diverse content options . Moreover, it fosters hybrid roles, where developers can act as curators and refine AI-generated outputs, allowing them to focus on higher-level creative directives and critical polishing . The various approaches, from automating asset production to comprehensive PCG for world and narrative generation, highlight the versatility of GenAI in streamlining game development workflows, paving the way for more agile and innovative creative processes.

4. Creative Dilemmas Posed by Generative AI

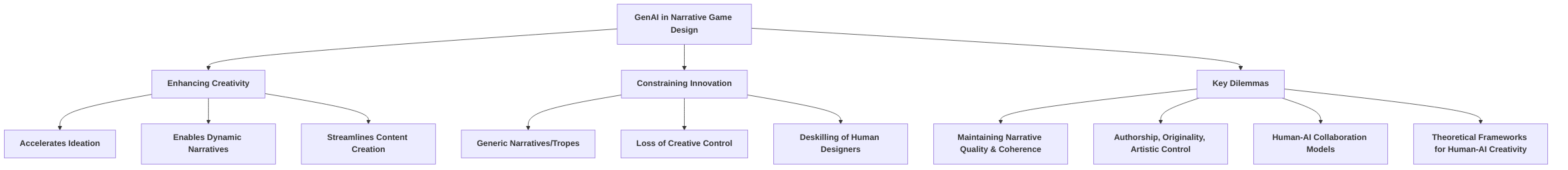

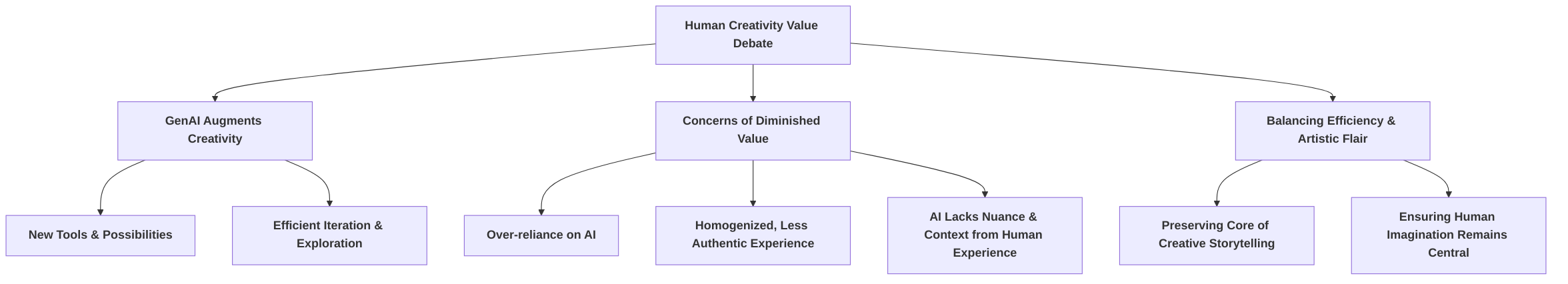

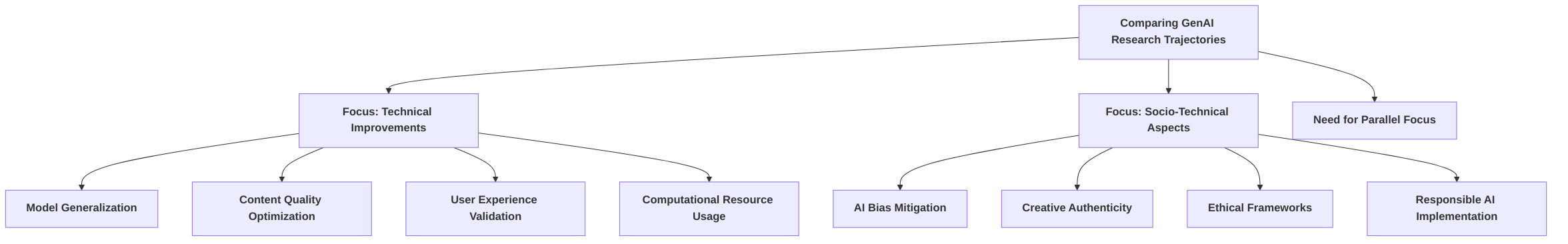

The integration of Generative AI (GenAI) into narrative game design presents a complex array of creative dilemmas, compelling a critical examination of its role in either enhancing or constraining innovation. This section directly addresses the central question of "Enhancing Creativity or Constraining Innovation?" by comparing and contrasting arguments for AI as a creative accelerant versus a creativity dampener . We will delve into specific instances where AI might lead to generic narratives or character tropes, counterbalancing these with examples where AI has genuinely fostered unique narrative paths. A significant challenge explored is GenAI's impact on maintaining consistent narrative quality and coherence, particularly with dynamically generated content. This includes potential pitfalls such as plot holes or inconsistent character motivations, alongside strategies for human oversight and intervention to mitigate these issues. Furthermore, the discussion will extend to the broader implications for creative practices in the industry, including the potential for deskilling among human designers. Finally, this section will propose theoretical frameworks for understanding the evolving collaboration between human designers and AI, prompting a comparison of different philosophical and legal viewpoints on originality in AI-generated content, and drawing insights from art theory and cognitive psychology regarding human creativity .

The first subsection, "Authorship, Originality, and Artistic Control," examines the foundational challenges GenAI poses to established notions of creative ownership and novelty. It critically analyzes whether AI-generated narratives and assets achieve genuine originality, exploring concerns that AI predominantly remixes existing data, potentially leading to "derivative, homogenized" outputs lacking unique artistic identity . This subsection also delves into philosophical and legal perspectives on originality in AI-generated content, considering doctrines like "sweat of the brow" versus "modicum of creativity" and highlighting the ambiguity surrounding copyright ownership . It concludes by proposing multi-faceted approaches to evaluating AI-generated originality, including transformative use analysis and hybrid authorship models, to navigate these complex creative and legal landscapes.

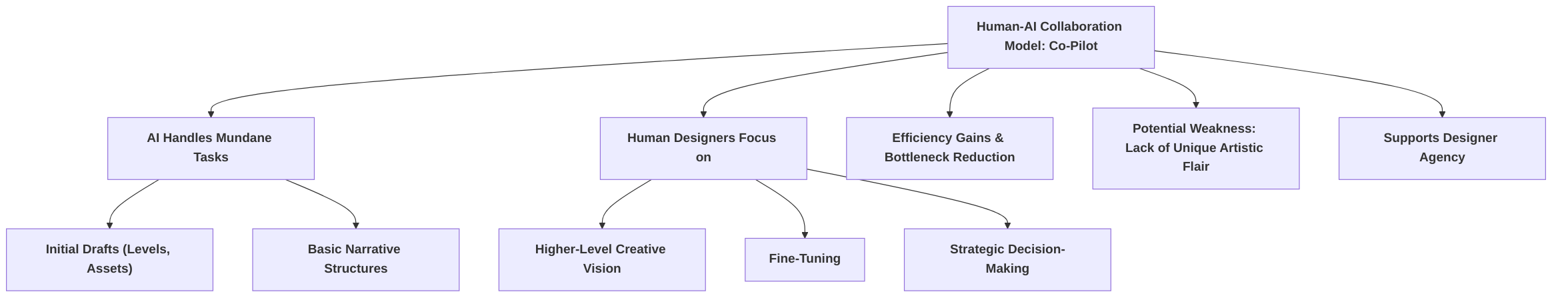

Building upon the challenges of authorship, the second subsection, "Human-AI Collaboration and Designer Agency," shifts focus to the evolving role of human designers within AI-assisted workflows. It outlines various models of human-AI collaboration, from AI as a "creative co-pilot" handling mundane tasks to a more advanced role as a "creative companion" that actively contributes to ideation and problem-solving . This part compares the strengths and weaknesses of these models, analyzing their impact on designer agency and creative satisfaction. It explores how human designers can maintain artistic vision and control, particularly through curation and refinement of AI outputs, rather than direct creation . The subsection further discusses strategies for mitigating challenges to designer agency, such as integrating AI tools seamlessly into existing pipelines and fostering transparent, human-oversight-driven workflows . This integrated approach ensures that AI augments, rather than diminishes, human creativity, preserving the unique artistic vision essential for engaging game experiences .

4.1 Authorship, Originality, and Artistic Control

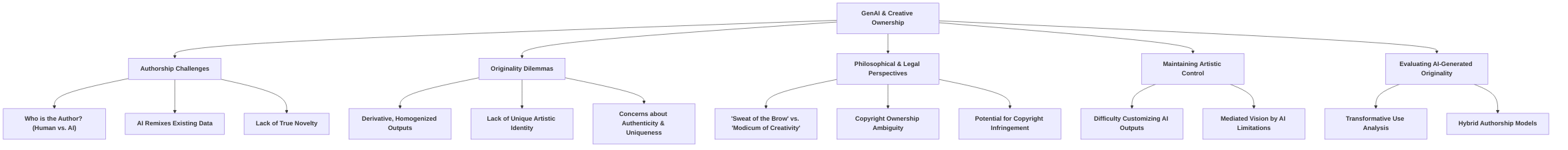

The emergence of Generative AI in narrative game design introduces profound creative dilemmas, fundamentally challenging established notions of authorship, originality, and artistic control. As AI systems become capable of generating intricate narrative elements and game assets, the traditional boundaries of human creative input and automated output begin to blur, raising complex questions about who, or what, is the true author.

A central dilemma revolves around whether AI-generated narratives and assets achieve genuine originality. Critics argue that AI tools, by their very nature, primarily remix and synthesize existing data from their vast training sets rather than invent truly novel content. On this view, AI output tends toward a "derivative, homogenized look," making it difficult to achieve a unique visual or narrative identity because the AI merely recombines existing patterns. In one study, over 60% of participants agreed that generative AI might diminish the originality of game design, expressing apprehension that AI-generated content might be overly reliant on its training data and therefore lack authenticity and uniqueness. This contrasts with the human capacity for intentionality and expressive depth, which AI-generated outputs often struggle to replicate: they frequently lack the nuance, emotional tone, and stylistic coherence characteristic of human-made work. A recurring concern in the community is that excessive reliance on AI could result in a "homogenized, less authentic experience" due to AI's inability to fully grasp the subtlety and context derived from human experience.

From a philosophical standpoint, the debate centers on the definition of "originality" itself when applied to AI-generated content. If originality implies a unique, uncopied creation stemming from a conscious creative intent, then AI, operating on algorithms and data, complicates this definition. Legal viewpoints on originality for AI-generated content often grapple with the "sweat of the brow" doctrine versus the "modicum of creativity" standard. The "sweat of the brow" doctrine, historically applied in some jurisdictions, grants copyright based on the labor and effort expended, which could theoretically extend to the developers of the AI or the users who craft prompts. U.S. copyright law rejected that doctrine in Feist Publications v. Rural Telephone Service (1991) and instead requires a "modicum of creativity," a minimal degree of creative expression, which remains contentious for purely AI-generated works. The ambiguity regarding the ownership of AI-generated content is a significant concern for designers, with some hesitant to integrate AI-generated outputs directly into final products due to potential legal risks related to copyright infringement.

The lack of clear legal frameworks for AI authorship, as further explored in Chapter 5.1, directly exacerbates challenges in maintaining artistic control by human designers. Without established legal precedents for who owns the copyright to AI-generated content, developers face considerable uncertainty, particularly if the AI inadvertently "regurgitates" copyrighted material from its training data . This potential for copyright infringement creates a complex legal minefield for developers and can constrain artistic freedom by forcing designers to be overly cautious about integrating AI outputs . Moreover, the technical challenges in creatively customizing AI-generated assets also limit artistic control, as designers may struggle to precisely align AI outputs with their unique artistic vision . This difficulty in fine-tuning AI-generated content further blurs the lines of creative agency, as the designer's vision may be mediated and potentially compromised by the AI's inherent limitations or biases.

Evaluating the "originality" of AI-generated content in narrative games necessitates a multi-faceted approach. Several methods and considerations can be proposed:

- Transformative Use Analysis: This involves assessing whether the AI-generated content transforms the source material it was trained on sufficiently to be considered a new work. This assessment could involve human review panels or advanced AI-driven comparison tools to quantify the degree of alteration and departure from known training data.

- Intentionality and Prompt Complexity: While AI lacks consciousness, the originality could be partly attributed to the human prompt engineer's ingenuity and the complexity of their prompts. The more detailed and specific the prompt, the more credit could be given to human input, especially if the prompt guides the AI toward a genuinely novel outcome.

- Human Curation and Iteration: Originality might be evaluated based on the extent of human curation, refinement, and iterative design applied to AI-generated drafts. Content that undergoes significant human modification and selection would demonstrate more human authorship than content used "as-is."

- Statistical Novelty Metrics: Quantitative metrics could measure the statistical novelty of AI-generated content relative to existing datasets. Such metrics might rely on algorithms that detect patterns, structures, or semantic combinations not present in the training data or other existing works. For example, a metric could quantify the divergence of an AI-generated narrative structure from known narrative archetypes.

- Perceptual Originality Surveys: User studies could ask human participants to evaluate the originality of AI-generated content without knowing its origin. This subjective evaluation, while prone to bias, could offer insights into how "original" the content feels to an audience.

- Hybrid Authorship Models: This model recognizes that AI acts as a sophisticated tool rather than a sole author. Originality is attributed to the collaborative process between the human designer and the AI, emphasizing the human's role in guiding, selecting, and integrating AI outputs into a coherent artistic vision. This perspective frames AI as an amplifier of human creativity rather than a replacement.
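
As one concrete reading of the statistical novelty idea in the list above, the sketch below scores a candidate text by the share of its word n-grams that never occur in a reference corpus. This is a deliberately crude proxy offered under stated assumptions: `ngrams` and `novelty_score` are hypothetical names, tokenization is plain whitespace splitting, and surface-level n-gram overlap says nothing about plot structure or legal originality.

```python
from typing import Iterable, Set, Tuple


def ngrams(text: str, n: int) -> Set[Tuple[str, ...]]:
    """Set of lowercase word n-grams in the text (whitespace tokenization)."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def novelty_score(candidate: str, corpus: Iterable[str], n: int = 3) -> float:
    """Fraction of the candidate's n-grams that do not appear anywhere in the corpus.

    0.0 means every n-gram was already seen; 1.0 means none were.
    Returns 0.0 for candidates too short to contain any n-gram.
    """
    candidate_grams = ngrams(candidate, n)
    if not candidate_grams:
        return 0.0
    seen: Set[Tuple[str, ...]] = set()
    for document in corpus:
        seen |= ngrams(document, n)
    return len(candidate_grams - seen) / len(candidate_grams)


# Hypothetical reference corpus and candidates.
corpus = ["the hero saves the village from the dragon"]
copied = novelty_score("the hero saves the village from the dragon", corpus)
partial = novelty_score("the hero saves the kingdom", corpus)
fresh = novelty_score("a clockmaker trades her memories for time", corpus)
```

In this toy corpus the verbatim copy scores 0.0, the partially overlapping line scores one third, and the unrelated line scores 1.0. A practical metric would need a far larger reference set and a representation closer to narrative structure, and would best be read alongside the human-centered methods listed above rather than in place of them.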

In conclusion, as generative AI becomes more integrated into narrative game design, addressing the creative dilemmas surrounding authorship, originality, and artistic control becomes paramount. Establishing clearer legal frameworks, developing robust methods for evaluating originality, and fostering a collaborative paradigm between human designers and AI are crucial steps toward navigating these complex ethical and creative landscapes.

4.2 Human-AI Collaboration and Designer Agency

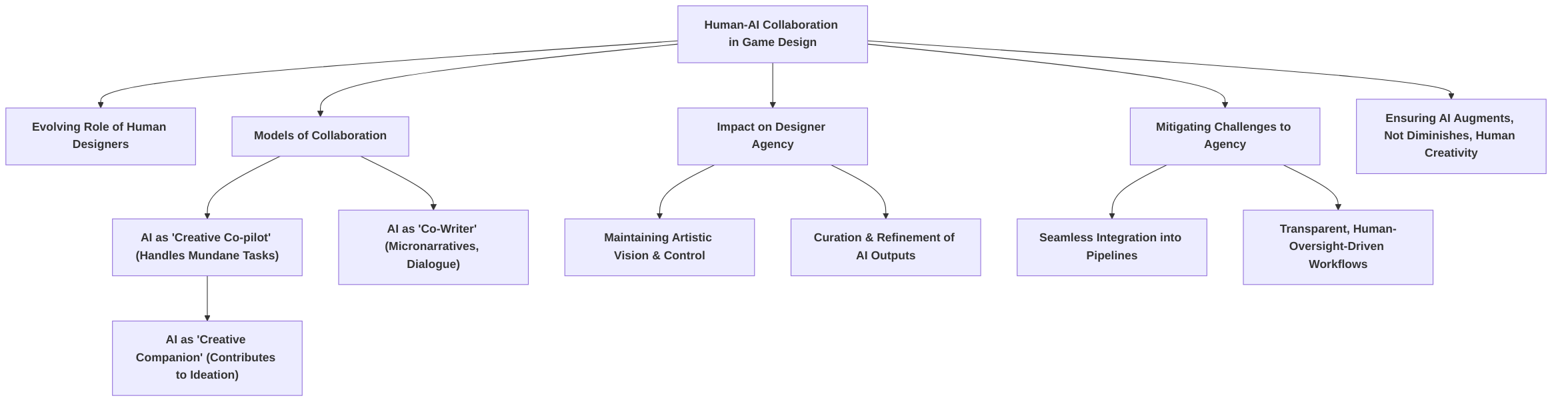

The integration of generative artificial intelligence (AI) into narrative game design environments has instigated a significant re-evaluation of the narrative designer's evolving role. This paradigm shift necessitates a detailed analysis of how human designers maintain their artistic vision and control within an AI-assisted workflow. The literature presents various models of human-AI collaboration, ranging from AI functioning as a mere suggestion engine to its more advanced role as a co-creator, each with distinct implications for designer agency and creative satisfaction.

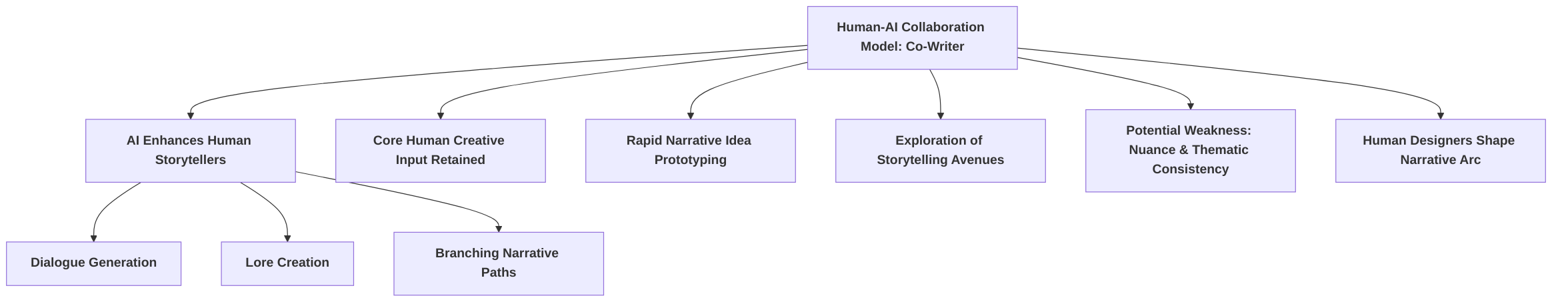

One prevalent model positions AI as a "creative co-pilot" or "level design assistant," primarily tasked with handling procedural or mundane elements of game development . In this model, AI excels at automating "grunt work," thereby liberating human designers to concentrate on the overarching creative vision and the meticulous refinement of the user experience . For narrative applications, AI can serve as a "co-writer" by generating micronarratives or expanding the dialogue for minor in-game characters, while human writers retain responsibility for crafting high-concept storylines and maintaining narrative coherence . This augmentation approach, where AI acts as a tool to "assist developers" in "prototyping, visualizing, and drafting," allows human creativity to be amplified rather than supplanted . The community's acceptance of AI integration is largely contingent upon its transparent application as a foundational element, not a definitive replacement for human input, thereby underscoring the critical need for sustained human oversight and agency .

A more advanced collaborative model envisions AI as a "creative companion" or co-creative element that actively supports various stages of the design pipeline . This perspective highlights AI's capacity to offer unexpected solutions and novel perspectives, thereby amplifying human creativity . The emergence of "hybrid roles" where designers function as both developers and AI asset curators exemplifies this collaborative dynamic, shifting human agency towards directing and refining AI-generated content rather than originating it from scratch . This redefines the traditional designer's role, emphasizing curation and iteration over pure creation. Designers express a strong desire for the deeper integration of generative AI capabilities directly within established game engines such as Unity or Unreal Engine, advocating for AI as an embedded feature rather than a standalone tool . This seamless integration would facilitate access to AI-driven functionalities, streamlining workflows and fostering a culture of experimentation and knowledge sharing .

Comparatively, the "creative co-pilot" model, exemplified by AI handling procedural generation or micronarratives, prioritizes efficiency and burden reduction. Its strength lies in automating repetitive tasks, allowing human designers to dedicate more time to core creative challenges. However, a potential weakness is the risk of designers becoming overly reliant on AI for basic content, potentially diminishing their foundational creative skills if not carefully managed. Designer agency remains relatively high in this model, as the human retains ultimate control over high-level creative decisions. In contrast, the "creative companion" or co-creative model, where AI offers unexpected solutions and prompts new perspectives, emphasizes ideation and innovation. This model's strength is its ability to push creative boundaries and introduce novel elements that human designers might not conceive independently. Its weakness, however, lies in the potential for blurred authorship and the challenge of maintaining a cohesive artistic vision if AI contributions are not meticulously curated. The impact on designer agency in this model is a shift from direct creation to curation and refinement, potentially altering the sense of traditional authorship.

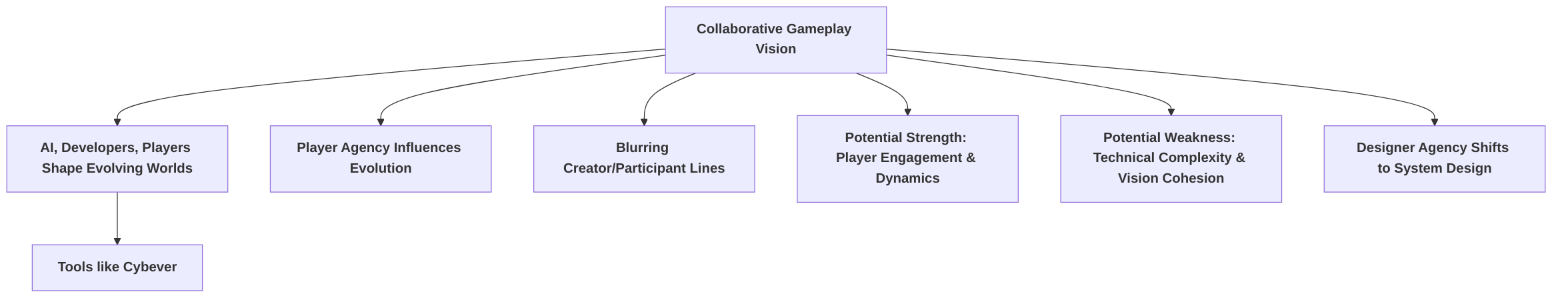

A more collaborative effort between AI, developers, and players is also envisioned, where AI tools empower both creators and consumers to dynamically shape game worlds and experiences . Tools facilitating rapid prototyping and real-time modification, such as Cybever, blur the traditional lines between creator and participant by allowing player input to directly influence design, suggesting a future where games evolve adaptively based on player agency .

To mitigate challenges to designer agency, specific collaborative models and workflows should be adopted. The best practices discussed in Chapter 6.2 point toward hybrid models that judiciously blend AI capabilities with human oversight, thereby achieving a balance between AI-driven efficiency and human creative input. This involves clearly defining the scope of AI's involvement, ensuring that AI-generated content serves as a starting point for human refinement rather than a final product. Workflows should integrate AI tools directly into existing game development pipelines, facilitating seamless interaction and reducing cognitive load for designers. This includes establishing clear protocols for version control, content management, and attribution for AI-assisted contributions to address concerns regarding authorship and shared conventions. Furthermore, fostering a collaborative environment that promotes experimentation and knowledge sharing among designers regarding AI tool usage is crucial. This proactive approach helps designers develop the necessary skills to effectively direct and curate AI outputs, ensuring that the human remains the primary driver of creative intent.

The implications of these collaborative models on the sense of authorship and creative satisfaction for human designers are multifaceted. When AI functions as a "creative co-pilot" for "procedural grunt work," designers can experience enhanced satisfaction due to reduced tedious tasks, allowing them to focus on high-impact creative decisions . This augmentation can lead to a greater sense of efficacy and productivity. However, as AI transitions towards a "co-creative" role, offering novel solutions, the sense of individual authorship may become more distributed. The emergence of "hybrid roles" where designers curate AI-generated content suggests a shift in the definition of authorship, from sole creator to a director or editor of AI outputs . While this might initially challenge traditional notions of creative ownership, it also opens avenues for new forms of creative expression and collaboration. Maintaining transparency in AI's role and ensuring human oversight are paramount to preserving designers' sense of agency and creative control, ultimately contributing to sustained creative satisfaction . Designers' positive reception and willingness to explore AI tools suggest a promising future, provided clear guidance on integration and collaboration protocols are established . The ultimate goal is to leverage AI to augment, rather than diminish, the human element in narrative game design, ensuring that engaging games continue to marry AI-driven efficiencies with the unique artistic vision of human developers .

5. Ethical Dilemmas of Generative AI

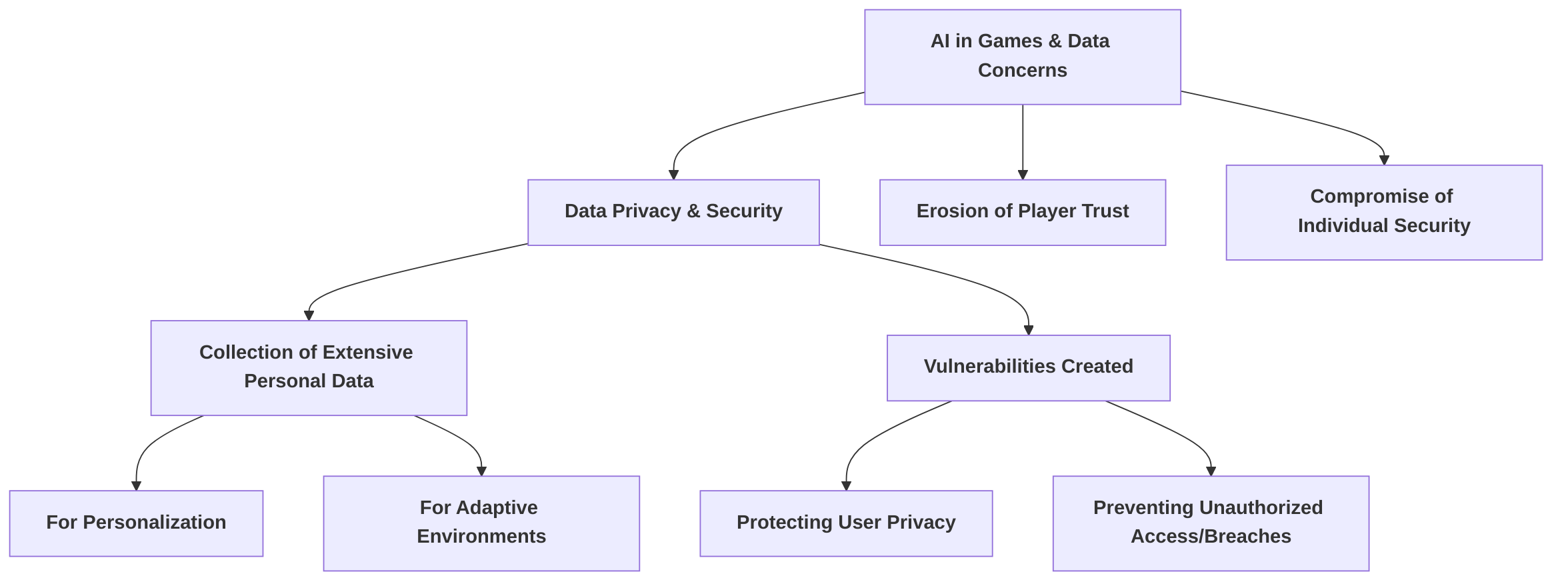

The integration of generative artificial intelligence (AI) into narrative game design presents a complex array of ethical dilemmas, necessitating a systematic categorization and comprehensive analysis of their nature, impact, and potential mitigation strategies. These challenges extend across intellectual property rights, accountability for biased content, player data privacy, and broader societal influences, affecting game developers, players, and the industry at large . This section aims to provide a general overview of these multifaceted concerns, establishing a theoretical framework for subsequent detailed discussions.

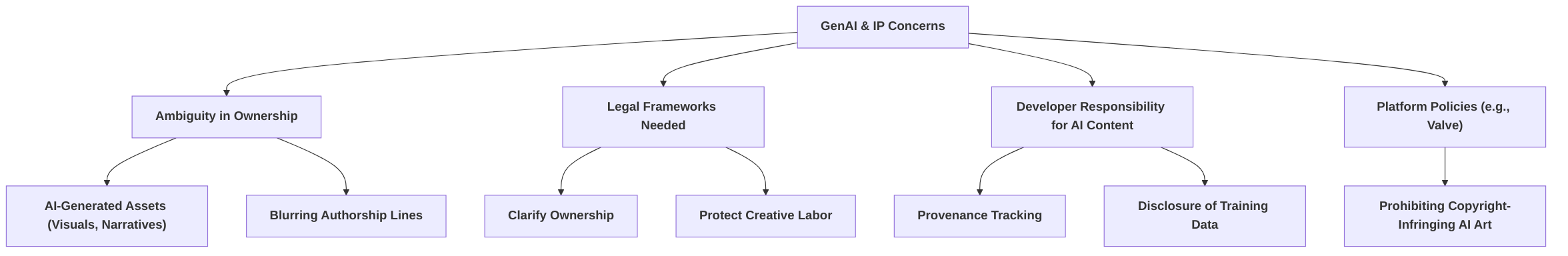

The first critical area revolves around intellectual property (IP) and authorship. The advent of AI-generated content raises fundamental questions about copyright ownership, particularly when AI models are trained on vast datasets that may include copyrighted material without explicit consent . This legal ambiguity poses significant risks for developers, who face the burden of proving originality and non-infringement, potentially leading to player backlash and platform prohibitions . The evolving legal landscape struggles to define "authorship" in an AI-driven creative process, challenging traditional IP laws predicated on human creativity and intent.

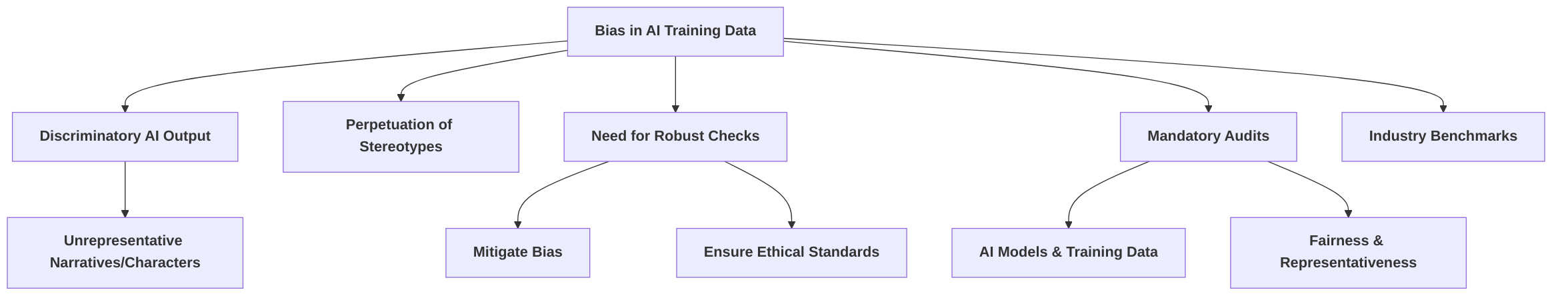

Secondly, concerns regarding bias and inclusivity in AI-generated content are paramount. AI systems, by reflecting their training data, risk perpetuating harmful stereotypes and discriminatory narratives, thereby impacting player experience and contributing to social controversies . This necessitates robust methods for data curation, bias detection, and mitigation to ensure AI-generated narratives align with ethical standards of fairness and diversity . An interdisciplinary approach, drawing from sociological concepts of representation and critical race theory, is crucial for understanding and addressing these complex biases.

The player experience and psychological impact of generative AI also constitute a significant ethical domain. While AI can enhance personalization and immersion by adapting narratives to player behaviors and preferences , it simultaneously introduces risks of emotional manipulation and the "uncanny valley" effect . The authenticity of AI-generated content and its impact on player agency are critical considerations, as player perception of AI involvement can significantly influence trust and game reception . Psychological theories such as Self-Determination Theory, Cognitive Load Theory, Theory of Presence, and Attribution Theory offer valuable frameworks for analyzing these complex dynamics.

Furthermore, the integration of generative AI sparks considerable debate about job displacement and the future of human creativity within the gaming industry. Concerns exist that AI could render traditional roles redundant, particularly in repetitive asset creation or early-stage iteration . Conversely, some argue for job transformation, where human roles evolve towards higher-level functions like curation and strategic integration of AI-generated content . The core ethical question revolves around maintaining the intrinsic value of human artistic contributions in an increasingly AI-assisted landscape .

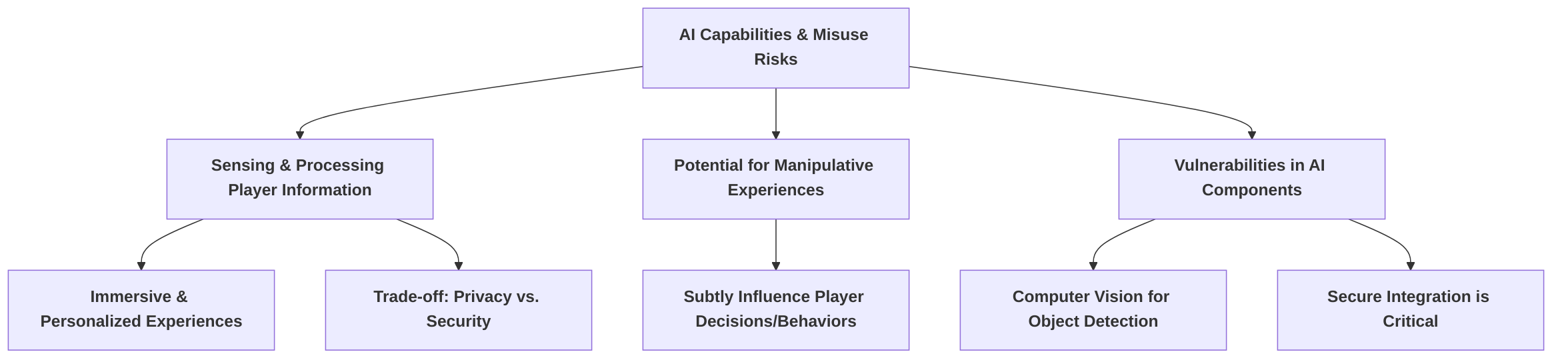

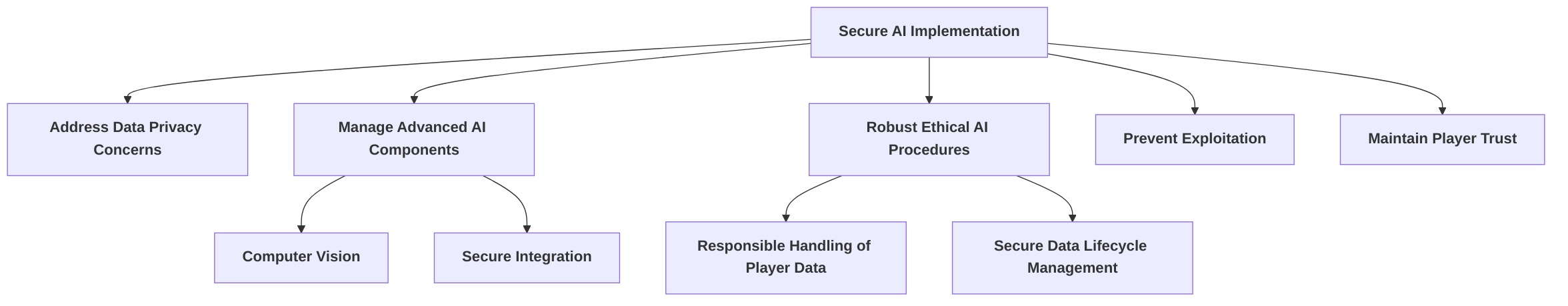

Finally, the security and potential misuse of AI in gaming present distinct challenges. The extensive collection of player data for personalization purposes necessitates stringent security measures to prevent breaches and misuse, thereby preserving user privacy and trust . Beyond data privacy, the adaptive and "sensing" capabilities of AI could, if mishandled, lead to manipulative experiences or open avenues for exploits within game systems . This underscores the need for robust ethical AI procedures and comprehensive frameworks to guide the design, deployment, and monitoring of AI systems, ensuring they enhance the gaming experience without compromising security or player well-being.

5.1 Intellectual Property and Authorship

The proliferation of generative AI in narrative game design has introduced profound and multifaceted challenges concerning intellectual property (IP) and authorship, which existing legal frameworks are ill-equipped to address adequately. A significant concern revolves around the ownership of AI-generated content, prompting questions from designers about the commercial viability and legality of integrating assets created with generative tools into their projects . This ambiguity is particularly pronounced when AI models are trained on extensive datasets that may include copyrighted or unattributed material, raising considerable legal risks related to stylistic overlap or the unintentional replication of existing works .

The absence of clear documentation regarding training data sources or explicit licensing terms exacerbates this hesitation among developers, leading many to restrict AI-generated outputs to prototyping or internal ideation rather than direct integration into final commercial products. The concerns are not merely theoretical; game distribution platform operators like Valve have already implemented policies that prohibit games with AI-generated assets found to infringe on existing copyrights, effectively placing the substantial burden of verifying the rights to all training data and outputs directly onto the developers. This policy creates a complex legal and moral quagmire, especially for independent developers who must navigate the intricate issues of ownership and the legality of AI-generated content without clear precedents.

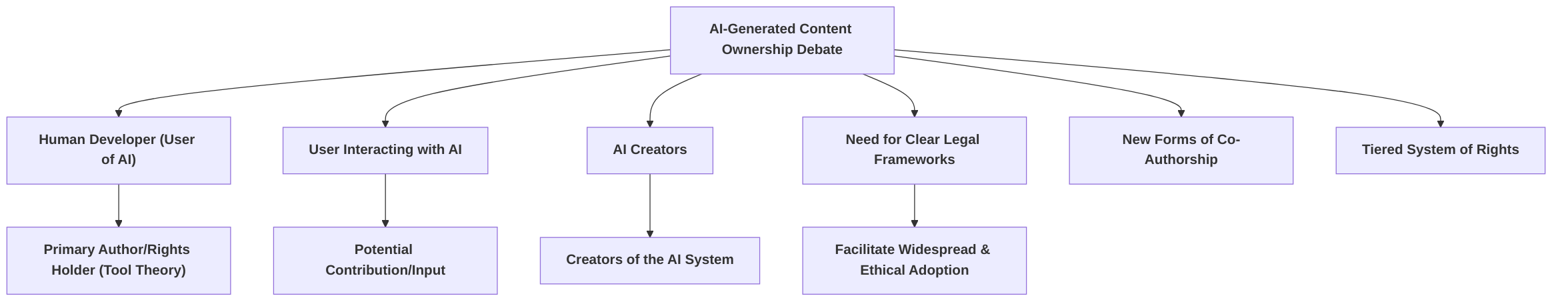

The central debate concerning AI-generated content ownership is which of several stakeholders holds the rights: the human developer who employs the AI, the user interacting with the AI, or the creators of the AI itself. This necessitates the establishment of robust and clear legal frameworks and ownership rights to facilitate the widespread and ethically sound adoption of generative AI within the gaming industry. The legal landscape struggles to define "originality" and "authorship" when a significant portion of the creative process is automated. Traditional IP laws are predicated on human creativity and intent, posing a fundamental challenge when confronted with AI outputs that lack human consciousness or direct creative intent. This philosophical conundrum requires a re-evaluation of current legal doctrines to accommodate the unique nature of AI-generated works.

Philosophical and legal viewpoints on originality in AI-generated content diverge significantly. One perspective posits that the human developer, by exercising creative control over the AI's prompts, parameters, and ultimate selection of generated content, remains the primary author and therefore the rights holder. This perspective aligns with the "tool theory," where the AI is merely an advanced instrument, akin to a brush or a camera, used by a human creator. Another viewpoint suggests that if the AI autonomously generates novel content without specific human input beyond the initial programming, the AI itself, or its developers, should be considered the "author." This challenges the traditional notion of authorship entirely, as legal personhood is typically a prerequisite for holding intellectual property rights. A third, more nuanced perspective, considers a co-authorship model, where both the human and the AI system contribute to the final product, leading to a shared ownership or a tiered system of rights. This approach acknowledges the distinct contributions of both parties and might involve intricate agreements on revenue sharing and usage rights.

The lack of established legal frameworks for AI authorship directly impedes human designers' ability to maintain artistic control, as discussed in Section 4.1. When the legal status of AI-generated assets is ambiguous, designers face uncertainty regarding their ability to freely use, modify, or license these creations without fear of future legal challenges. This uncertainty can stifle creativity and innovation, as designers may opt for traditional, legally clear methods rather than leveraging the full potential of generative AI. The burden of proving originality and non-infringement for AI-generated content often falls on the human developer, who may not have full transparency into the AI's training data or algorithmic processes. This creates a disincentive for integrating AI tools deeply into the creative workflow, thereby constraining artistic expression rather than enhancing it. Moreover, the fear of unintentionally reproducing copyrighted material or infringing on existing designs due to the AI's training data can lead to a more conservative design approach, limiting the experimental and transformative potential that AI offers.

In conclusion, the evolving landscape of generative AI in narrative game design necessitates a comprehensive re-evaluation of intellectual property and authorship laws. Key questions that emerge include: Who owns the copyright to a narrative generated by an AI? What constitutes "fair use" when AI models are trained on copyrighted material? How can attribution be equitably assigned to both human and AI contributions? And what legal recourse do human artists have when their style or specific works are used as training data without consent or compensation? Addressing these complex legal and ethical questions through clear legal frameworks, industry standards, and possibly new forms of licensing or attribution models is paramount for fostering an environment where generative AI can truly enhance, rather than constrain, creativity and innovation in game design.

5.2 Bias and Inclusivity in AI-Generated Content

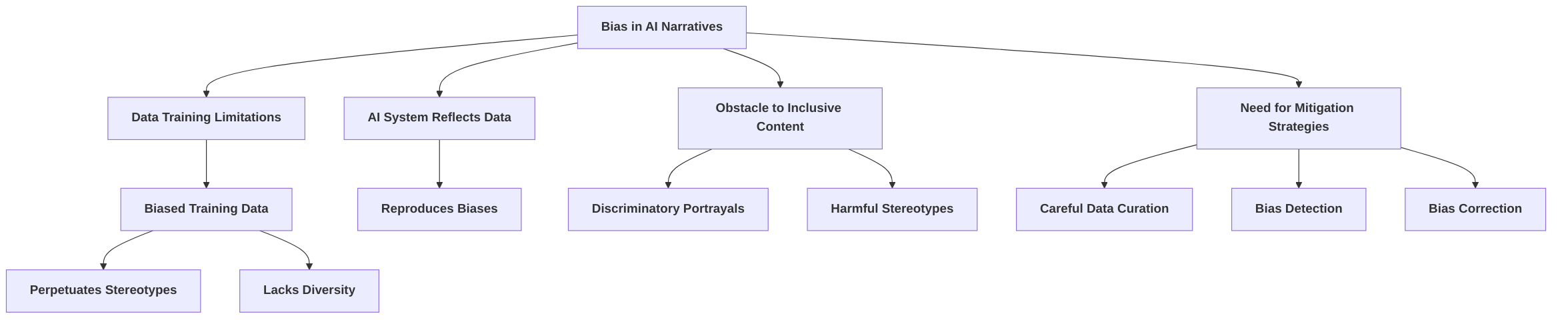

The proliferation of generative AI in narrative game design introduces significant ethical implications, particularly concerning bias and inclusivity. A primary concern is how biases inherent in training data can lead to problematic representations and perpetuate stereotypes within game narratives and character designs. AI systems, being reflections of their training data, risk generating content that is discriminatory or racially charged if that data contains historical biases or lacks diversity. This directly impacts player experience, potentially leading to unfair treatment of certain player types and contributing to broader social controversies. It is thus imperative for developers to be vigilant in ensuring AI-generated narratives avoid reinforcing harmful stereotypes and biases.

The core challenge lies in how biases are introduced into datasets and subsequently perpetuated in generated narratives. Generative AI systems are inherently limited by the quality and nature of their training data; if this data contains biased or stereotypical content, the AI is likely to reproduce these biases in its output, presenting a significant obstacle to creating inclusive and representative narratives or characters. For instance, a dataset reflecting historical underrepresentation of certain demographic groups might lead an AI to generate narratives where those groups are marginalized or portrayed stereotypically. This perpetuation is not merely a technical glitch but a reflection of systemic biases embedded in the real-world data used for training.

Mitigating these biases to promote more inclusive game experiences requires a multi-faceted approach. Developers must implement robust checks to ensure AI output aligns with ethical standards. This involves not only careful curation of training data but also the development of methods for identifying and correcting biases post-generation. While some papers broadly emphasize the need for caution and the importance of diversity, fairness, and inclusivity in AI-generated content, specific methodologies for bias detection and mitigation are largely underexplored in the current literature. The general consensus points to the necessity for AI algorithms to adhere to best practices to prevent the perpetuation of social disparities and ensure fair gameplay for all, avoiding harmful stereotypes.

A significant ethical dilemma arises when attributing accountability for biased or harmful content when an algorithm is the "creator." Unlike human artists, an AI does not possess intent, which complicates traditional notions of responsibility. This necessitates a re-evaluation of ethical frameworks to encompass algorithmic outputs. Strategies and frameworks for mitigating bias in AI models must ensure that ethical considerations are integrated throughout the entire narrative generation process, from data collection and model training to content deployment and post-release monitoring. This implies a shift from reactive problem-solving to proactive ethical design.

To effectively address bias in AI-generated narratives, novel interdisciplinary approaches are crucial. Drawing methods from sociology, critical race theory, or computational social science can offer robust frameworks for understanding and mitigating these biases. Sociological concepts of representation and power dynamics, for example, can inform the understanding of how AI-generated content might reflect or reinforce existing societal inequalities. By analyzing historical and contemporary power structures, these disciplines can provide insights into subtle forms of bias that might not be immediately apparent through purely technical analysis. For instance, critical race theory offers tools to deconstruct how race and power intersect in narratives, helping to identify and challenge embedded biases related to racial stereotypes or discriminatory portrayals.

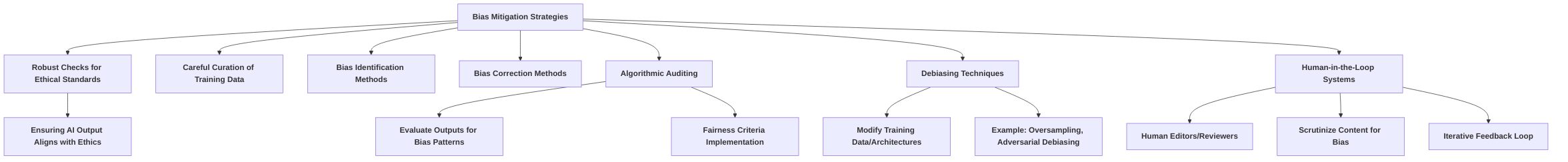

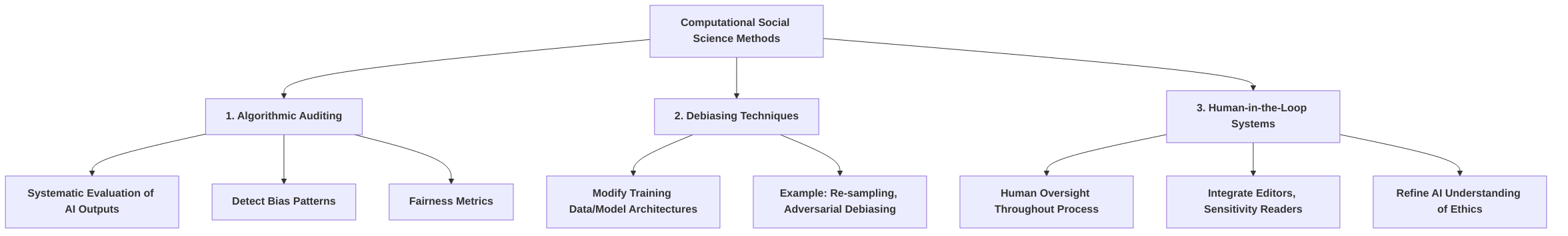

From a computational social science perspective, methods for bias detection and mitigation can involve:

- Algorithmic Auditing: Systematically evaluating AI outputs for patterns of bias, using metrics derived from fairness criteria in computational ethics. This could involve comparing generated narratives against diverse demographic profiles to ensure equitable representation.

- Debiasing Techniques: Applying technical methods to modify training data or model architectures to reduce bias. This might include re-sampling, re-weighting, or adversarial debiasing techniques. For example, if a dataset is found to underrepresent certain character traits for a specific gender, techniques like oversampling or data augmentation could be employed to balance the representation.

- Human-in-the-Loop Systems: Integrating human oversight throughout the AI-generated narrative pipeline. This involves human editors, sensitivity readers, or diverse review panels actively scrutinizing generated content for biases before its final integration into a game. This iterative feedback loop helps refine the AI's understanding of ethical and inclusive content.
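
As a rough illustration of the first two items, the sketch below audits how often each group appears in a batch of generated characters and then derives inverse-frequency weights, one simple form of re-weighting. It is a minimal sketch under stated assumptions, not a method taken from the surveyed literature: the record layout, the `gender` attribute, the target shares, and the 0.10 tolerance are all hypothetical choices made for the example.

```python
from collections import Counter

def representation_shares(records, attribute):
    """Return the share of records that carry each value of `attribute`."""
    counts = Counter(r[attribute] for r in records)
    total = sum(counts.values())
    return {value: n / total for value, n in counts.items()}

def audit_gaps(shares, target_shares, tolerance=0.10):
    """Flag groups whose observed share differs from the target by more than `tolerance`.

    The targets and the tolerance are assumptions; in practice they would be
    set by the review team, not by the code.
    """
    flagged = {}
    for group, target in target_shares.items():
        observed = shares.get(group, 0.0)
        if abs(observed - target) > tolerance:
            flagged[group] = observed - target
    return flagged

def inverse_frequency_weights(records, attribute):
    """Give each record a weight so that every group carries equal total weight."""
    counts = Counter(r[attribute] for r in records)
    n_groups = len(counts)
    total = len(records)
    return [total / (n_groups * counts[r[attribute]]) for r in records]

# Hypothetical batch of generated protagonists (illustrative data only).
characters = [
    {"role": "hero", "gender": "male"},
    {"role": "hero", "gender": "male"},
    {"role": "hero", "gender": "male"},
    {"role": "hero", "gender": "female"},
]

shares = representation_shares(characters, "gender")
flagged = audit_gaps(shares, {"male": 0.5, "female": 0.5})
weights = inverse_frequency_weights(characters, "gender")
```

A check of this kind only counts labels; it cannot judge how a character is portrayed, which is why the human review described in the third item remains necessary.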

The strengths of these interdisciplinary approaches lie in their comprehensive nature. Sociological and critical race theory perspectives provide the theoretical grounding for identifying and understanding complex, often subtle, forms of bias that may elude purely quantitative methods. Computational social science offers the methodological tools to implement these insights into practical detection and mitigation strategies.

However, these approaches are not without weaknesses in the context of narrative game design. Integrating sociological concepts requires domain expertise that many game developers or AI engineers may lack, necessitating cross-functional collaboration. The application of debiasing techniques can be technically complex and may sometimes inadvertently reduce the creative freedom or narrative coherence of AI-generated content if not carefully balanced. Furthermore, the very definition of "fairness" or "inclusivity" can be subjective and culturally dependent, posing challenges for universal ethical guidelines. What constitutes a harmful stereotype in one cultural context might be perceived differently in another.

In conclusion, while the potential for generative AI to introduce and perpetuate biases in game narratives is a recognized concern, the current literature often highlights the risk without detailing specific technical or procedural reasons for bias perpetuation or proposing robust, interdisciplinary mitigation strategies. Future research needs to deeply explore the technical mechanisms by which biases infiltrate datasets and narratives, alongside the development and validation of sophisticated bias detection and mitigation methods drawn from ethical AI research, computational social science, sociology, and critical race theory. The ultimate goal is to guide developers towards creating "safer and better experiences" by ensuring AI systems operate fairly and without bias, fostering truly inclusive and representative game worlds.

5.3 Player Experience and Psychological Impact

The integration of Generative AI (GenAI) into narrative game design presents a complex interplay of player experience and psychological impact, necessitating a nuanced ethical examination. GenAI's capacity to analyze player behaviors, emotions, and fatigue enables the tailoring of narratives and gameplay experiences, fostering a heightened sense of personalization and engagement. This personalization extends to dynamically adjusting difficulty levels, content, and gameplay mechanics to match individual player skills and preferences, thereby enhancing immersion and overall satisfaction. The development of realistic and emotionally resonant AI-driven characters, each with unique behavior patterns, further deepens gameplay and contributes to more believable interactions within the game world.
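
The dynamic difficulty adjustment mentioned above can be pictured with a small feedback loop. The following is a minimal sketch, assuming a single scalar difficulty in the range 0 to 1 and a rolling success rate as the only player signal; the class name, the 0.6 target success rate, the step size, and the window length are hypothetical and do not describe any particular game or system from the surveyed work.

```python
from collections import deque

class DifficultyAdjuster:
    """Nudge difficulty so the player's recent success rate approaches a target."""

    def __init__(self, target_success=0.6, step=0.1, window=5, difficulty=0.5):
        self.target_success = target_success  # assumed "comfortable" success rate
        self.step = step                      # how strongly each outcome moves difficulty
        self.outcomes = deque(maxlen=window)  # rolling window of recent results
        self.difficulty = difficulty          # 0.0 = easiest, 1.0 = hardest

    def record(self, success):
        """Store one encounter outcome and return the updated difficulty."""
        self.outcomes.append(1.0 if success else 0.0)
        rate = sum(self.outcomes) / len(self.outcomes)
        # Succeeding more often than the target raises difficulty; less often lowers it.
        self.difficulty += self.step * (rate - self.target_success)
        self.difficulty = min(1.0, max(0.0, self.difficulty))
        return self.difficulty

# Usage: five wins in a row push difficulty up, five losses pull it back down.
adjuster = DifficultyAdjuster()
after_wins = [adjuster.record(True) for _ in range(5)][-1]
after_losses = [adjuster.record(False) for _ in range(5)][-1]
```

Even a loop this simple adapts without telling the player, which is one concrete way the transparency and agency concerns raised in this section can arise.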

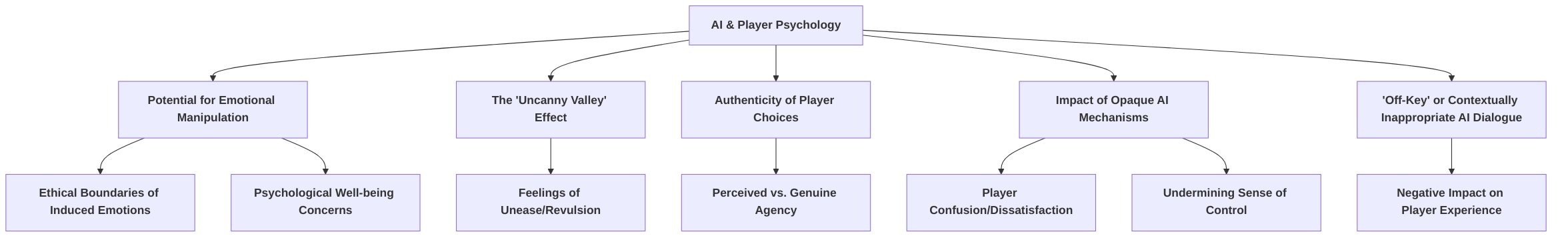

Despite these advancements, ethical considerations surrounding the psychological impact are paramount. One critical area involves the potential for emotional manipulation, particularly given AI's ability to elicit specific emotional responses in players. This raises questions about the ethical boundaries of artificially induced emotions and the psychological well-being of players interacting with systems designed to exploit or amplify their emotional states. While current literature acknowledges the enhancement of immersion and engagement through dynamic worlds and NPCs, many analyses do not examine in depth specific psychological or emotional impacts such as the "uncanny valley" effect, where AI-generated entities appear nearly human but possess subtle imperfections that evoke feelings of unease or revulsion.

Player agency, a cornerstone of engaging game design, also evolves significantly with GenAI. While AI can tailor narratives to individual choices, making game worlds feel more responsive, the authenticity of player choices and their perceived impact can be compromised if the underlying AI mechanisms are opaque or if the "personalization" feels less like true agency and more like sophisticated algorithmic prediction. A lack of transparency in AI decision-making can lead to player confusion or dissatisfaction, potentially undermining the sense of control and authenticity within the game experience. Furthermore, the potential for AI-generated dialogue to be "off-key" or contextually inappropriate without careful oversight can negatively impact player experience and immersion. This aligns with broader community reactions to AI-generated content, where even the suspicion of AI involvement in game art can lead to significant player backlash and damage a game's reputation, as exemplified by the case of Project Zomboid. Players exhibit sensitivity to AI-generated art, perceiving it as potentially lacking authenticity or a human creative touch.

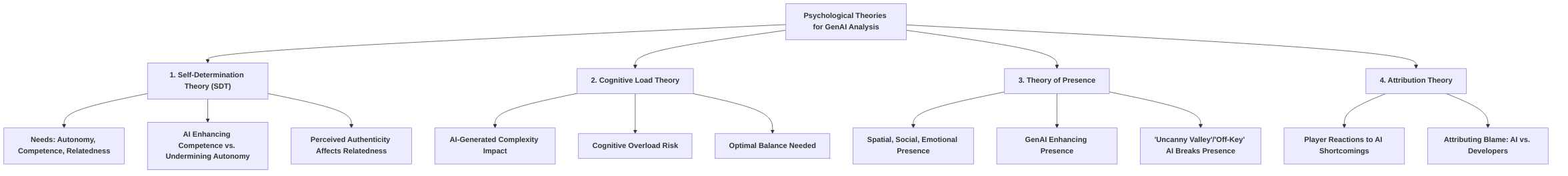

To better understand and mitigate these psychological impacts, it is imperative to move beyond merely discussing the effects and instead apply established psychological theories and frameworks. For instance, Self-Determination Theory (SDT), which posits that individuals are motivated by needs for autonomy, competence, and relatedness, provides a valuable lens. GenAI's adaptive systems could theoretically enhance competence by adjusting challenges to optimal levels, but they could simultaneously undermine autonomy if players perceive their choices as pre-determined or merely guided by algorithms rather than genuine self-expression. The perceived authenticity of AI-generated narratives and characters, as opposed to human-crafted content, directly impacts relatedness and engagement. If players feel that AI "merges" rather than truly creates, it can diminish the perceived value and authenticity of the interactive experience.