0. Digital Twin-Driven Predictive Maintenance in Smart Factories: Research Progress and Industrial Practices

1. Introduction

The current landscape of smart manufacturing is characterized by an escalating demand for operational efficiency, heightened productivity, and robust asset management. The paradigm of Industry 4.0, with its emphasis on interconnected systems and data-driven decision-making, necessitates a departure from traditional reactive or scheduled maintenance strategies. This evolution has spotlighted predictive maintenance (PM) as a critical need in modern industrial settings . Predictive maintenance is a proactive approach leveraging data analytics, machine learning, and continuous condition monitoring to identify potential equipment failures before they manifest, thereby mitigating unplanned downtime and extending asset lifespan .

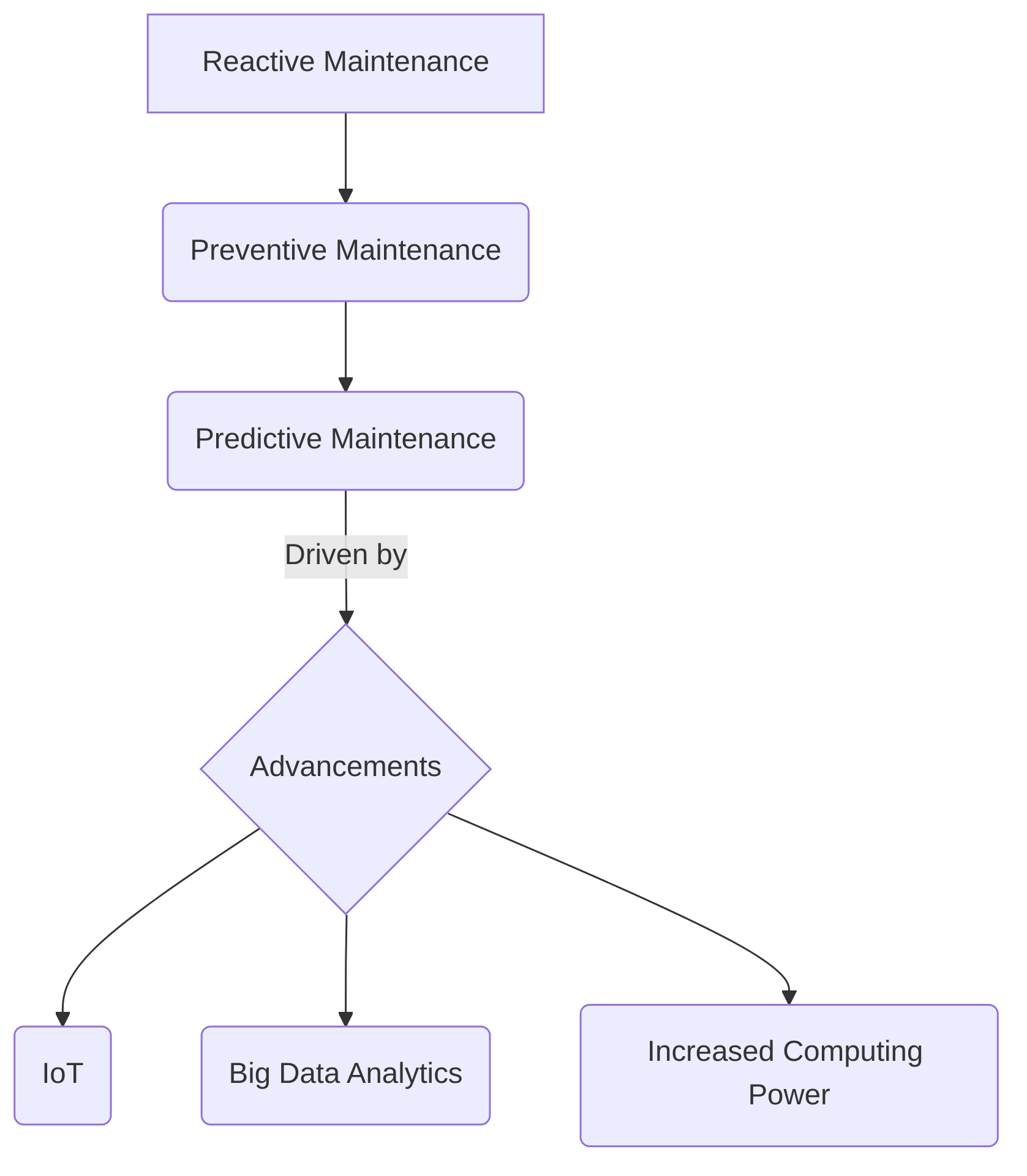

The progression of maintenance strategies from reactive to preventive and now to predictive has been propelled by advances in the Internet of Things (IoT), Big Data analytics, and computing power, which together make real-time monitoring and analysis practical.

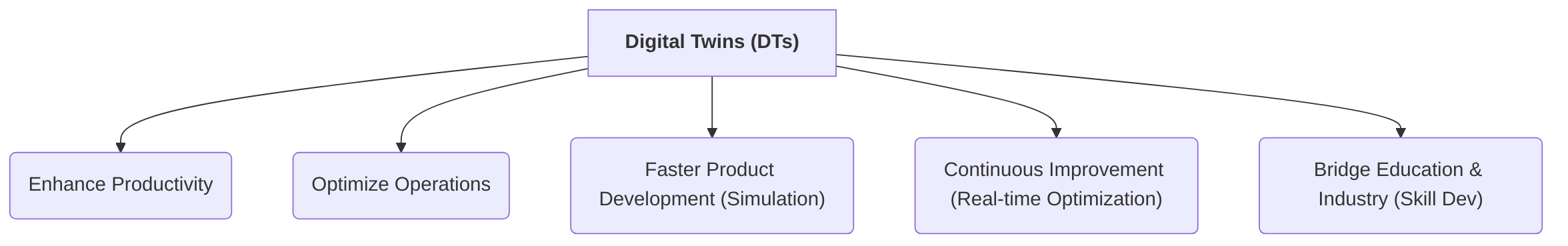

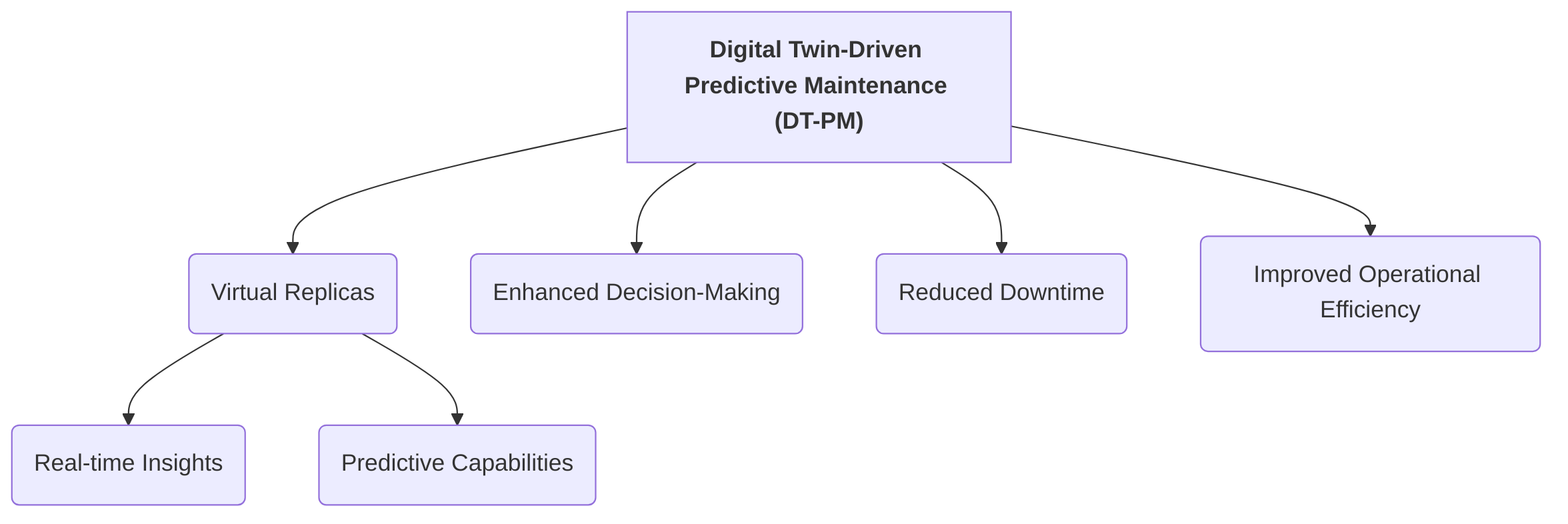

Within this transformative environment, Digital Twins (DTs) emerge as a foundational technology for achieving advanced predictive maintenance . A Digital Twin is conceptually defined as a virtual replica of a physical asset, process, or system that integrates real-time data and advanced analytics to provide profound operational insights . This dynamic digital counterpart faithfully reproduces reality, enabling close performance monitoring and the identification of deviations from expected behavior . The "Digital Twin on Smart Manufacturing" project further underscores this by emphasizing the integration of advanced DT technologies into manufacturing education and practice to prepare a new generation of technicians, fostering innovation and optimization . The significance of DTs in smart manufacturing lies in their capacity to enhance productivity, optimize operations, facilitate faster product development through simulation, and drive continuous improvement via real-time process optimization .

Different perspectives on the role of DTs in achieving smart factory goals highlight their multifaceted contributions. While some papers define DTs as dynamic digital replicas for real-time monitoring and forecasting of equipment health , others emphasize their role in bridging education and industry by equipping future technicians with smart manufacturing skills . Fundamentally, DTs are presented as a transformative technology in smart manufacturing, providing deep operational insights through the integration of real-time data and advanced analytics . The common motivation for adopting DT-driven predictive maintenance stems from its ability to address inherent challenges in traditional PM implementation, such as unifying disparate data streams, ensuring predictive accuracy, and managing multi-site operations by enhancing system visibility .

The necessity and initial framing of DT-PM in smart factories are collectively established by contributions from papers focusing on foundational cyber-physical integration. For instance, the extensive survey of Digital Twin technologies across industrial, smart city, and healthcare sectors highlights their rapid development driven by advancements in IoT, cloud computing, and AI, laying the groundwork for their overarching potential . Similarly, the emphasis on Cyber-Physical Systems (CPS) and DTs as crucial for cyber-physical integration, enabling manufacturing systems with greater efficiency, resilience, and intelligence through feedback loops, defines the problem space for advanced manufacturing contexts . These foundational principles of real-time interaction and data-driven insights are indispensable for DT-PM.

The benefits and motivations identified for adopting DT-driven predictive maintenance directly address the challenges outlined in existing literature. For example, the challenge of unifying data streams and ensuring predictive accuracy is mitigated by the DT's capacity to integrate real-time data from various sources, providing a cohesive and structured context for analysis. This integration supports predictive accuracy by combining machine learning with historical data to forecast equipment maintenance needs, preventing malfunctions and costly downtime. Furthermore, the DT's constant performance monitoring helps identify deviations from expected behavior early, before they escalate into more significant and expensive damage. The shift from reactive or scheduled maintenance to a proactive, data-driven strategy directly combats unplanned downtime, leading to fewer breakdowns, improved maintenance planning, optimal efficiency, maximized uptime, extended equipment lifespan, and reduced operational costs. Additionally, the potential of DTs to address limitations of AI-guided predictive maintenance (PMx), such as poor explainability and sample inefficiency, provides a roadmap for automated PMx at scale.

This survey aims to bridge theoretical advances and industrial practice by examining DT-PM research and implementation together. The subsequent sections cover two key areas of focus:

- Research Progress: This segment will delve into the methodological advancements, architectural frameworks, and technological innovations in DT-driven predictive maintenance. It will encompass discussions on AI and machine learning integration, data management strategies, and modeling techniques.

- Industrial Practices: This part will explore real-world applications, case studies, and the practical implementation challenges and solutions of DT-PM in smart factory environments. It will also cover the economic benefits and operational improvements observed in industrial settings.

By clearly demarcating these areas, the survey ensures a comprehensive yet structured exploration of the field, avoiding overlaps and maintaining clarity in the analysis of DT-driven predictive maintenance in smart factories.

2. Fundamentals of Digital Twin and Predictive Maintenance

This section lays the groundwork for understanding Digital Twin (DT) driven predictive maintenance in smart factories by delineating the core concepts, architectural components, and enabling technologies of Digital Twins, alongside the principles, benefits, and traditional approaches of predictive maintenance. It begins by exploring the multifaceted definitions and conceptual evolutions of Digital Twins, highlighting their role as dynamic virtual replicas of physical assets . Subsequently, it delves into the typical architectural layers and the critical technological enablers, such as AI, IoT, Big Data, and cloud computing, that facilitate the comprehensive mirroring and analytical capabilities of DTs . A crucial aspect of this discussion involves differentiating Digital Twins from Cyber-Physical Systems (CPS), positioning DTs as an advanced implementation within the broader CPS paradigm specifically tailored for detailed virtual replication and advanced analysis in manufacturing .

The section then transitions to predictive maintenance (PdM), contrasting its proactive, data-driven nature with traditional reactive and preventive maintenance strategies . It elaborates on the substantial benefits of PdM, including reduced downtime, extended asset lifespan, and minimized operational costs, particularly in the context of smart factories . Traditional PdM techniques, often categorized under Prognostics and Health Management (PHM), are reviewed, acknowledging their foundational role while highlighting their limitations in capturing complex degradation patterns within dynamic smart factory environments . Finally, the section emphasizes the transition to advanced data-driven methods, especially those leveraging Artificial Intelligence and Machine Learning, to overcome these limitations. It also discusses the critical assumptions regarding data availability, quality, and stationarity that underpin the success of modern PdM, and how Digital Twins can mitigate these challenges by providing a unified, real-time data repository and supporting more comprehensive system understanding . By integrating these foundational concepts, this section establishes the theoretical framework necessary for understanding the subsequent discussion on DT-driven predictive maintenance architectures and applications in smart factories.

2.1 Digital Twin: Core Concepts, Architecture, and Key Enablers

Digital Twins (DTs) represent a foundational paradigm within smart manufacturing, enabling advanced functionalities such as predictive maintenance through sophisticated virtual representations of physical assets. Various definitions of Digital Twins underscore common themes of virtual replication and real-time interaction, yet they also exhibit subtle distinctions in their conceptualization and emphasis.

Fundamentally, a Digital Twin is widely conceptualized as a virtual replica or simulation of a physical asset, process, or system . This virtual entity dynamically mirrors the properties and working dynamics of its physical counterpart, enabling real-time monitoring, analytics, and forecasting of equipment health . Some definitions trace the origin of the concept to Grieves, encompassing physical objects, virtual objects, and the links between them, while NASA's 2012 definition highlights an integrated multi-physics, multi-scale, probabilistic simulation mirroring a physical system's life . A key distinguishing feature in some conceptualizations is the progression from a 'Digital Model' (3D geometry), to a 'Digital Shadow' (real-time data integration), and finally to a true 'Digital Twin' (two-way data flow enabling feedback to the physical world) . This staged evolution highlights increasing levels of fidelity and interactivity. Other perspectives define DTs as AI-based virtual replicas, emphasizing the convergence of AI, big data, IoT, and cloud computing . The overarching commonality is the dynamic, real-time connection between the physical and virtual realms, facilitating close performance monitoring and the identification of deviations .
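The staged progression described above can be made concrete with a small sketch. The Python below is illustrative only: the names `IntegrationLevel` and `classify_integration` are hypothetical, and treating a virtual-to-physical link without a physical-to-virtual link as a plain digital model is an assumption made so that the function handles every input, not something taken from the cited definitions.

```python
from enum import Enum


class IntegrationLevel(Enum):
    """Hypothetical labels for the three stages named in the text."""
    DIGITAL_MODEL = "digital model"
    DIGITAL_SHADOW = "digital shadow"
    DIGITAL_TWIN = "digital twin"


def classify_integration(physical_to_virtual: bool,
                         virtual_to_physical: bool) -> IntegrationLevel:
    """Classify a virtual representation by its automated data flows.

    physical_to_virtual: real-time data flows from the asset into the model.
    virtual_to_physical: the model can feed decisions back to the asset.
    """
    if physical_to_virtual and virtual_to_physical:
        # Two-way data flow: feedback reaches the physical world.
        return IntegrationLevel.DIGITAL_TWIN
    if physical_to_virtual:
        # One-way flow: the model is updated by the asset but cannot act on it.
        return IntegrationLevel.DIGITAL_SHADOW
    # Assumption: without live data from the asset, the representation is
    # treated as a static model (for example, 3D geometry only).
    return IntegrationLevel.DIGITAL_MODEL


if __name__ == "__main__":
    print(classify_integration(True, False).value)
```

The point of the sketch is that the distinction rests on the direction of data flow rather than on the visual fidelity of the model.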

Despite these commonalities, conceptualizations differ mainly in how much they emphasize interaction depth and technological integration. For instance, one definition explicitly details a three-stage creation process and emphasizes two-way data flow for continuous improvement, a more advanced conceptualization than general definitions that focus solely on real-time monitoring. In contrast, another source focuses on the functional role of DTs in predictive maintenance without detailing the underlying architectural sophistication. A third defines DTs as virtual information constructs for "design-in-use" and "what-if simulation," implying a higher level of analytical capability within the virtual model.

The architecture of a Digital Twin typically comprises several layers that together support comprehensive mirroring and analysis. One of the surveyed works does not explicitly define a DT architecture but discusses Prognostics and Health Management (PHM) in smart manufacturing, which inherently relies on elements crucial for DTs, such as sensors for data acquisition, data processing, and analytical tools. Implicitly, a DT architecture involves sensors that collect real-time data from physical assets, a robust data acquisition system, modeling capabilities to create the virtual replica, simulation environments to test scenarios, and visualization tools for human-computer interaction. For instance, one implementation uses the Unity game engine for interactive environments and the Ivion portal for hosting scan data, illustrating the visualization and modeling aspects. The capability to structure and contextualize centralized data from various sources and to tag assets with metadata further underscores the importance of data management within the architecture.

Critical enabling technologies underpin the creation and operation of Digital Twins in smart factories. Artificial Intelligence (AI) and data analytics are paramount, as they process real-time sensor data to generate complex data visualizations and extrapolate device lifecycles accurately. Other essential enablers include the Internet of Things (IoT) for pervasive data collection, Big Data technologies for handling voluminous data, and cloud computing for scalable processing and storage. While some sources point only implicitly to sensors for data feeding and AI for predictive analytics, others explicitly list AI, Big Data, IoT, and cloud computing as drivers. Together, these technologies allow DTs to act as dynamic digital replicas that support real-time monitoring and forecasting of equipment health.

Smart manufacturing principles are deeply intertwined with the development and application of Digital Twins. The pursuit of enhanced efficiency, flexibility, and autonomy in smart factories is directly facilitated by DTs. For example, the use of DTs for industrial training and practical skill development exemplifies their role in preparing the workforce for advanced manufacturing challenges. DTs enable the virtual replication of factory environments, fostering the advanced analysis and optimization that Industry 4.0 objectives require.

A critical comparison of DT conceptualizations reveals a spectrum of understanding, particularly in relation to Cyber-Physical Systems (CPS). While many papers describe DTs without explicitly mentioning CPS, one work provides a nuanced discussion. It posits that DTs are a key means of achieving cyber-physical integration in smart manufacturing and that they share core characteristics with CPS, such as intensive cyber-physical connection and real-time interaction. However, it differentiates DTs from CPS based on their origins, development, engineering practices, and cyber-physical mapping. This distinction is crucial for understanding DT-PM architectures: in this view, DTs represent a more advanced or more specific implementation within the broader CPS paradigm, particularly suited to detailed virtual replication and advanced analysis in manufacturing. DT-PM architectures therefore build on the fundamental principle of CPS (the seamless integration of computation, networking, and physical processes) and extend it with dynamic, bidirectional linkages that enable highly accurate predictive capabilities. The continuous feedback loop inherent in a true Digital Twin goes beyond mere monitoring, allowing virtual-to-physical actions and continuous improvement, which is a sophisticated evolution of the CPS concept.

The contribution of different architectural layers and key enablers to improved predictive accuracy or reduced downtime depends not merely on their presence but on how they are leveraged. For instance, the sensors and AI that some sources treat as implicit enablers are fundamental. Sensors provide the raw, real-time data necessary for accurate condition monitoring. AI, particularly machine learning algorithms, transforms this raw data into actionable insights by identifying patterns, predicting failures, and optimizing maintenance schedules. This contrasts with work focused on 3D modeling and visualization, which is crucial for enhancing system visibility and contextualizing data but contributes to the usability and interpretability of predictive outputs rather than to predictive accuracy itself. The ability to tag assets with metadata and attach documents in a 3D model facilitates human decision-making and operational efficiency, thereby indirectly reducing downtime by streamlining maintenance actions.

The explicit emphasis on real-time data processing using AI and ML algorithms directly affects predictive accuracy. These algorithms analyze continuous data streams to extrapolate device lifecycles, enabling forecasts of remaining useful life. The 'Digital Shadow' and 'Digital Twin' stages, which involve imbuing models with real-time data and enabling two-way data flow, provide the fidelity needed for reliable predictive maintenance. The higher the fidelity of the virtual model, encompassing comprehensive data streams and robust simulation capabilities, the more accurately it can mimic real-world degradation processes. Consequently, conceptualizations emphasizing a rich data environment, advanced analytical tools (AI/ML), and bidirectional communication are most conducive to supporting robust predictive maintenance functionalities. The integration of big data and cloud computing further enhances scalability and computational power, enabling more complex models and faster analysis, which are critical for real-time predictive capabilities in large-scale smart factory environments.

2.2 Predictive Maintenance: Principles, Benefits, and Traditional Approaches

Predictive maintenance (PdM) represents a paradigm shift in asset management, moving from reactive or scheduled interventions to a proactive, data-driven approach aimed at anticipating equipment failures before they occur. Its fundamental principle is the continuous monitoring of equipment health to detect irregularities and foresee malfunctions, thereby enabling timely and targeted maintenance actions. This proactive nature contrasts sharply with corrective maintenance (CM), which addresses failures after they happen, and preventive maintenance (PvM), which relies on fixed schedules regardless of actual equipment condition.

The implementation of PdM in industrial settings yields substantial benefits. Primary advantages include significant reductions in unplanned downtime, extended asset lifespan, and minimized operational and maintenance costs . For instance, the economic impact of maintenance in the U.S. alone, with annual costs approximating USD 222 billion and recall costs exceeding USD 7 billion, underscores the compelling need for more efficient maintenance strategies like PdM over traditional approaches . Beyond cost savings, PdM contributes to improved asset utilization, more effective repair planning, prevention of safety issues, and enhanced overall productivity and operational efficiency . Digital twins (DTs) are instrumental in achieving these benefits by providing accurate virtual replicas for real-time performance monitoring and deviation identification, thereby facilitating predictive maintenance and minimizing downtime .

Traditionally, predictive maintenance has relied on various techniques, often categorized within the broader field of Prognostics and Health Management (PHM). PHM encompasses several stages: data acquisition and preprocessing, degradation detection, diagnostics, prognostics, and the development of maintenance policies . Traditional methods typically involve condition monitoring techniques such as vibration analysis, acoustic emission monitoring, thermography, and oil analysis. For example, vibration analysis, implicitly mentioned through sensor types, is a common technique for detecting anomalies in rotating machinery . These methods are primarily model-based or rely on expert knowledge and empirical rules. They assume that specific physical parameters (e.g., vibration amplitude, temperature) directly correlate with equipment health and degradation, and that deviations from established baselines indicate impending failure.
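The baseline-deviation logic that these traditional techniques assume can be illustrated with a minimal sketch. The Python below is not drawn from any of the surveyed works: the function names, the use of root-mean-square (RMS) vibration amplitude as the health indicator, and the 25 % tolerance are all assumptions chosen for illustration.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a window of vibration samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def exceeds_baseline(samples, baseline_rms, tolerance=0.25):
    """Return True if the window's RMS is more than `tolerance`
    (as a fraction) above the established baseline.

    The 0.25 default is an arbitrary illustrative value, not a
    recommended alarm limit.
    """
    return rms(samples) > baseline_rms * (1.0 + tolerance)


if __name__ == "__main__":
    healthy = [1.0, -1.0, 1.0, -1.0]
    degraded = [2.0, -2.0, 2.0, -2.0]
    print(exceeds_baseline(healthy, baseline_rms=1.0))
    print(exceeds_baseline(degraded, baseline_rms=1.0))
```

A fixed threshold of this kind is easy to audit, but it encodes the stationarity assumption directly: if normal operating conditions shift, the baseline is no longer valid.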

However, these traditional methods often prove insufficient in the dynamic and complex environment of smart factories. Even with checklists and set processes, traditional maintenance methods frequently fail to identify issues before a failure occurs. Several factors contribute to this limitation. Traditional techniques often operate on isolated data points or limited datasets, making it difficult to capture complex degradation patterns or interactions between multiple components. Their underlying assumptions, such as the stationarity of operating conditions or the existence of clear failure thresholds, are frequently violated in smart factories, where operational parameters can fluctuate significantly and equipment behavior is non-linear. The broad benefits claimed by some sources, such as maximizing uptime and reducing operational costs through AI-driven analytics, contrast starkly with the specific challenges highlighted elsewhere: data silos, legacy system integration, security concerns, data limitations, and workforce adoption issues. These challenges imply that traditional, less data-intensive methods may struggle to provide the comprehensive, real-time insights required for effective PdM in interconnected smart factory ecosystems.

The transition from traditional to advanced data-driven methods, particularly those leveraging Artificial Intelligence (AI) and Machine Learning (ML), is a critical development in smart factories. Modern PdM, often termed Smart Prognostics and Health Management (SPHM), increasingly relies on sophisticated data-driven approaches for anomaly detection and fault prediction. These methods, including ML and Deep Learning (DL) algorithms, use historical and real-time data to forecast equipment degradation and failure points.

The applicability and effectiveness of data-driven versus model-based approaches vary. Model-based methods, which rely on physics-of-failure or empirical models, are effective when degradation mechanisms are well understood and can be accurately modeled. However, they struggle with system complexity, unknown failure modes, and variability in operating conditions. Data-driven approaches, conversely, learn complex patterns from operational data, making them highly adaptable to diverse and evolving manufacturing environments. In return, they require substantial amounts of high-quality data to train robust models and face challenges such as scarcity of failure data, lack of explainability, and sample inefficiency.
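As a deliberately simple illustration of trend-based prognostics, the sketch below fits a straight line to a health indicator by ordinary least squares and extrapolates it to a failure threshold to estimate remaining useful life (RUL). It is an assumption-laden toy, not a method from the surveyed literature: the function names are hypothetical, degradation is assumed to be linear and increasing, and real degradation is often non-linear.

```python
def fit_line(times, values):
    """Ordinary least-squares fit of values = slope * time + intercept."""
    n = len(times)
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    slope = sxy / sxx
    intercept = mean_v - slope * mean_t
    return slope, intercept


def estimate_rul(times, values, failure_threshold):
    """Estimate time remaining until the fitted trend reaches the threshold.

    Returns None when the indicator shows no upward trend, because a
    linear extrapolation would never reach the threshold.
    """
    slope, intercept = fit_line(times, values)
    if slope <= 0:
        return None
    t_fail = (failure_threshold - intercept) / slope
    # Clamp at zero: the threshold may already have been crossed.
    return max(0.0, t_fail - times[-1])


if __name__ == "__main__":
    hours = [0, 1, 2, 3]
    indicator = [1.0, 2.0, 3.0, 4.0]  # hypothetical health indicator
    print(estimate_rul(hours, indicator, failure_threshold=10.0))
```

The example also shows why failure-data scarcity matters: the failure threshold itself has to come from somewhere, whether from historical failures, a physics-based limit, or expert judgment.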

The success of advanced PdM techniques in a smart factory environment, especially with DT integration, hinges critically on assumptions regarding data availability, quality, and stationarity. PdM requires specific Information Requirements (IRs) such as physical properties, reference values, contextual information, performance metrics, historical data, and fault data . While smart factories generate vast amounts of data, challenges like data silos and legacy system integration often impede data availability and quality . DTs can address these by offering a unified, real-time data repository, improving data accessibility and coherence. Moreover, the assumption of data stationarity, often crucial for traditional statistical process control methods, is frequently challenged by dynamic operational conditions in smart factories. DTs, by integrating real-time sensor data with historical performance, contextual information, and even physics-based models, can provide a more comprehensive and dynamic understanding of system behavior, thereby mitigating the limitations imposed by non-stationary data. This integration facilitates the realization of Functional Requirements (FRs) for advanced PdM, including theory awareness, context awareness, interpretability, robustness, adaptivity, scalability, transferability, and uncertainty awareness . Ultimately, while traditional PdM laid the groundwork, the advent of smart factories and DTs necessitates and enables a transition to more sophisticated, data-intensive approaches that can better navigate the complexities of modern industrial environments.

3. Digital Twin-Driven Predictive Maintenance Frameworks and Models

Digital Twin-driven Predictive Maintenance (DT-PM) represents a paradigm shift in industrial asset management, moving from reactive or preventive approaches to proactive, data-informed strategies. This section provides a comprehensive overview of the theoretical frameworks and practical models that underpin DT-PM in smart factories. It commences by detailing the integrated architectures and conceptual frameworks crucial for establishing robust DT-PM systems, highlighting the foundational elements and design considerations that dictate their effectiveness, scalability, and interoperability. This exploration will cover the evolution of DT concepts from digital models to fully integrated digital twins, emphasizing the critical role of cyber-physical integration and the Digital Thread in facilitating bidirectional data flow between physical assets and their virtual counterparts .

Following the architectural discussion, the section turns to data acquisition, fusion, and AI-guided analytics. That subsection elaborates on the main types of data sources, primarily IoT sensors and SCADA systems, and on the challenges of collecting and processing large volumes of heterogeneous data in real time. It then discusses the data fusion techniques needed to integrate disparate data streams into a coherent digital representation, enabling a comprehensive understanding of asset health.

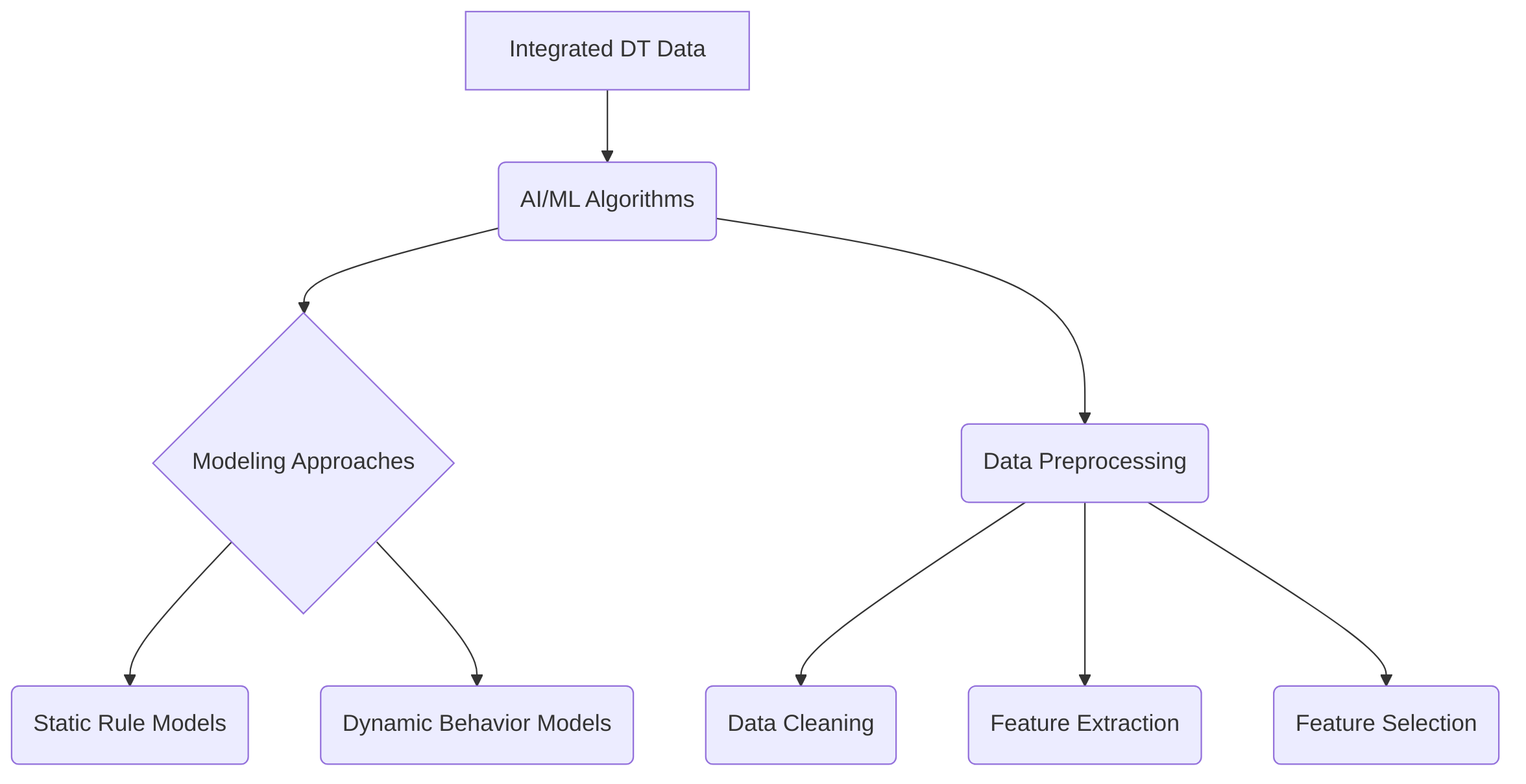

Crucially, the section details the role of AI and machine learning (ML) algorithms in transforming this integrated data into actionable predictive insights, covering modeling approaches that range from static rule models to dynamic behavior models, as well as the data preprocessing steps needed to ensure model robustness and accuracy.

A core challenge identified across both architectural design and data analytics is the need for interpretability in complex AI/ML models, particularly in critical industrial settings where "black box" models can hinder trust and adoption. Future research directions emphasize the development of explainable AI (XAI) and adaptive data cleaning techniques to enhance the trustworthiness and reliability of DT-PM systems in real-world scenarios. This section aims to provide a structured understanding of the current state of DT-PM frameworks and models, identifying key enabling technologies, persistent challenges, and promising avenues for future research and industrial implementation.

3.1 Integrated Architectures and Frameworks for DT-PM

The integration of Digital Twins (DTs) with predictive maintenance (PM) necessitates robust architectural frameworks that facilitate real-time data flow, advanced analytics, and proactive decision-making. While several conceptualizations exist, a comparative analysis reveals both common foundational elements and distinct design choices that influence their effectiveness, scalability, interoperability, and real-time capabilities .

A recurring pattern across various proposed architectures is the emphasis on cyber-physical integration, a fundamental concept shared by Cyber-Physical Systems (CPS) and Digital Twins . This integration, essential for advanced maintenance strategies, underpins the ability of DT-PM frameworks to bridge the gap between physical assets and their virtual counterparts. At a high level, frameworks often involve layers dedicated to physical assets, data acquisition, modeling, and application. For instance, one conceptual framework outlines a progression from a 'Digital Model' (3D geometry) to a 'Digital Shadow' (model with real-time data) and finally to a true 'Digital Twin' (bidirectional data flow), implying a layered structure where raw data is transformed into actionable insights within a virtual environment . This approach highlights a common functional flow: data capture, processing, and integration into an interactive virtual space, often utilizing technologies such as 3D laser scanning, data portals, and game engines for immersive environments .

A more structured approach to DT-PM architectures is presented through comprehensive frameworks. For example, a holistic Digital Twin Framework (DTF) for PMx tasks explicitly delineates essential components such as the Physical Twin (PT), Physical Twin Environment (PTE), Digital Twin (DT), Digital Twin Environment (DTE), Digital Environment (DE), Instrumentation, Realization, Digital Thread (DThread), Historical Repository (HR), Analysis Module, Accountability Module, and Query & Response (QR) . This detailed conceptualization offers a clear functional separation and interdependency between components. The Physical Twin represents the real-world asset, with the Instrumentation layer responsible for real-time data acquisition. This data feeds into the Digital Twin, which mirrors the physical asset's state and behavior. The Digital Twin Environment and Digital Environment provide the computational infrastructure for hosting and running the digital models. The Historical Repository is crucial for storing past operational data, facilitating trend analysis and model training, while the Analysis Module performs the core predictive maintenance functions. Specific examples cited for realization include using Ansys Mechanical for the DE and InfluxDB for the HR, demonstrating concrete technological choices .

Crucial to the effectiveness of any DT-PM architecture is the Digital Thread (DThread). It is distinguished from the Digital Twin itself, serving as an essential element that provides bidirectional information flow between the physical and digital realms . This continuous, connected data stream is vital for enabling PMx by ensuring that real-time sensor data from industrial IoT devices is consistently fed into the digital twin model, enabling immediate monitoring of asset health and early anomaly detection . The Digital Thread facilitates the entire feedback loop, from data collection to analysis and ultimately to actionable maintenance insights. Without a robust Digital Thread, the theoretical benefits of DT in maintenance, such as enhanced visibility and data-driven decision-making, cannot be fully realized .

Another conceptual framework, the three-phased interoperable framework for Smart Prognostics and Health Management (SPHM), aligns with DT-PM principles, albeit noting DT-driven modeling as a future research area . This framework, loosely based on CRISP-DM, comprises:

- Phase 1: Setup and Data Acquisition, focusing on identifying critical machinery, sensor deployment, and establishing secure data collection methodologies (e.g., cloud-based systems).

- Phase 2: Data Preparation and Analysis, which involves data cleaning, signal preprocessing, feature extraction (time, frequency, time-frequency domains), and feature evaluation/selection.

- Phase 3: SPHM Modeling and Evaluation, encompassing the selection of modeling methods (data-driven, hybrid, ML, DL), model evaluation using metrics like RMSE, MAE, and accuracy, and model deployment.
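Two steps of the phases above lend themselves to a short sketch: time-domain feature extraction (Phase 2) and evaluation with RMSE and MAE (Phase 3). The Python below uses only the standard library; the function names and the particular feature set (mean, RMS, peak, crest factor) are illustrative assumptions and are not prescribed by the framework.

```python
import math


def time_domain_features(signal):
    """Compute a few common time-domain features of a signal window."""
    n = len(signal)
    mean = sum(signal) / n
    rms = math.sqrt(sum(x * x for x in signal) / n)
    peak = max(abs(x) for x in signal)
    # Crest factor is peak over RMS; defined as 0.0 for an all-zero window.
    crest = peak / rms if rms else 0.0
    return {"mean": mean, "rms": rms, "peak": peak, "crest_factor": crest}


def rmse(y_true, y_pred):
    """Root-mean-square error between targets and predictions."""
    return math.sqrt(
        sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)
    )


def mae(y_true, y_pred):
    """Mean absolute error between targets and predictions."""
    return sum(abs(a - b) for a, b in zip(y_true, y_pred)) / len(y_true)


if __name__ == "__main__":
    print(time_domain_features([1.0, -1.0, 1.0, -1.0]))
    print(rmse([1, 2, 3], [1, 2, 5]), mae([1, 2, 3], [1, 2, 5]))
```

RMSE penalizes large errors more heavily than MAE, so reporting both gives a rough sense of whether a model's error is dominated by a few large misses.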

While this framework does not explicitly detail DT components, its structured approach to data handling and model deployment provides a strong foundation on which DTs can be integrated. The emphasis on robust data acquisition and preparation in Phases 1 and 2 is critical for feeding accurate and timely information into any DT model.

Regarding scalability, interoperability, and real-time capabilities, specific design choices within these architectures significantly influence performance. The recommendation for modular systems with loosely coupled components, supporting open protocols like OPC UA, MQTT, or REST APIs, directly addresses interoperability and scalability, allowing for easier upgrades and smoother data exchange . Furthermore, leveraging open APIs for historical data pull from systems like ERP/CMMS and middleware for real-time data streaming from SCADA systems, combined with standardizing data formats (JSON, CSV, XML), are practical design choices that enhance both interoperability and real-time data flow . The capacity for real-time status visibility through integrated 3D digital twins, allowing visual navigation and consolidated data without switching systems, exemplifies how architectural design can improve user interaction and operational efficiency .
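The idea of standardizing data formats can be sketched as a thin normalization layer that maps records from different sources onto one common schema. The Python below is a hypothetical example using only the standard library: the field names (`asset`, `tag`, `val`, `id`, `metric`, and so on) and the target schema are invented for illustration, and no real OPC UA, MQTT, ERP, or CMMS client is involved.

```python
import csv
import io
import json


def normalize(asset_id, signal, value, unit, timestamp):
    """Build one record in a hypothetical common schema."""
    return {
        "asset_id": str(asset_id),
        "signal": signal,
        "value": float(value),
        "unit": unit,
        "timestamp": timestamp,
    }


def from_csv(text):
    """Parse a CSV export (assumed columns: asset, tag, val, unit, ts)."""
    reader = csv.DictReader(io.StringIO(text))
    return [
        normalize(r["asset"], r["tag"], r["val"], r["unit"], r["ts"])
        for r in reader
    ]


def from_json_payload(payload):
    """Parse one JSON message (assumed keys: id, metric, reading, uom, time)."""
    msg = json.loads(payload)
    return normalize(
        msg["id"], msg["metric"], msg["reading"], msg["uom"], msg["time"]
    )


if __name__ == "__main__":
    historical = "asset,tag,val,unit,ts\nP-101,vibration,2.5,mm/s,2024-01-01T00:00:00Z\n"
    live = '{"id": "P-101", "metric": "temperature", "reading": 71, "uom": "C", "time": "2024-01-01T00:00:05Z"}'
    records = from_csv(historical) + [from_json_payload(live)]
    print(json.dumps(records, indent=2))
```

Keeping the source-specific parsing in small adapter functions reflects the loosely coupled design recommended above: a new data source needs only a new adapter, while downstream consumers see a single format.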

Critically assessing the maturity and readiness for industrial deployment, many proposed architectures are still largely conceptual or in early stages of implementation. Practical adoption barriers include ensuring seamless interoperability across diverse legacy systems, achieving the necessary real-time performance for critical industrial processes, and managing the sheer volume and velocity of data. A phased rollout, starting with read-only data flows to validate connections before expanding to bi-directional syncing, is a pragmatic approach to mitigate deployment risks and progressively build confidence in these complex systems . While components like "digital model," "digital shadow," and "digital thread" are recognized as key pillars of digital twins , the full deep integration models for production lines remain an area of ongoing research and industrial development. The current landscape suggests a strong theoretical foundation with ongoing efforts to bridge the gap to widespread, robust industrial deployment.

In summary, integrated DT-PM architectures share core layers for data acquisition, modeling, and application, emphasizing cyber-physical integration and the indispensable role of the Digital Thread for bidirectional data flow. Distinct architectural frameworks differentiate themselves through the specificity of their components, data flow mechanisms, and the extent of their real-world implementation. The progression from conceptual models to tangible systems requires careful consideration of design choices that optimize for scalability, interoperability, and real-time capabilities, thereby moving beyond theoretical discussions to overcome practical barriers to industrial adoption.

3.2 Data Acquisition, Fusion, and AI-Guided Analytics for DT-PM

Effective Digital Twin-driven Predictive Maintenance (DT-PM) necessitates robust data acquisition, sophisticated data fusion, and advanced AI-guided analytics to create a holistic digital representation of physical assets and accurately forecast their degradation. The foundation of any DT-PM system lies in the acquisition of diverse data types from the physical asset. Key technologies for this purpose include IoT sensors and, implicitly, SCADA systems, which collect vast volumes of real-time data on machinery and procedures . These sensors provide crucial operational parameters such as temperature, vibration, pressure, and speed, which are continuously fed into the digital twin model for real-time monitoring and early anomaly detection . Data acquisition for digital twins can involve tapping into existing sensor information or retrofitting analogue machines to create digital outputs . However, challenges persist, including the sheer volume, variety, and velocity of data, as well as issues like sensor placement, interference, and environmental factors, which often lead to noisy or incomplete data . Best practices to mitigate these issues include regular sensor calibration, employing redundant sensors, and utilizing data cleaning techniques with filters . Digital Twins can also provide spatial insights to optimize sensor placement and identify coverage blind spots .
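One simple way to realize the early anomaly detection described above is to compare measured values against the twin's expected values and flag residuals that leave a band learned from a healthy baseline. This is a minimal sketch under stated assumptions: the expected values, the baseline length, and the three-sigma rule are illustrative choices rather than a method taken from the cited sources.

```python
from statistics import mean, stdev

def flag_deviations(measured, expected, baseline_n, k=3.0):
    """Return indices after the baseline where the residual leaves the k-sigma band.

    The first `baseline_n` samples are assumed healthy and define the band.
    """
    residuals = [m - e for m, e in zip(measured, expected)]
    base = residuals[:baseline_n]
    mu, sigma = mean(base), stdev(base)
    return [
        i for i, r in enumerate(residuals)
        if i >= baseline_n and abs(r - mu) > k * sigma
    ]

# Hypothetical bearing temperature in degrees Celsius; the twin expects 60.0
expected = [60.0] * 8
measured = [60.1, 59.9, 60.2, 59.8, 60.0, 60.1, 63.0, 60.1]
print(flag_deviations(measured, expected, baseline_n=5))  # [6]
```

In practice the expected series would come from the twin's physics-based or data-driven model rather than a constant.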

Data fusion is a critical technique for integrating these diverse data streams to create a comprehensive digital representation. While many publications address data fusion or digital twins independently, fewer focus on their combined application . Data fusion techniques are generally classified into data-level, feature-level, and decision-level fusion, each offering distinct advantages and disadvantages in combining heterogeneous data types such as camera feeds, location data, and sensor readings . Despite its importance, specific data fusion techniques beyond the general integration of sensor data with digital twin visualizations are often not explicitly detailed in the literature .
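The three fusion levels differ in where the streams are combined. The sketch below shows one deliberately simple instance of each; averaging, concatenation, and majority voting are illustrative choices, and real systems often use weighted or model-based variants.

```python
from collections import Counter

def data_level_fusion(signals):
    """Data level: average raw samples from redundant sensors of the same quantity."""
    return [sum(samples) / len(samples) for samples in zip(*signals)]

def feature_level_fusion(*feature_vectors):
    """Feature level: concatenate per-source features into one joint vector."""
    return [f for vec in feature_vectors for f in vec]

def decision_level_fusion(decisions):
    """Decision level: majority vote over independent per-source decisions."""
    return Counter(decisions).most_common(1)[0][0]

# Two redundant sensors on the same shaft (hypothetical values)
print(data_level_fusion([[1.0, 2.0, 3.0], [3.0, 2.0, 1.0]]))  # [2.0, 2.0, 2.0]

# Vibration features plus one temperature feature
print(feature_level_fusion([0.5, 1.2], [40.0]))               # [0.5, 1.2, 40.0]

# Three classifiers, one per data source
print(decision_level_fusion(["fault", "ok", "fault"]))        # fault
```

Data-level fusion requires commensurate signals, whereas feature-level and decision-level fusion can combine heterogeneous sources such as camera feeds and sensor readings. With tied votes, `most_common` returns the decision that was seen first.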

The integrated data from the digital twin is then processed through AI and ML algorithms to predict faults and equipment degradation . These algorithms leverage both real-time and historical data to provide accurate predictions of equipment degradation and potential failures. While some sources broadly mention "advanced analytics" for pattern recognition , others delve into specific models and tools. The paper by Al-Ali et al. provides a detailed categorization of models used within DTs for PMx, distinguishing them into Information Models (e.g., Point Cloud Modeling, Building Information Modeling (BIM)), Static Rule Models (e.g., Fuzzy Logic Modeling), and Dynamic Behavior Models (e.g., Finite Element Method (FEM), Computational Fluid Dynamics (CFD), Bayesian Networks (BN), Gaussian Processes (GP)). Digital Environments (DEs) and tools such as OpenModelica, Simulink, Ansys, Unity3D, Flexsim, Revit, Simpack, and GeNIe Modeler are also identified as critical for enabling these diverse modeling capabilities . These models and tools are chosen based on their suitability for different PMx tasks, their representational accuracy, and their computational efficiency. For instance, while methods like Support Vector Machines (SVMs) or Random Forests might be efficient for fault classification, more complex deep learning models could be employed for Remaining Useful Life (RUL) prediction.
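Of the dynamic behavior models listed above, a Bayesian network is the easiest to illustrate numerically. The sketch below evaluates the smallest possible network, a single Fault node with one Symptom child, using Bayes' rule. All probabilities are hypothetical and chosen only to show how a rare fault remains fairly unlikely even after an alarm.

```python
def posterior_fault_probability(prior_fault, p_symptom_given_fault, p_symptom_given_healthy):
    """P(fault | symptom observed) for a two-node network Fault -> Symptom."""
    evidence = (
        p_symptom_given_fault * prior_fault
        + p_symptom_given_healthy * (1.0 - prior_fault)
    )
    return p_symptom_given_fault * prior_fault / evidence

# Hypothetical numbers: 2% prior fault rate, 90% detection rate, 5% false alarm rate
p = posterior_fault_probability(0.02, 0.90, 0.05)
print(round(p, 3))  # 0.269
```

Networks used in practice chain many such nodes, which is where dedicated tools such as GeNIe Modeler become useful.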

Key enabling technologies, including IoT sensors, Big Data analytics platforms, AI/ML algorithms, and Cloud/Edge computing, are foundational for facilitating DT-driven predictive maintenance . IoT sensors acquire the raw data, Big Data platforms handle the immense volume and velocity of this data, AI/ML algorithms process it to extract insights and make predictions, and Cloud/Edge computing infrastructures provide the necessary computational power and storage, enabling real-time processing and decision-making at the edge or centralized cloud . AI and Big Data analytics specifically enhance the performance of cloud computing and IoT, which are fundamental to DTs, by improving fault diagnosis and performance prediction .

A significant challenge for AI/ML algorithms in smart factories is their robustness to noisy or incomplete data . Data cleaning and preprocessing steps are crucial, including handling missing values (imputation or deletion), noise reduction (e.g., wavelet transforms, median filters), outlier detection and removal, and data scaling/normalization (e.g., min-max, mean, unit scaling, standardization) . Signal preprocessing techniques like denoising, amplification, and filtering are also vital. Furthermore, feature extraction methods (time-domain like mean, RMS, variance; frequency-domain based on DFT/FFT) and feature selection methods (filter, wrapper, embedded) are employed to prepare data for predictive models .
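The preprocessing and feature extraction steps listed above can be sketched with the standard library alone. This is an illustrative pipeline under stated assumptions: the signal is a synthetic 8 Hz sine sampled at 64 Hz, the dropped sample and spike are injected by hand, and the naive DFT stands in for an FFT routine that a real system would use.

```python
import cmath
import math
from statistics import mean, median, pstdev

def impute_linear(x):
    """Fill None gaps by linear interpolation (assumes at least one known value)."""
    known = [i for i, v in enumerate(x) if v is not None]
    out = list(x)
    for i, v in enumerate(x):
        if v is None:
            left = max((k for k in known if k < i), default=None)
            right = min((k for k in known if k > i), default=None)
            if left is None:
                out[i] = x[right]
            elif right is None:
                out[i] = x[left]
            else:
                w = (i - left) / (right - left)
                out[i] = x[left] + w * (x[right] - x[left])
    return out

def median_filter(x, window=3):
    """Suppress isolated spikes; the window shrinks at the edges."""
    h = window // 2
    return [median(x[max(0, i - h): i + h + 1]) for i in range(len(x))]

def min_max_scale(x):
    """Rescale to [0, 1] (assumes the signal is not constant)."""
    lo, hi = min(x), max(x)
    return [(v - lo) / (hi - lo) for v in x]

def standardize(x):
    """Zero mean, unit variance (assumes the signal is not constant)."""
    mu, sd = mean(x), pstdev(x)
    return [(v - mu) / sd for v in x]

def rms(x):
    """Time-domain feature: root mean square."""
    return math.sqrt(sum(v * v for v in x) / len(x))

def dominant_frequency(x, fs):
    """Frequency-domain feature: frequency in Hz of the largest non-DC DFT bin."""
    n = len(x)
    mags = [
        abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(1, n // 2 + 1)
    ]
    return (mags.index(max(mags)) + 1) * fs / n

fs, n = 64, 64
clean = [math.sin(2 * math.pi * 8 * t / fs) for t in range(n)]
raw = list(clean)
raw[10] = None   # dropped sample
raw[20] = 25.0   # sensor spike

prepared = median_filter(impute_linear(raw))
print(dominant_frequency(prepared, fs))  # 8.0
print(max(prepared) <= 1.0)              # True: the spike has been removed
```

The median filter flattens the sine peaks slightly, which is the usual trade-off between spike rejection and waveform fidelity.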

Another crucial aspect for the trustworthiness and adoption of DT-PM models is their interpretability. Many advanced AI/ML models, particularly deep learning networks, operate as "black boxes," making it difficult for human operators to understand the reasoning behind a prediction. This lack of transparency can hinder trust and adoption in critical industrial environments. Therefore, future research must focus on developing explainable AI (XAI) for DT-PM models to provide clear insights into failure predictions, fostering greater confidence and facilitating informed decision-making.

Future research directions should focus on developing adaptive data cleaning and imputation techniques integrated directly within the DT framework to autonomously address data quality issues. Robust XAI techniques remain equally important, given the complexity of the underlying AI/ML algorithms and the critical nature of predictive maintenance tasks. Evaluating the performance of these AI/ML algorithms under diverse and dynamic operational conditions, including those with varying levels of data noise and incompleteness, will be essential to ensure their real-world applicability and reliability.

4. Research Progress and Industrial Practices

This section provides a comprehensive overview of the current state of Digital Twin (DT)-driven predictive maintenance (PMx) in smart factories, dissecting both the advancements in academic research and the tangible progress in industrial adoption. It begins by examining the evolving landscape of academic contributions, highlighting key research trends such as the integration of advanced data-driven methodologies, particularly Machine Learning (ML) and Deep Learning (DL), for Prognostics and Health Management (PHM) and Remaining Useful Life (RUL) assessment . We explore the various analytical techniques and modeling approaches employed in academic settings, along with their associated strengths and limitations, including the critical challenge of data availability . Furthermore, this section addresses the persistent gap between theoretical DT requirements and their full integration in current research, emphasizing the need for more holistic DT implementations that encompass all Information Requirements (IRs) and Functional Requirements (FRs) for PMx .

Following the academic perspective, the section shifts its focus to industrial adoption, presenting an analysis of current practices and case studies. It discusses the conceptual benefits and projected outcomes of DT-PM across various industrial sectors, such as manufacturing, aerospace, and automotive, while critically evaluating the generalizability of these benefits and the underlying reasons for their anticipated impact . Emphasis is placed on identifying the key challenges encountered during the implementation of DT-PM in real-world industrial environments, including data quality, interoperability, and integration with existing infrastructure . This part also scrutinizes the reporting of industrial outcomes, highlighting the prevalent optimism and the scarcity of concrete, independently verified metrics of success .

By systematically reviewing both academic research and industrial applications, this section aims to bridge the understanding between theoretical advancements and practical implementations. It identifies the crucial research gaps and challenges that need to be addressed to accelerate the widespread and effective deployment of DT-driven PMx solutions in smart factories. Proposed future directions include the development of standardized datasets, robust simulation environments, and scalable DT frameworks to facilitate broader adoption, particularly among Small and Medium-sized Enterprises (SMEs) .

4.1 Academic Research Trends and Contributions

Academic research in Digital Twin (DT)-driven predictive maintenance (PMx) in smart factories is a rapidly evolving field, characterized by significant advancements in data-driven methodologies and an increasing emphasis on cyber-physical integration . While the overarching goal of projects like "Digital Twin on Smart Manufacturing" is to bridge the gap between education and industry by preparing technicians for future manufacturing demands and leveraging real-time capabilities , the academic landscape is primarily focused on sophisticated analytical techniques and system integration.

A prominent research trend in this domain is the application of Machine Learning (ML) and Deep Learning (DL) for data-driven Prognostics and Health Management (PHM), especially for Remaining Useful Life (RUL) assessment . Researchers are actively exploring various ML algorithms, including Support Vector Machines (SVM), Decision Trees, Random Forest, k-Nearest Neighbors (kNN), and regression models for RUL prediction, with comparative analyses of their performance being a key contribution . Furthermore, DL methods such as Recurrent Neural Networks (RNNs) and Deep Belief Networks (DBNs) are being utilized for RUL estimation and advanced feature extraction, demonstrating the push towards more complex and autonomous analytical capabilities . The construction of a Health Index (HI) using techniques like Principal Component Analysis (PCA) is also a notable area of investigation, contributing to comprehensive health assessment of industrial assets . These advancements underscore the field's shift towards more proactive and precise maintenance strategies.
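A PCA-based Health Index can be sketched as the projection of standardized condition features onto their first principal component. The version below is a minimal illustration, not a method from the cited studies. It assumes that every feature varies and grows with wear, so that the features are positively correlated; this makes the power iteration started from an all-ones vector converge and fixes the sign of the component. The feature values are hypothetical.

```python
import math
from statistics import mean, pstdev

def health_index(rows, iters=200):
    """Return a Health Index in [0, 1] per observation (1.0 = healthiest observed).

    rows: list of observations, each a list of feature values.
    """
    cols = list(zip(*rows))
    mus = [mean(c) for c in cols]
    sds = [pstdev(c) for c in cols]
    z = [[(v - m) / s for v, m, s in zip(row, mus, sds)] for row in rows]
    d, n = len(cols), len(rows)

    # Covariance of the standardized features (i.e. the correlation matrix)
    cov = [[sum(r[i] * r[j] for r in z) / n for j in range(d)] for i in range(d)]

    # Power iteration for the leading eigenvector (first principal direction)
    v = [1.0 / math.sqrt(d)] * d
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]

    # Project each observation, then rescale so that higher wear gives a lower index
    scores = [sum(a * b for a, b in zip(r, v)) for r in z]
    lo, hi = min(scores), max(scores)
    return [1.0 - (s - lo) / (hi - lo) for s in scores]

# Hypothetical [vibration RMS in mm/s, bearing temperature in deg C] over time
observations = [[1.0, 60.0], [1.1, 61.0], [1.3, 63.5], [1.8, 66.0], [2.6, 71.0]]
hi_values = health_index(observations)
print(hi_values[0], hi_values[-1])  # 1.0 0.0
```

A degrading index of this kind is a common input to RUL regression, since a single monotonic trend is easier to extrapolate than several raw features.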

Academic contributions extend to the integration of PHM within broader manufacturing paradigms such as mass customization, reconfigurable manufacturing systems (RMS), and service-oriented manufacturing (SOM), highlighting the systemic impact of DT-driven PMx beyond isolated machinery . This holistic approach emphasizes the importance of interoperable frameworks that can support dynamic manufacturing environments.

Regarding DT characteristics and their implications for PMx, academic research frequently leverages DTs for simulation and replication purposes. While modeling and simulation are recognized as crucial components of DTs, alongside data fusion and data transfer, a significant gap exists in achieving full integration of all Information Requirements (IRs) and Functional Requirements (FRs) for PMx. Reviews that classify academic works on DTs for PMx by field, main DT characteristics (e.g., Knowledge-embedded, Simulation, Replication, Real-time Monitoring), DT description, and DThread description find that few studies achieve complete integration of these requirements. This recurring research gap highlights the need for more comprehensive DT implementations that map DT characteristics to PMx requirements. The lack of explicit detail on specific DT characteristics and their precise mapping to requirements further underscores this challenge.

The methodologies predominantly employed in academic research include simulation and data-driven modeling. Simulation provides a controlled environment for testing novel algorithms and concepts and supports rapid prototyping and iterative design, but it may lack the fidelity of real-world conditions. Data-driven modeling, leveraging big data analytics and AI, is essential for extracting insights from operational data. However, a significant limitation is the scarcity of publicly available datasets, which hinders robust benchmarking and comparative analysis of different models. Researchers therefore often rely on a small number of specific datasets for model testing, which can limit the generalizability and practical applicability of their findings. The case study approach, exemplified by the application of an SPHM framework to milling machine data with a focus on data preparation and feature engineering, is a common validation method in the absence of broad datasets.

The gap between theoretical requirements for comprehensive DT-PMx systems and current research capabilities highlights several areas needing more academic attention. There is a need to move beyond conceptual applications and vendor perspectives towards empirically validated and fully integrated DT architectures. Specifically, the challenges in realizing holistic DTs that encompass all IRs and FRs for PMx are significant.

Future research avenues should focus on developing standardized benchmark datasets and robust simulation environments for DT-PM research. This will enable more rigorous and comparable evaluations of new algorithms, fostering faster progress in the field. Furthermore, research into transfer learning methodologies is crucial for adapting DT-PM models across different machine types or operational contexts, addressing the practical challenge of data scarcity and heterogeneity in real-world industrial settings. This would significantly enhance the scalability and generalizability of DT-driven PMx solutions.

4.2 Industrial Adoption and Case Studies

The industrial adoption of Digital Twin-driven Predictive Maintenance (DT-PM) is a focal point for smart factories, with conceptual benefits widely discussed, though concrete, quantifiable real-world deployments remain less prevalent in current literature. While numerous studies articulate the potential advantages of DT-PM, a critical analysis reveals a landscape dominated by conceptual applications and anticipated outcomes, rather than documented industrial practices with robust, independently verified metrics .

In terms of industry sectors, the manufacturing domain is frequently cited as a primary beneficiary. Specifically, conceptual applications extend across product design, manufacturing, and Prognostics and Health Management (PHM) . Digital twins are envisioned for use in designing new products, redesigning existing ones, analyzing product flaws, and optimizing manufacturing schedules and control. Within PHM, conceptual applications include predictive maintenance for large-scale infrastructure like the FAST telescope, tracking spacecraft component lifetimes, and monitoring diverse industrial equipment, such as thermal power plants, automotive components, and battery systems, for fault detection and diagnosis . The aerospace and automotive industries, for instance, are conceptualized to leverage 'what-if simulation' and 'design-in-use' models, while manufacturing primarily focuses on 'design-in-use' scenarios . These examples, however, largely represent conceptualizations or research prototypes rather than fully scaled industrial deployments with detailed, quantifiable returns on investment .

The projected benefits of DT-PM are substantial, encompassing optimized equipment lifespan by identifying components prone to breakdown and suggesting upgrades, leading to improved equipment efficiency and longevity . Furthermore, digital twins are conceptualized to significantly lower maintenance costs by foreseeing issues, optimizing performance, and reducing equipment replacement and repair expenses. Projected savings in maintenance expenditures could be as high as 20% . Additionally, DT-PM is expected to reduce operational expenses through increased operational effectiveness and decreased unscheduled downtime, potentially lowering upfront costs for new equipment by extending their lifespan, and minimizing energy and resource usage via enhanced operational efficiency . General benefits include preventing disruptions, reducing breakdowns, and improving overall operational efficiency within factories . These conceptualized benefits align strongly with the fundamental principles of DT-PM, which aim to shift maintenance from reactive or preventive schedules to data-driven, condition-based interventions, maximizing asset utilization and minimizing costs.

However, the generalizability of these conceptualized benefits across diverse industrial sectors warrants careful consideration. While the core principles of reduced downtime and optimized performance are universally desirable, the specific mechanisms and magnitudes of benefit may vary significantly. For example, the complexity of assets, regulatory environments, data availability, and existing maintenance infrastructures differ considerably between sectors like aerospace and heavy manufacturing. The implementation challenges are also considerable, often centering on the integration of digital twins with existing facility management platforms, such as CMMS and BIM systems . Common challenges include building robust predictive maintenance processes, ensuring data quality and interoperability, and overcoming the initial investment hurdles. Despite these challenges, digital twins are anticipated to accelerate diagnostics, simplify updates, reduce search time for maintenance information, and improve overall maintenance efficiency by linking records directly to equipment . They can also facilitate safe procedure practice in virtual environments, thereby reducing on-site risk and training time, and aid in preserving "tribal knowledge" through embedded annotations and repair steps .

A critical evaluation of reported outcomes in these conceptualized industrial applications often reveals a degree of optimism, with metrics frequently presented without the rigor of standardized reporting or independent verification. For instance, general discussions of anticipated outcomes broadly mention digital twins "gaining traction" and being used across the "entire manufacturing lifecycle," listing potential use cases like onboarding, safety training, and troubleshooting, but they lack specific data on realized benefits such as reduced downtime or cost savings. Similarly, the automotive factory conveyor motor example discussed in the literature provides a general overview of benefits without real-world deployment details or quantifiable savings. The lack of concrete, verifiable metrics in many conceptual discussions makes it challenging to assess the true practical impact and to differentiate between aspirational goals and achieved results. This potential over-optimism stems from the inherent difficulty of precisely quantifying the long-term, systemic benefits of a nascent technology like DT-PM in complex industrial environments. The projected benefits themselves are rooted in the theoretical capabilities of DTs to provide comprehensive, real-time insights into asset health and behavior, enabling proactive interventions that are expected to outperform traditional maintenance paradigms.

One of the few instances approximating a case study of methodological application rather than broad industrial adoption is the implementation of a Smart Prognostics and Health Management (SPHM) framework applied to data from a milling machine operation . This study focuses on the experimental setup, data acquisition, preprocessing, feature extraction, and selection for predictive maintenance, demonstrating the process of preparing data in an industrial context. However, it does not detail specific benefits achieved in terms of cost savings or improved asset utilization, primarily serving as a methodological demonstration .

To advance industrial adoption, future research should prioritize the development of lightweight and scalable DT frameworks, particularly those suitable for Small and Medium-sized Enterprises (SMEs). Currently, custom-crafted DTs often impede the development of unified DT frameworks within PMx, suggesting a need for more standardized and adaptable solutions . Additionally, investigating the role of Digital Twin-as-a-Service (DTaaS) models is crucial for reducing the prohibitive upfront investment typically associated with DT implementations, thereby lowering barriers to entry for a broader range of industrial players.

5. Challenges and Future Directions

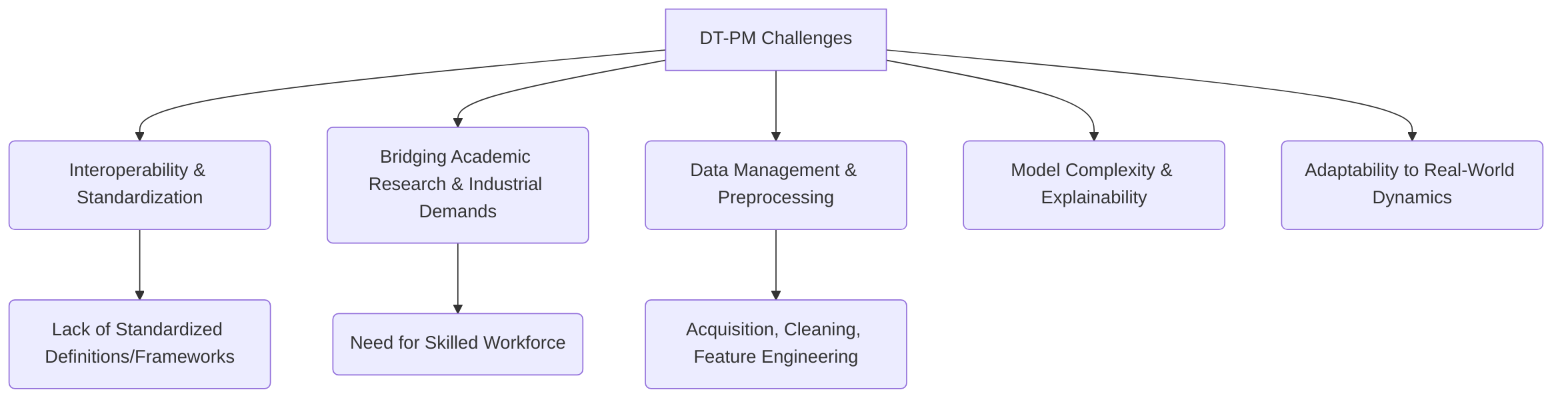

The pervasive integration of Digital Twin-driven Predictive Maintenance (DT-PM) into smart factories represents a paradigm shift from reactive to proactive maintenance, promising substantial operational advantages . However, the full realization of this potential is impeded by a complex interplay of technical, standardization, financial, and human-centric challenges. Overcoming these hurdles necessitates not only advancements in core DT technologies but also a concerted effort in developing robust frameworks, fostering interoperability, addressing ethical concerns, and cultivating a skilled workforce. This section synthesizes the current limitations, explores emerging technologies poised to mitigate these challenges, and outlines critical future research avenues, culminating in a discussion of the ethical and societal implications of widespread DT-PM adoption.

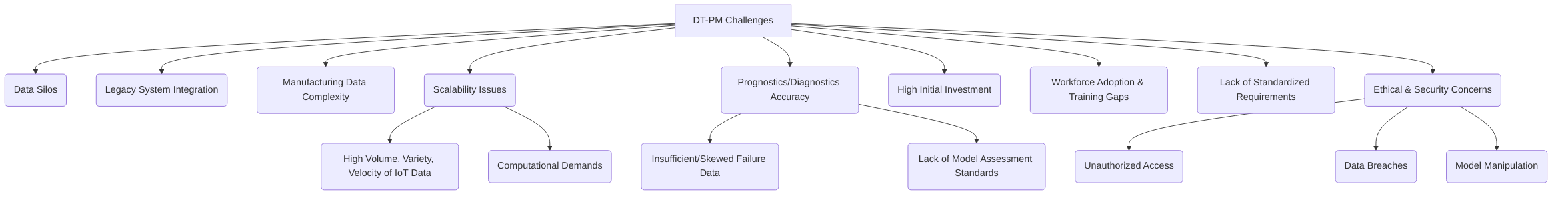

A primary set of challenges revolves around data management and computational demands. The prevalence of data silos and difficulties in integrating with legacy systems hinder the creation of comprehensive and consistent datasets vital for accurate predictive insights . This is compounded by the inherent complexity of manufacturing data, requiring extensive preprocessing and robust data pipelines . Scalability issues further arise from the high volume, variety, and velocity of IoT data, demanding significant computational resources and efficient edge deployments to reduce latency and bandwidth needs . Moreover, the accuracy of prognostics and diagnostics is often hampered by insufficient or skewed failure data, and a lack of standards for model assessment .

Beyond technical complexities, significant non-technical barriers impede DT-PM adoption. The high initial investment and uncertain ROI often lead to executive hesitancy. More critically, workforce adoption and training gaps pose a substantial challenge, as existing staff may lack the technical domain knowledge and data literacy required to effectively utilize DT-PM systems. This highlights the need for user-friendly interfaces and specialized educational programs.

A critical cross-cutting challenge is the lack of standardized requirements for PMx-related Digital Twin Frameworks. The inherent diversity of industrial applications makes a 'one-size-fits-all' standard impractical, and the resulting absence of unified standards hinders interoperability and broader adoption across heterogeneous smart factory systems.

Furthermore, ethical and security concerns are paramount. Digital Twin systems handle sensitive operational data, making them attractive targets for cyber threats such as unauthorized access, data breaches, and model manipulation. Balancing comprehensive data capacity with privacy needs and developing robust countermeasures against cyber-attacks on data integrity are crucial research areas.

The path forward involves leveraging emerging technologies and defining clear future research avenues. Blockchain technology, although not examined in detail for DT-PM in the reviewed literature, holds promise for enhancing data security and trustworthiness through decentralized, immutable ledgers, thereby ensuring the data provenance and reliability critical for robust data pipelines. Augmented Reality (AR) and Virtual Reality (VR), as components of Extended Reality (XR), are poised to revolutionize maintenance support by providing immersive and intuitive human-DT interaction, improving training, visualization, and human-machine collaboration. Edge computing is crucial for enabling faster, localized decision-making, reducing latency, and optimizing bandwidth, particularly for critical assets requiring immediate insights. Combining these technologies, for example by integrating Explainable AI (XAI) with AR to give visual explanations of predictive insights, could substantially improve human-machine collaboration and trust.

Future research directions should focus on developing unified, standardized DT frameworks for PMx, defining common data models and interoperability interfaces. The development of Explainable AI (XAI) for DT-PM models integrated with AR visualization is crucial for enhancing interpretability and user trust. Research into scalable and efficient hybrid edge-cloud DT deployments is vital for optimizing computational loads and reducing latency. Furthermore, applying blockchain to data pipelines could strengthen their robustness and reliability and support data integrity and auditability. The development of adaptive and transferable DT frameworks with bidirectional model libraries is essential for DTs to evolve with changing conditions and be readily deployed across similar assets. Finally, AI-driven DT configuration and management tools will lower the barrier to entry, while human-in-the-loop DT systems with AR/VR will promote intuitive operator interaction.

The widespread adoption of DT-PM also brings significant ethical and societal implications, particularly concerning data governance and workforce dynamics. Data privacy is a central concern, as comprehensive data collection for DTs necessitates robust protocols to prevent unauthorized access or disclosure of sensitive information . Cybersecurity is integral to upholding data privacy, as breaches can compromise data integrity and confidentiality . Mitigation strategies must balance the benefits of extensive data collection with ethical privacy imperatives, employing secure multi-party computation, homomorphic encryption, and federated learning paradigms . Societally, DT-PM will reshape workforce roles, creating new demands for data analytics, AI model management, and cybersecurity expertise, necessitating proactive educational and reskilling initiatives. Addressing these technical, financial, standardization, and ethical challenges collectively is paramount to realizing the full transformative potential of DT-PM in smart factories.

5.1 Current Limitations and Open Issues

Digital Twin-driven predictive maintenance (DT-PM) offers substantial advantages in smart factories, transforming maintenance practices from reactive to proactive. However, despite these projected benefits and the technology's transformative potential, the widespread adoption of DT-PM is hindered by a confluence of technical, standardization, and ethical/security challenges. While some literature emphasizes the capabilities of DTs, such as enabling bidirectional data flow for enhanced realism, a deeper analysis reveals significant hurdles.

A primary obstacle to widespread DT-PM implementation is the prevalence of data silos . Organizations often maintain scattered maintenance records, equipment manuals, inspection reports, and sensor data in disconnected systems. This fragmentation leads to prolonged diagnostic times, inconsistent data, suboptimal scheduling, and duplicated efforts, ultimately diminishing the effectiveness of predictive insights . Compounding this is the challenge of integrating with legacy systems, where outdated technologies lack real-time data exchange capabilities and standardized communication protocols. Such integration becomes both difficult and costly, limiting the seamless flow of information crucial for a comprehensive DT . The inherent complexity of manufacturing data necessitates extensive preparation and preprocessing to ensure quality and consistency for DT models .

Scalability issues represent another critical challenge, particularly concerning the high volume, variety, and velocity of IoT data generated in smart factories . Physical constraints often lead to noisy or incomplete data, hindering the derivation of accurate insights. This is further complicated by the computational demands for high data transfer and processing speeds . The research question RQ5.1, concerning the advantages and limitations of edge versus centralized deployment for PMx DTs, directly addresses this scalability challenge . Efficient edge deployments with limited computational resources (RQ5.2) are crucial for localized processing and reduced latency, which can be explored through methodologies like distributed computing and optimized data compression algorithms . Moreover, the lack of robust and reliable data pipelines (RQ6.1) for practical deployment remains a significant hurdle, necessitating advancements in fault-tolerant data ingestion and processing frameworks .
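One lightweight way to reduce data volume at a resource-constrained edge node is a deadband (report-by-exception) filter, which forwards a sample only when it differs enough from the last forwarded value. This is a sketch of one possible data reduction technique, named here as an assumption; the cited research questions do not prescribe it, and the threshold and readings are hypothetical.

```python
def deadband_filter(samples, threshold):
    """Keep a sample only if it differs from the last kept value by more than threshold.

    samples: list of (timestamp, value) tuples in time order.
    """
    kept = []
    last = None
    for ts, value in samples:
        if last is None or abs(value - last) > threshold:
            kept.append((ts, value))
            last = value
    return kept

# Hypothetical motor temperature readings: (seconds, degrees Celsius)
readings = [(0, 50.0), (1, 50.1), (2, 50.2), (3, 51.0), (4, 51.1), (5, 49.5)]
forwarded = deadband_filter(readings, threshold=0.5)
print(forwarded)  # [(0, 50.0), (3, 51.0), (5, 49.5)]
```

Here half of the samples are dropped before transmission. The method is lossy, so the threshold must stay below the smallest change that the downstream predictive model needs to see.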

The high initial investment and uncertain return on investment (ROI) also contribute to executive hesitancy . This financial hurdle is exacerbated by the need for significant technological proficiency to create realistic and practical virtual models . A critical non-technical challenge is workforce adoption and training gaps . The lack of technical domain knowledge and data literacy among staff impedes their ability to interpret predictive insights, thereby slowing adoption and impacting the perceived value of DT-PM systems . This issue is further underscored by the need for user-friendly interfaces and the collection of expert knowledge . Educational curricula must align with the rapidly evolving industry needs, requiring specialized training programs and practical experience through Digital Twin labs . Data integration complexities (technical) directly exacerbate the need for skilled personnel (non-technical) as more sophisticated methods are required to manage disparate data sources.

From a standardization perspective, a critical limitation is the "lack of standardized requirements" for PMx-related Digital Twin Frameworks (DTFs) (RQ1.1). This issue is difficult to resolve because of the inherent diversity of industrial applications (RQ1.2), which makes a one-size-fits-all standard impractical. The absence of unified standards hinders interoperability, data consistency, and the broader adoption of DTs across heterogeneous smart factory systems. Understanding how standardization facilitates automation (RQ1.3) is crucial. Future research should focus on developing interoperability standards and semantic models for DTs, potentially through domain-specific ontologies and open-source frameworks, to allow for flexible yet structured data exchange.

Ethical and security concerns are paramount, as maintenance systems handle sensitive information, making them attractive targets for cyber threats. Connected systems introduce vulnerabilities such as unauthorized access, data breaches, and model manipulation, posing significant risks to critical business data and intellectual property. Research question RQ2.1 addresses balancing DTs' comprehensive data capacity with privacy needs, while RQ2.2 focuses on developing countermeasures against cyber-attacks on data integrity. Data integration complexities can inadvertently increase cybersecurity risks by expanding the attack surface. To address these risks, research into privacy-preserving federated learning for DT-PM models and secure data sharing protocols, alongside robust anomaly detection mechanisms within DT architectures, is essential.

Beyond these, several other technical challenges hinder DT-PM effectiveness and scalability:

- Prognostics and Diagnostics Accuracy: Insufficient or excessive failure data often skews Remaining Useful Life (RUL) predictions. There is also a lack of standards for assessing prognostic models, and RUL accuracy remains uncertain. Diagnostics are limited by a lack of training data and by the difficulty of validating methods, compounded by noise and outliers. This aligns with the challenge of building robust data pipelines (RQ6.1).

- Integrated Simulation Engines: The challenge lies in understanding the advantages and disadvantages of data-driven versus physics-based models and in combining them effectively (RQ3.1). Using integrated simulators to explicitly define, learn, and update rules and laws (RQ3.2) is a critical research direction, potentially leveraging co-simulation techniques that combine multi-physics models with machine learning algorithms.

- Explainable Simulators and Decision Models: There is a recognized need for model-agnostic methods for local explanations of simulators and decision models in PMx DTs (RQ4.1), both to improve flexibility and adaptability and to address ethical concerns related to transparency. This can be addressed through the application of explainable AI (XAI) techniques, such as SHAP or LIME, tailored for complex DT environments.

- Adaptive and Transferable Digital Twin Framework: Implementing bidirectional model libraries for robustness, adaptability, and transferability (RQ7.1) is vital for ensuring that DTs can evolve with changing operational conditions and be readily deployed across similar assets. Research into meta-learning and domain adaptation techniques can facilitate the development of such frameworks.
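To make the RUL item in the list above concrete, the following sketch estimates RUL by fitting a straight line to a health indicator and extrapolating to a failure threshold. The linear degradation model, the function name `estimate_rul`, and the threshold are simplifying assumptions chosen for illustration; they do not represent a prognostic method proposed in the surveyed literature.

```python
from typing import Optional, Sequence


def estimate_rul(times: Sequence[float], health: Sequence[float],
                 failure_threshold: float) -> Optional[float]:
    """Least-squares line through (time, health); RUL is time left until the threshold."""
    n = len(times)
    if n < 2 or n != len(health):
        raise ValueError("need at least two paired observations")
    mean_t = sum(times) / n
    mean_h = sum(health) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    if sxx == 0:
        raise ValueError("all timestamps are identical")
    slope = sum((t - mean_t) * (h - mean_h) for t, h in zip(times, health)) / sxx
    intercept = mean_h - slope * mean_t
    if slope >= 0:
        # No downward trend observed, so this model cannot predict a failure time.
        return None
    time_of_failure = (failure_threshold - intercept) / slope
    return max(0.0, time_of_failure - times[-1])


if __name__ == "__main__":
    print(estimate_rul([0, 1, 2, 3, 4], [1.0, 0.9, 0.8, 0.7, 0.6], failure_threshold=0.2))
```

With only a handful of noisy observations the fitted slope, and therefore the estimate, can change substantially, which is one way the data scarcity noted above translates into RUL uncertainty.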

In summary, the transition to widespread DT-PM is a multi-faceted challenge, where technical complexities like data integration and scalability are intertwined with non-technical hurdles such as workforce skills and financial investment. The lack of standardization exacerbates interoperability issues, while cybersecurity risks threaten the integrity and trustworthiness of DT deployments. Addressing these limitations requires concerted research efforts into developing robust, interoperable, secure, and human-centric DT frameworks, along with strategic investments in workforce training and clear ROI demonstrations to foster broader industrial adoption.

5.2 Emerging Technologies and Future Research Avenues

The future trajectory of Digital Twin-driven Predictive Maintenance (DT-PM) in smart factories is intrinsically linked to its convergence with a spectrum of emerging technologies, offering synergistic benefits that can overcome current limitations and enhance operational capabilities. While several papers acknowledge the foundational role of technologies such as the Internet of Things (IoT), cloud computing, and Artificial Intelligence (AI) in DT development, a more explicit integration of advanced concepts such as blockchain, Augmented Reality (AR), and edge computing is paramount for the next generation of DT-PM systems.

Blockchain technology holds significant promise for enhancing data security and trustworthiness within DT applications. By providing a decentralized, immutable ledger, blockchain can ensure the integrity of sensor data, maintenance records, and operational parameters, which are critical for accurate predictive modeling. This distributed trust mechanism could prevent data tampering and facilitate secure data sharing among the stakeholders in a smart factory ecosystem, addressing critical concerns around data provenance and reliability. Although the surveyed literature does not address blockchain directly, the general need for robust data pipelines motivates exploring it as a means of enhancing data integrity.
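The tamper-evidence property described above can be illustrated with a hash-linked log, in which each record stores the hash of its predecessor. This is only a sketch of the immutability idea: it runs on a single machine and omits the consensus and decentralization that define an actual blockchain. The record layout and the function names are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS_HASH = "0" * 64  # placeholder predecessor for the first record (assumption)


def _digest(payload: Any, previous_hash: str) -> str:
    body = json.dumps({"payload": payload, "previous_hash": previous_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_record(chain: List[Dict[str, Any]], payload: Any) -> Dict[str, Any]:
    """Append a record whose hash covers both its payload and the previous hash."""
    previous_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    record = {"payload": payload, "previous_hash": previous_hash,
              "hash": _digest(payload, previous_hash)}
    chain.append(record)
    return record


def verify_chain(chain: List[Dict[str, Any]]) -> bool:
    """Recompute every hash; any edited payload or broken link makes this False."""
    previous_hash = GENESIS_HASH
    for record in chain:
        if record["previous_hash"] != previous_hash:
            return False
        if record["hash"] != _digest(record["payload"], previous_hash):
            return False
        previous_hash = record["hash"]
    return True


if __name__ == "__main__":
    log: List[Dict[str, Any]] = []
    append_record(log, {"sensor": "spindle-1", "value": 0.42})
    append_record(log, {"sensor": "spindle-1", "value": 0.45})
    print(verify_chain(log))
    log[0]["payload"]["value"] = 99  # simulate tampering with a stored reading
    print(verify_chain(log))
```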

Augmented Reality (AR) is poised to revolutionize maintenance support by providing immersive and intuitive human-DT interaction. The literature indicates the increasing relevance of Extended Reality (XR), encompassing AR and Virtual Reality (VR), for training, maintenance visualization, and skill development in industrial contexts. For instance, the use of NVIDIA Omniverse applications for DT education and Meta Quest 3 for industrial training underscores the potential of immersive technologies to enhance DT utilization. AR can overlay real-time diagnostic data and maintenance instructions directly onto physical machinery, guiding technicians through complex repair procedures and improving human-machine collaboration. This integration moves beyond mere visualization, enabling technicians to interact with the digital twin's insights in a contextualized and efficient manner.

Edge computing is crucial for enabling faster, localized decision-making in DT-PM systems. By processing data closer to the source, edge computing reduces latency, minimizes bandwidth requirements, and enhances the responsiveness of predictive maintenance actions. This is particularly vital for critical assets where immediate insights are necessary to prevent catastrophic failures. The need for improved scalability and efficiency of edge DT deployments is a recognized research gap. Research into hybrid edge-cloud computing architectures for DT-PM is imperative, where critical tasks are performed at the edge, and complex analytics and long-term data storage are offloaded to the cloud. This distributed architecture could optimize resource utilization and enhance the overall robustness of DT-PM systems.
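The division of labor in a hybrid edge-cloud architecture can be sketched as follows: a latency-critical alarm check runs locally on every sample, while only batch summaries are passed on for cloud-side analytics. The class `EdgeNode`, its fields, and the in-memory `uploaded_batches` list (standing in for a real cloud upload) are illustrative assumptions rather than a reference design.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class EdgeNode:
    alarm_limit: float  # local threshold for the time-critical check
    batch_size: int     # number of samples summarized per upload
    buffer: List[float] = field(default_factory=list)
    uploaded_batches: List[Dict[str, float]] = field(default_factory=list)

    def ingest(self, value: float) -> bool:
        """Run the alarm check at the edge and queue the sample for batching."""
        alarm = value > self.alarm_limit
        self.buffer.append(value)
        if len(self.buffer) >= self.batch_size:
            self._flush()
        return alarm

    def _flush(self) -> None:
        # Only a compact summary leaves the edge; raw samples stay local.
        summary = {
            "count": len(self.buffer),
            "mean": sum(self.buffer) / len(self.buffer),
            "max": max(self.buffer),
        }
        self.uploaded_batches.append(summary)  # stand-in for a cloud upload
        self.buffer.clear()


if __name__ == "__main__":
    node = EdgeNode(alarm_limit=5.0, batch_size=3)
    for reading in [1.0, 6.0, 2.0, 3.0]:
        print(reading, node.ingest(reading))
    print(node.uploaded_batches)
```

Which tasks stay at the edge is a design decision; here the alarm is kept local because it must not depend on network availability, whereas the summary statistics tolerate delay.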

A significant challenge identified in the literature is the lack of standardized protocols for Digital Twin development and data exchange. The need for an interoperable approach to Prognostics and Health Management (PHM) across different industries is highlighted as a key challenge. Interoperability is critical for fostering wider adoption and creating interconnected smart factory ecosystems, allowing seamless data flow and model sharing between different DT instances and systems. Ongoing efforts in developing industry standards are essential to move DT-PM from fragmented solutions to a cohesive, integrated paradigm. This includes developing standardized DT frameworks for PMx by analyzing and integrating existing proposals, addressing a crucial research gap identified in the literature.

Beyond individual technologies, the synergistic combination of these emerging fields offers profound advancements. For example, the integration of Explainable AI (XAI) for DT-PM models with AR visualization presents a powerful avenue for improved human-machine collaboration. While the need to enhance the explainability of DT decision models for PMx is recognized, combining XAI with AR allows for direct, visual explanation of complex AI decisions in the physical context of the asset, enhancing operator trust and facilitating quicker, more informed interventions. Similarly, blockchain-secured data combined with edge computing could enable highly reliable and localized decision-making in autonomous maintenance systems.
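As an illustration of the kind of per-feature explanation that an AR overlay could present to an operator, the sketch below perturbs one input at a time toward a baseline and records how the prediction changes. This is a simple one-at-a-time sensitivity analysis, not SHAP or LIME, and the toy risk model, the feature names, and the baseline values are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Features = Dict[str, float]


def local_sensitivity(predict: Callable[[Features], float], instance: Features,
                      baseline: Features) -> List[Tuple[str, float]]:
    """Change in prediction when each feature alone is reset to its baseline value."""
    reference = predict(instance)
    effects: List[Tuple[str, float]] = []
    for name in instance:
        perturbed = dict(instance)
        perturbed[name] = baseline[name]
        effects.append((name, reference - predict(perturbed)))
    # Largest absolute effect first, so the most influential input is listed on top.
    effects.sort(key=lambda item: abs(item[1]), reverse=True)
    return effects


def toy_risk_model(x: Features) -> float:
    # Hypothetical failure-risk score used only to exercise the function.
    return 0.5 * x["vibration"] + 0.01 * x["temperature"]


if __name__ == "__main__":
    current = {"vibration": 4.0, "temperature": 80.0}
    normal = {"vibration": 2.0, "temperature": 70.0}
    for feature, effect in local_sensitivity(toy_risk_model, current, normal):
        print(f"{feature}: {effect:+.2f}")
```

Because it treats the model as a black box, the same function applies to a data-driven predictor or to a simulator wrapped as a callable, although it ignores interactions between features.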

Future research directions should be framed as actionable projects that bridge the gap from proof-of-concept to industrial viability. Specific avenues include:

- Standardized DT Frameworks for PMx: Research into developing unified, standardized DT frameworks by analyzing and integrating existing proposals. This includes defining common data models, communication protocols, and interoperability interfaces to facilitate seamless integration across diverse manufacturing environments. This directly addresses the need for standardization identified in various studies.

- Explainable AI (XAI) for DT-PM Models Integrated with AR Visualization: Develop advanced XAI techniques specifically tailored for DT-PM models, focusing on interpretability and transparency. Integrate these XAI capabilities with AR platforms to provide intuitive visual explanations of predictive insights and suggested maintenance actions to human operators. Expected outcomes include increased user trust, faster decision-making, and improved diagnostic accuracy.

- Scalable and Efficient Hybrid Edge-Cloud DT Deployments: Investigate novel architectures for DT deployment that intelligently distribute computational loads between edge devices and cloud infrastructure. This involves optimizing data flow, model synchronization, and real-time processing at the edge while leveraging cloud resources for complex analytics and long-term data archival. Research should focus on efficiency metrics, latency reduction, and fault tolerance. This addresses the challenge of scalability and edge deployment identified in the literature.

- Blockchain-Enhanced Data Pipeline Robustness and Reliability for DT-PM: Explore the application of blockchain and distributed ledger technologies to enhance the security, immutability, and auditability of data pipelines feeding DT-PM systems. This would involve developing secure data ingestion, storage, and sharing mechanisms that ensure data integrity from sensor to DT model, strengthening data pipeline robustness and reliability.

- Adaptive and Transferable DT Frameworks with Bidirectional Model Libraries: Research into developing DT frameworks that can adapt to evolving operational conditions and transfer knowledge across different assets or factory lines. This includes creating bidirectional model libraries that allow for continuous learning and refinement of DT models based on new data and operational feedback, addressing the need for adaptive and transferable frameworks.

- AI-Driven DT Configuration and Management Tools: To counter the need for highly skilled personnel, future research should focus on developing AI-driven tools that automate the complex setup, calibration, and ongoing management of Digital Twins. This would involve machine learning algorithms to autonomously configure DT parameters, integrate data sources, and self-optimize for performance, lowering the barrier to entry for widespread industrial adoption.

- Human-in-the-Loop DT Systems with AR/VR for Intuitive Interaction: Investigate advanced human-in-the-loop DT systems that leverage AR/VR for highly intuitive operator interaction and decision support. This includes research into natural language processing for DT querying, gesture-based controls for model manipulation, and personalized user interfaces that adapt to operator skill levels and task requirements, promoting seamless human-DT collaboration.

The expansion of DT applications beyond single assets to entire factory systems is also a critical future direction, though it is only briefly touched upon in some papers. Developing system-level digital twins would enable holistic predictive maintenance strategies that optimize production flow, energy consumption, and overall factory resilience, rather than focusing solely on individual components. This systemic approach is essential for realizing the full potential of smart factories and for broadening industrial adoption. Critically assessing the maturity and adoption readiness of these proposed technologies, and structuring research to bridge the gap from proof-of-concept to industrial viability, will be paramount for the continued evolution of DT-PM.

5.3 Ethical and Societal Implications

The widespread adoption of Digital Twin-driven Predictive Maintenance (DT-PM) in smart factories, while offering substantial operational benefits, introduces a complex array of ethical and societal implications, particularly concerning data governance, privacy, and workforce dynamics. The collection of vast amounts of industrial data for DT-PM systems inherently raises concerns about data ownership, access control, and the potential for misuse of sensitive information.

A central ethical consideration in DT-PM is data privacy. Digital Twins, by their nature, aggregate comprehensive real-time and historical data from physical assets, often including operational parameters, performance metrics, and even environmental conditions. This extensive data collection, while crucial for accurate predictive models, necessitates robust privacy protocols to prevent unauthorized access or disclosure. Beyond direct operational data, concerns also arise regarding data inferred from these systems that might inadvertently reveal proprietary manufacturing processes or sensitive business intelligence.

The interplay between data privacy and cybersecurity is paramount in the context of DT-PM. Cybersecurity is not merely a separate technical concern but an integral component of upholding data privacy. A breach in cybersecurity, such as a cyber-attack targeting Internet of Things (IoT) devices or the Digital Twin itself, directly compromises data integrity and confidentiality, thereby eroding privacy. For instance, a sophisticated attack could not only expose sensitive operational data but also manipulate the data fed into the Digital Twin, leading to erroneous predictions and potentially catastrophic physical failures. Therefore, a security-by-design approach, incorporating robust cybersecurity measures from the initial stages of DT-PM system development, is essential to mitigate privacy risks. This includes implementing strong encryption for data at rest and in transit, establishing stringent access control mechanisms, and developing comprehensive data governance policies. Secure sharing options and granular access permissions within DT platforms are also critical to allow authorized personnel selective access to information while restricting sensitive areas.
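One building block of such a security-by-design approach is message authentication, which lets the Digital Twin reject readings that were altered in transit. The sketch below uses an HMAC over a serialized reading and relies only on Python's standard library. It addresses integrity only, not confidentiality, so it complements rather than replaces encryption; the reading layout, function names, and hard-coded demo key are assumptions, and key distribution is out of scope.

```python
import hashlib
import hmac
import json
from typing import Any, Dict


def sign_reading(reading: Dict[str, Any], key: bytes) -> str:
    """Return an HMAC-SHA256 tag over a canonical JSON encoding of the reading."""
    message = json.dumps(reading, sort_keys=True).encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_reading(reading: Dict[str, Any], tag: str, key: bytes) -> bool:
    """Accept the reading only if its tag matches; comparison is constant-time."""
    return hmac.compare_digest(sign_reading(reading, key), tag)


if __name__ == "__main__":
    shared_key = b"demo-key-not-for-production"  # assumption: pre-shared secret
    reading = {"asset": "pump-7", "pressure_bar": 3.2}
    tag = sign_reading(reading, shared_key)
    print(verify_reading(reading, tag, shared_key))
    reading["pressure_bar"] = 1.0  # simulate manipulation in transit
    print(verify_reading(reading, tag, shared_key))
```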