A Review of Natural Language Processing Methods for Identifying "Greenwashing" in ESG Disclosures

1. Introduction

The escalating global emphasis on sustainable business practices has amplified demand for corporate Environmental, Social, and Governance (ESG) disclosures, with companies increasingly showcasing environmental commitments to enhance public perception and attract environmentally conscious investors. This heightened focus, however, has also fueled the rise of "greenwashing", a deceptive practice in which organizations present a misleading impression of environmental responsibility without substantial operational changes. Such deliberate misrepresentation undermines the integrity of ESG ratings and corporate sustainability reporting, necessitating robust detection mechanisms.

The pervasive nature of greenwashing is a significant concern: a substantial proportion of corporate environmental claims have been found to be ambiguous, deceptive, or lacking verifiable evidence. The problem is compounded by the admission of many corporate leaders in the United States that they have engaged in greenwashing. The ramifications are extensive, affecting risk assessments, regulatory compliance, ESG investment decisions, and corporate reputation. Consequently, stakeholders face considerable difficulty in accurately assessing a company's genuine commitment to sustainability.

Traditional methods of analyzing ESG disclosures are increasingly inadequate: corporate sustainability reports are voluminous and text-heavy, making manual analysis cumbersome and time-intensive. The sheer volume of information from diverse sources, including corporate reports, news, and social media, often overwhelms human analytical capacity. Moreover, the absence of standardized analytical tools hinders reproducibility and methodological robustness. ESG rating systems also struggle to process vast and often misaligned metrics, which leaves room for companies to manipulate investor perceptions through low-quality indicators.

Manual identification of greenwashing is further complicated by the nuanced linguistic features of corporate sustainability reporting. Companies frequently use vague and ambiguous language to mask or exaggerate their environmental efforts, relying on buzzwords without clear definitions or verifiable evidence. The subjective nature of greenwashing definitions and the use of selective disclosure to create overly positive corporate images pose significant challenges for automated detection. Discrepancies between internal corporate sentiment and external social media narratives can also signal potential greenwashing. Additionally, the complexity and varied layouts of corporate PDF documents impede the automated information extraction on which NLP solutions depend.

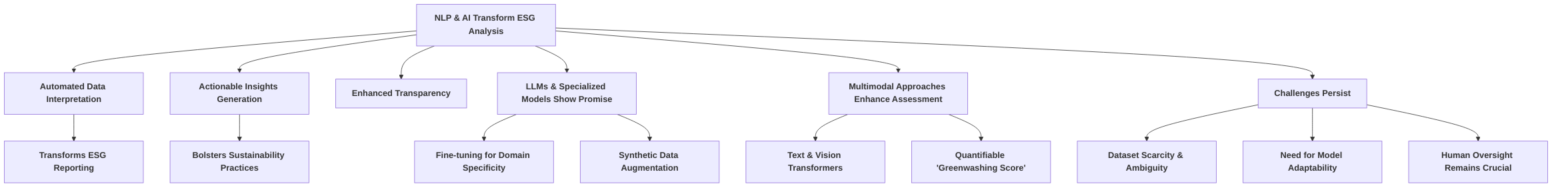

These limitations underscore a critical demand for robust, automated detection mechanisms, particularly those leveraging Artificial Intelligence (AI) and Natural Language Processing (NLP). AI and Machine Learning (ML) offer the potential for accurate and rapid analysis of the extensive text in sustainability reports, overcoming the constraints of manual scrutiny. NLP is crucial for advancing ESG efforts: it can automate ESG data extraction and analysis, enhance accuracy, and surface insights for improving sustainable business practices. Specific applications include classifying text segments against precise ESG activities, a task that often requires domain-specific fine-tuning of general-purpose Large Language Models (LLMs). By improving transparency and accountability in ESG reporting, NLP can provide data-driven evidence of sustainability efforts, fostering stakeholder confidence and encouraging sustainable long-term practices. The development of AI-based detection models, potentially combining text and vision transformers to quantify sustainability messaging, is vital for supporting company ratings and enhancing explainability. This evolving landscape highlights the need for sophisticated NLP solutions that accurately identify misleading claims and promote genuine corporate accountability in ESG disclosures.

1.1 Background and Motivation

The increasing global focus on sustainable business practices has led to a surge in demand for corporate Environmental, Social, and Governance (ESG) disclosures, with companies often highlighting their commitment to environmental stewardship to enhance public image and attract environmentally conscious investors. However, this heightened emphasis on ESG factors has also given rise to greenwashing, a deceptive practice in which organizations create a false or misleading impression of their environmental responsibility without substantial operational changes. This deliberate attempt to mislead investors and the public through unverified or ambiguous environmental claims poses a significant challenge to the integrity of ESG ratings and the reliability of corporate sustainability reporting, necessitating robust detection mechanisms.

The prevalence of greenwashing is a critical concern: research indicates that a significant portion of corporate environmental claims are ambiguous, deceptive, or unfounded, and many lack any supporting evidence. The problem is further exacerbated by a substantial percentage of corporate leaders in the United States acknowledging their involvement in greenwashing. The implications are far-reaching, including distorted risk assessments, regulatory compliance issues, flawed investment decisions in ESG-focused portfolios, legal liabilities, and reputational damage. Consequently, stakeholders, including investors and regulators, find it exceedingly difficult to gauge a company's genuine dedication to sustainability.

Traditional methods of scrutinizing ESG disclosures are increasingly insufficient for addressing the complexities of greenwashing, for several reasons. Corporate sustainability reports are often lengthy and text-heavy, making manual analysis cumbersome and time-consuming for analysts attempting to extract relevant information. The sheer volume of information across sources, including corporate reports, news articles, and social media, overwhelms human analytical capacity. Moreover, the lack of standardized, open tools for report analysis burdens researchers, hindering reproducibility and raising concerns about methodological robustness. Finally, traditional ESG rating systems struggle to process vast and often misaligned reported metrics, which can allow companies to manipulate investor perceptions through low-quality indicators.

The challenge of manually identifying greenwashing is compounded by linguistic features specific to corporate sustainability reporting. Companies frequently use nuanced, vague, and ambiguous language to mask their actual environmental impact or to exaggerate their efforts. Terms such as "net zero," "environmental-friendly," and "energy efficient" often serve as buzzwords without clear definitions or verifiable evidence, making it difficult to distinguish genuine commitment from mere rhetoric. The subjective nature of defining and quantifying greenwashing further complicates automated detection, as companies may use selective disclosure to create an overly positive corporate image that misleads stakeholders about their actual environmental impact. Such deceptive portrayal can weaken the correlation between in-house corporate sentiment metrics and external social media narratives, and that weakened correlation can itself hint at greenwashing. The complexity and varied layouts of corporate PDF documents also complicate automated information extraction, posing a significant hurdle for NLP solutions.
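
To make the buzzword problem concrete, the Python sketch below flags sentences that use one of the terms named above without any accompanying figure. It is a minimal illustration under stated assumptions: the lexicon `BUZZWORDS` and the rule that any digit counts as "evidence" are hypothetical simplifications, not a method taken from the surveyed papers, and a practical system would need a curated lexicon and a much richer notion of supporting evidence.

```python
import re

# Hypothetical lexicon built from the buzzwords named in the text; a real
# system would need a curated, domain-reviewed list.
BUZZWORDS = ("net zero", "environmental-friendly", "eco-friendly",
             "energy efficient", "sustainable")

# Word-boundary patterns so that, e.g., "sustainable" does not match "unsustainable".
_PATTERNS = {term: re.compile(r"\b" + re.escape(term) + r"\b") for term in BUZZWORDS}

# Crude evidence heuristic (an assumption): any digit counts as quantitative support.
_EVIDENCE = re.compile(r"\d")


def flag_unsupported_buzzwords(sentence: str) -> list[str]:
    """Return the buzzwords found in `sentence` if it contains no numeric evidence."""
    lowered = sentence.lower()
    found = [term for term, pattern in _PATTERNS.items() if pattern.search(lowered)]
    if found and not _EVIDENCE.search(sentence):
        return found
    return []


if __name__ == "__main__":
    print(flag_unsupported_buzzwords("Our products are eco-friendly and energy efficient."))
    print(flag_unsupported_buzzwords("We cut emissions by 12% on our path to net zero."))
```

The first call returns `['eco-friendly', 'energy efficient']`; the second returns an empty list, because "12%" counts as evidence under this deliberately naive heuristic, which illustrates how easily surface rules can be satisfied without genuine substantiation.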

Given these limitations, there is a critical and growing demand for more robust, automated detection mechanisms, particularly those leveraging Artificial Intelligence (AI) and Natural Language Processing (NLP). AI and Machine Learning (ML) can analyze the extensive text in sustainability reports accurately and quickly, overcoming the constraints of manual scrutiny. NLP in particular is highlighted as a crucial technology for advancing ESG efforts by automating data analysis and uncovering insights for more sustainable business practices. It can automate ESG data extraction from diverse sources, saving time while maintaining accuracy, and it enables businesses to gain deeper insight into their ESG performance and identify areas for improvement. Specific NLP applications include classifying text segments against precise ESG activities, a more granular task than linking text to broad Sustainable Development Goal (SDG) categories and one that requires domain-specific fine-tuning of general-purpose Large Language Models (LLMs). By enhancing transparency and accountability in ESG reporting, NLP can provide data-driven evidence of sustainability efforts, building stakeholder confidence and driving sustainable long-term business practices. AI-based detection models, potentially leveraging text and vision transformers to quantify sustainability-related messaging, are crucial for rating companies by a "greenwashing score" and for improving explainability by mapping textual and visual information onto sustainability axes. This evolving landscape underscores the need for sophisticated NLP solutions that accurately identify misleading claims and promote genuine corporate accountability in ESG disclosures.

1.2 Survey Objectives and Structure

This survey aims to provide a comprehensive critical review of Natural Language Processing (NLP) methods specifically designed for identifying greenwashing in Environmental, Social, and Governance (ESG) disclosures. The objective is to systematically analyze existing research, identify prevalent methodologies, evaluate their strengths and weaknesses, and propose promising avenues for future research. This endeavor builds on the critical need to detect misleading climate-related corporate communications, as highlighted by existing surveys of NLP methods for this task. Similarly, a systematic literature review following the PRISMA 2020 statement examines the interrelationships among greenwashing, sustainability reports, Artificial Intelligence (AI), and Machine Learning (ML), aiming to identify research patterns, gaps, and future opportunities.

The survey is structured as a clear narrative, progressing from the multifaceted problem of greenwashing to advanced NLP solutions and future research directions. It first examines the foundations of greenwashing, including its various manifestations and its profound impact on sustainable business practices and investor confidence. This initial section establishes the rationale for employing data analytics, particularly NLP, as a robust tool for detecting and preventing these unethical practices.

The survey then turns to a detailed examination of the NLP methods used in greenwashing detection. This involves a systematic categorization of methodologies, such as those that identify climate-related statements, assess green claims, perform sentiment or tone analysis, and conduct topic detection, often leveraging frameworks like the Task Force on Climate-related Financial Disclosures (TCFD). Some research, for instance, evaluates NLP frameworks designed specifically to detect greenwashing in ESG, proposing mechanisms for automated surveillance and examining the correlation between internal ESG sentiment and public opinion on social media. One such study evaluates 12 pharmaceutical entities by analyzing sentiment with the FinBERT-ESG-9-Categories model, suggesting that a lack of correlation between internal and external sentiment scores can indicate potential greenwashing.

The survey also analyzes the role of advanced language models, including Large Language Models (LLMs), in enhancing the precision of greenwashing detection. It reviews papers on the capabilities of current-generation LLMs in identifying text related to environmental activities, particularly in aligning text segments from Non-Financial Disclosures (NFDs) with the EU Taxonomy. This includes how LLM performance can be significantly enhanced by fine-tuning on a combination of original and synthetically generated data, as demonstrated by the ESG-Activities benchmark dataset and its detailed analysis of LLM performance in zero-shot and fine-tuning settings. The integration of NLP tools can further streamline ESG reporting, automate data analysis, and uncover actionable insights, thereby improving transparency and accountability. One source, which describes how NLP services offered by Elite Asia can enhance ESG marketing and reporting and lead to more sustainable business practices, also underscores the broader utility of NLP for identifying opportunities for positive environmental, social, and governance impact.

Furthermore, the survey critically assesses the limitations and challenges of current NLP approaches to greenwashing detection, including the availability and quality of datasets, the difficulty of capturing nuanced deceptive language, and the computational resources required by advanced models. The discussion also covers methodologies for assessing corporate disclosures and for developing models that explain variations in ESG ratings, thereby helping to bridge existing gaps in ESG measurement through NLP.

Finally, the survey outlines future research directions, emphasizing the potential of multimodal deep learning and the development of more robust, interpretable, and scalable NLP models for greenwashing detection. This forward-looking perspective aims to guide researchers toward unexplored areas and foster innovation in this critical domain, so that the field keeps pace with the evolving nature of greenwashing practices. Although some papers describe specific research projects developing AI models for greenwashing detection rather than offering comprehensive surveys, their contributions to specific techniques, such as multimodal deep learning, are integrated into the broader discussion of potential solutions. Taken together, this structure is designed to provide a comprehensive and actionable overview of NLP's role in combating greenwashing, thereby supporting more genuine corporate sustainability efforts.

2. Understanding Greenwashing and ESG Disclosures

This section provides a foundational understanding of greenwashing within the context of ESG disclosures, a critical prerequisite for developing effective detection methodologies. It systematically explores the conceptual definitions and common manifestations of greenwashing, details the intricate landscape of ESG reporting and diverse data sources, and highlights the inherent challenges in identifying deceptive environmental claims.

| Type of Greenwashing | Description | Examples |
|---|---|---|
| Vague Language | Use of ambiguous or unsubstantiated terms without clear definitions or verifiable evidence. | "Eco-friendly," "Net Zero," "Sustainable" |
| Irrelevant Claims | Highlighting an attribute that is technically true but irrelevant to environmental impact, or misleading. | "CFC-free" (when CFCs are banned by law) |
| Hidden Trade-offs | Promoting one positive environmental aspect while ignoring significant negative impacts. | Recycled paper with high energy consumption in production |
| Unsubstantiated Claims | Environmental claims lacking supporting evidence or third-party verification. | Broad claims about carbon footprint reduction without data |
| Misleading Imagery/Graphics | Using visuals that evoke environmental responsibility but are not directly related to the product or company. | Images of nature for products with negative environmental impact |
| Selective Disclosure | Presenting only positive environmental information while omitting negative aspects. | Highlighting recycling efforts while ignoring waste generation |
| False/Exaggerated Claims | Making outright false or significantly exaggerated statements about environmental performance. | Claims of 100% renewable energy without proof |
| Irresponsible Marketing | Using environmental claims to mislead consumers about a product's or company's true environmental impact. | Promising "zero impact" for complex industrial processes |

The first subsection, "Conceptualizing ESG Compliance and Identifying Misinformation," characterizes greenwashing as a deceptive communication strategy that corporations use to misrepresent their environmental or sustainability efforts. It synthesizes various definitions, which share an emphasis on deliberately creating a misleading or false impression of environmental responsibility. The subsection explores the nuances arising from the lack of a universal definition of "green" and the exploitation of vague terminology. It further categorizes common types of greenwashing, such as vague language, irrelevant claims, and unsubstantiated statements, which collectively complicate automated detection. The discussion then turns to linguistic indicators of greenwashing, including the absence of specific commitments, non-specific language, and overly optimistic sentiment, and introduces frameworks such as the "Green Authenticity Index." This lays the groundwork for understanding the textual characteristics that NLP models must identify.

Following this conceptual overview, the subsection "The Landscape of ESG Reporting and Data Sources" describes the extensive scope of ESG reporting, from corporate sustainability reports to GHG emissions reports, and highlights the lack of standardization as a significant challenge. It reviews the evolving legal and regulatory landscape, including international frameworks such as the UNFCCC and regional initiatives such as the EU Green Claims Directive, which aim to establish clearer reporting requirements and reduce misleading claims. Crucially, this subsection outlines the diverse data sources used in greenwashing detection, encompassing corporate documents (e.g., 10-K filings, sustainability reports) and external sources (e.g., news articles, social media, ESG ratings). It also discusses the implications of using different data sources, noting that external data can provide a more holistic and critical perspective than internal reports alone.

Finally, the "Challenges in Identifying Greenwashing" subsection examines the inherent difficulties of detecting greenwashing. It identifies the primary obstacles as the pervasive subjectivity and ambiguity in defining greenwashing, the lack of standardization in ESG reporting, and the dynamic nature of misleading claims, which continuously evolve. It highlights how these challenges call for advanced NLP models capable of deep semantic understanding, contextual reasoning, and adaptation to unstructured and multimodal data, underscoring the need for robust, continuously learning AI systems. Together, these three subsections provide a comprehensive overview of the concepts, contexts, and complexities involved in understanding and addressing greenwashing in ESG disclosures, setting the stage for the subsequent discussion of NLP methods.

2.1 Conceptualizing ESG Compliance and Identifying Misinformation

Greenwashing, at its core, is a deceptive communication strategy that corporations use to misrepresent their environmental or sustainability efforts. Across studies, common threads emerge in its definition, typically revolving around intentionally misleading audiences or creating a false impression of environmental responsibility. One study, for instance, defines greenwashing as a deliberate attempt to mislead the public and investors through false or unverified environmental claims, distinguishing it from mere poor ESG performance accompanied by public commitments. This resonates with another definition, which describes greenwashing as "behavior or activities that make people believe that a company is doing more to protect the environment than it really is." Similarly, a third study characterizes it as the "deceptive portrayal of a firm's performance in environmental, social, or governance facets," emphasizing a discord between internal strategies and public perception.

Nuances in these definitions highlight the complexity of the phenomenon. Some definitions acknowledge unintentional greenwashing stemming from a lack of understanding or transparency, while others focus more broadly on misleading the public about carbon transition efforts. The absence of a universally accepted definition of "green" or "sustainable" adds further ambiguity, allowing companies to exploit vague terminology. This ambiguity is often used as a marketing strategy to capitalize on consumer demand for sustainability without genuine operational changes.

Common types of greenwashing observed in text include vague language, irrelevant information, false claims, and excessive use of ESG buzzwords, all of which pose significant challenges for automated detection. Vague language, such as "eco-friendly" or "net zero" used without clear definitions or evidence, is a recurring theme. Irrelevant claims are another type: one study points to labels such as "CFC-free," which advertise an attribute that is technically true but environmentally meaningless, because CFCs have long been banned by law. Hidden trade-offs, in which one positive environmental aspect is promoted while significant negative impacts are ignored (e.g., the energy consumed in producing recycled paper), are a further form.

Other forms of greenwashing include the use of unreliable sustainability labels, presenting legal requirements as distinctive features, making claims about an entire product when only part of it is environmentally friendly, and making unsubstantiated environmental claims. Misleading imagery and wording without supporting evidence, such as tree images for products that do not involve trees, is also a recognized manifestation. These manifestations make automated detection challenging because of the subjective nature of "misleading" information, the reliance on contextual understanding, and the difficulty of discerning intent.

To address the challenge of identifying misinformation, particularly greenwashing, researchers increasingly focus on classifying text segments against precise ESG activities. One proposed approach exemplifies this, framing the problem as classifying text segments by their relevance to specific ESG activities defined in the EU Taxonomy. The taxonomy provides a standardized framework for sustainable practices, enabling companies to evaluate and report their performance consistently. Although not aimed directly at greenwashing detection, this way of quantifying ESG communication can contribute indirectly to identifying misrepresentation by highlighting where reported claims deviate from established standards or lack supporting detail. Tools such as REPORTPARSE also help by extracting specific sustainability-related information, which can then be evaluated against corporate commitments.
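
Framing detection as segment-to-activity classification can be illustrated with a deliberately simple stand-in. The sketch below does not reproduce the LLM-based approach in the cited work; it swaps in bag-of-words cosine similarity between a text segment and short activity descriptions, and returns no label when nothing is similar enough. The activity keys and descriptions are hypothetical paraphrases, not official EU Taxonomy wording, and the 0.2 cut-off is an arbitrary assumption.

```python
import math
import re
from collections import Counter
from typing import Optional

# Hypothetical, heavily shortened activity descriptions (not official EU Taxonomy text).
ACTIVITIES = {
    "solar_pv": "electricity generation from solar photovoltaic panels",
    "low_carbon_transport": "low carbon vehicles electric buses and freight rail transport",
    "building_renovation": "renovation of existing buildings to improve energy performance insulation",
}


def bag_of_words(text: str) -> Counter:
    """Lower-cased alphabetic token counts (numbers and punctuation are dropped)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def classify_segment(segment: str, min_score: float = 0.2) -> Optional[str]:
    """Return the most similar activity key, or None if no activity is similar enough."""
    seg = bag_of_words(segment)
    scores = {name: cosine(seg, bag_of_words(desc)) for name, desc in ACTIVITIES.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] >= min_score else None


if __name__ == "__main__":
    print(classify_segment("We installed 40 MW of solar photovoltaic panels for electricity generation."))
    print(classify_segment("Our revenue grew strongly this year."))
```

The first segment maps to `solar_pv`, while the revenue sentence shares no vocabulary with any activity and returns `None`. Lexical overlap of this kind misses paraphrase and context, which is precisely why the surveyed work turns to fine-tuned LLMs.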

Specific linguistic indicators of greenwashing identified across the literature offer crucial input for automated detection. One study characterizes greenwashing language by four key attributes: absence of explicit climate-related commitments and actions, use of non-specific language, overly optimistic sentiment, and lack of evasive or hedging terms. These attributes, developed through a literature review and corroborated by experts, provide a preliminary mathematical formulation for quantifying greenwashing risk. Similarly, another study introduces the "Green Authenticity Index" (GAI), which quantifies the sincerity of corporate claims along two dimensions: Certainty (clarity, factuality, specificity) and Agreement (alignment between reported claims and external data). In this framework, vague statements are explicitly contrasted with precise commitments as indicators of greenwashing.
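
The four attributes above lend themselves to a simple rule-based score. The sketch below is illustrative only: the cited work's actual mathematical formulation is not reproduced here, so it assumes equal weights and binary indicators, and the small word lists (`COMMITMENT`, `VAGUE_TERMS`, `OPTIMISTIC_TERMS`, `HEDGING_TERMS`) are hypothetical placeholders for validated lexicons or trained classifiers. Each indicator is 1 when the corresponding greenwashing attribute is present, and the score is their mean.

```python
import re

# Hypothetical lexicons standing in for validated resources or trained classifiers.
COMMITMENT = re.compile(r"\b(?:target|pledge|by 20\d\d|reduce[sd]?)\b")
VAGUE_TERMS = {"eco-friendly", "green", "sustainable", "responsible", "natural"}
OPTIMISTIC_TERMS = {"proud", "leading", "excellent", "best", "outstanding"}
HEDGING_TERMS = {"may", "might", "could", "aim", "approximately"}


def greenwashing_risk(text: str) -> float:
    """Mean of four binary indicators; 1.0 means all four attributes are present."""
    lowered = text.lower()
    # Keep hyphenated compounds such as "eco-friendly" as single tokens.
    words = set(re.findall(r"[a-z]+(?:-[a-z]+)*", lowered))
    indicators = [
        COMMITMENT.search(lowered) is None,  # 1. no explicit commitment or action
        bool(words & VAGUE_TERMS),           # 2. non-specific language
        bool(words & OPTIMISTIC_TERMS),      # 3. overly optimistic sentiment
        not (words & HEDGING_TERMS),         # 4. no evasive or hedging terms
    ]
    return sum(indicators) / len(indicators)


if __name__ == "__main__":
    print(greenwashing_risk("We are proud to be a leading green and sustainable company."))
    print(greenwashing_risk("We aim to reduce Scope 1 emissions by 42% by 2030."))
```

The first sentence triggers all four indicators (score 1.0), whereas the second contains a concrete commitment, no vague or optimistic terms, and a hedge, so it scores 0.0. Binary indicators keep the sketch transparent, but a graded score per attribute would be a natural refinement.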

The challenge of identifying misinformation in ESG disclosures is further complicated by the nuanced ways in which companies present their sustainability efforts. One study conceptualizes greenwashing as a consequence of misaligned ESG reported metrics, in which low-quality metrics are used to influence investor perceptions. This approach proposes mapping textual and visual information onto sustainability axes, such as SDGs 14 and 15, to detect discrepancies. It aligns with the treatment of "cheap talk," "selective disclosure," "deceptive techniques," or "biased narrative" as components indicative of greenwashing in other work. The inherent difficulty lies in distinguishing deliberate deception from genuine but poorly articulated efforts. For instance, vague expressions such as "net zero" or "energy efficient" are subjective and do not necessarily indicate an intent to mislead, even when they lack direct evidence. The distinction between genuine sustainability claims and greenwashing therefore often hinges on the presence or absence of verifiable data, specific commitments, and alignment with external, objective standards. Hence, classifying text segments against precise ESG activities, guided by frameworks such as the EU Taxonomy, is a crucial step toward formalizing the assessment of corporate sustainability claims and, by extension, identifying potential greenwashing.
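
The criteria named above, specific and verifiable content on the one hand and alignment with external data on the other, mirror the two dimensions of the Green Authenticity Index, Certainty and Agreement. Those dimensions can be combined in many ways, and the cited formulation is not reproduced here. As a hedged sketch only, the Python code below assumes that each claim already carries sub-scores in [0, 1] for clarity, factuality, and specificity (from hypothetical upstream models or annotators) plus a flag recording whether external data corroborates it; it then multiplies mean Certainty by the share of corroborated claims. The `Claim` class and the product rule are assumptions, chosen so that precise-sounding but uncorroborated claims still score low.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    """One sustainability claim; sub-scores are assumed to lie in [0, 1]."""
    clarity: float
    factuality: float
    specificity: float
    corroborated: bool  # does independent external data support the claim?


def certainty(claim: Claim) -> float:
    """Unweighted mean of the three Certainty sub-scores (an assumption)."""
    return (claim.clarity + claim.factuality + claim.specificity) / 3


def green_authenticity_index(claims: list[Claim]) -> float:
    """Hypothetical aggregation: mean Certainty multiplied by Agreement."""
    if not claims:
        raise ValueError("no claims to score")
    mean_certainty = sum(certainty(c) for c in claims) / len(claims)
    agreement = sum(c.corroborated for c in claims) / len(claims)
    return mean_certainty * agreement


if __name__ == "__main__":
    claims = [Claim(1.0, 1.0, 1.0, True), Claim(0.5, 0.5, 0.5, False)]
    print(green_authenticity_index(claims))
```

For one fully specified, corroborated claim and one half-specified, uncorroborated claim, the index is 0.75 × 0.5 = 0.375. A company whose claims are all uncorroborated scores 0 however precise its wording.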

2.2 The Landscape of ESG Reporting and Data Sources

The identification of greenwashing requires a thorough understanding of the intricate landscape of Environmental, Social, and Governance (ESG) reporting and disclosure. ESG reporting encompasses a broad spectrum of corporate communications designed to convey a company's impact and performance across these dimensions. Key aspects relevant to greenwashing detection include the increasing volume and exhaustiveness of sustainability reports, which cover a wide array of ESG measures. These reports serve as fundamental tools for communicating environmental, social, and economic impacts and often span several document types, such as Corporate Social Responsibility (CSR) reports and Greenhouse Gas (GHG) emissions reports. Despite this growing volume, the lack of alignment and standardization in reported metrics poses a significant challenge, as it can facilitate misleading statements and make cross-company comparisons difficult. Because genuine sustainability claims are typically backed by quantifiable outcomes and consistent with external data, whereas greenwashing tends to lack both, verifying claims against independently audited information is essential.
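
Verifying a quantitative claim against independently audited figures is often simple arithmetic. In the hypothetical example below, a report claims a 30% emissions reduction, while audited inventories show a fall from 1,000,000 to 850,000 tonnes CO2e, which implies only (1,000,000 - 850,000) / 1,000,000 = 15%. The Python sketch encodes this check; the function names and the two-percentage-point tolerance are assumptions for illustration, and real verification must also reconcile emission scopes, organizational boundaries, and base years.

```python
def implied_reduction_pct(baseline_t: float, current_t: float) -> float:
    """Percentage reduction implied by baseline and current emissions (tonnes CO2e)."""
    if baseline_t <= 0:
        raise ValueError("baseline emissions must be positive")
    return 100.0 * (baseline_t - current_t) / baseline_t


def claim_consistent(claimed_pct: float, baseline_t: float, current_t: float,
                     tolerance_pct: float = 2.0) -> bool:
    """True if the claimed reduction is within `tolerance_pct` points of the audited one."""
    return abs(claimed_pct - implied_reduction_pct(baseline_t, current_t)) <= tolerance_pct


if __name__ == "__main__":
    print(implied_reduction_pct(1_000_000, 850_000))  # 15.0
    print(claim_consistent(30.0, 1_000_000, 850_000))  # False
```

A fixed absolute tolerance is the simplest choice; a relative tolerance, or one scaled to the audit's stated uncertainty, may be more appropriate in practice.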

The legal and regulatory context profoundly influences the nature of greenwashing and introduces considerable challenges in its identification. A lack of clear, universally enforced standards in ESG reporting has historically enabled companies to make ambiguous or exaggerated sustainability claims . However, global efforts are underway to mitigate this issue through a growing body of legislation and guidelines. Significant international frameworks include the United Nations Framework Convention on Climate Change (UNFCCC) and the Paris Agreement, which set overarching climate goals . At the corporate level, reporting is increasingly shaped by the frameworks of the Global Reporting Initiative (GRI), the Task Force on Climate-related Financial Disclosures (TCFD), and the International Sustainability Standards Board (ISSB), as well as by the European Sustainability Reporting Standards (ESRS) . The adoption of these standards is deemed indispensable for fostering a sustainable economy and meeting stakeholder expectations .

Regional and national regulatory initiatives are also emerging. These include the EU Green Claims Directive, the EU Corporate Sustainability Reporting Directive (CSRD), and the UK Sustainability Disclosure Requirements. In the United States they include Section 5 of the FTC Act, the SEC's climate-related disclosure rules, and the SEC's Climate and ESG Task Force . Asian countries such as Singapore are also implementing green labeling schemes . These regulations aim to establish clearer reporting requirements and hold companies accountable for their environmental claims, thereby reducing the prevalence of misleading statements. For instance, the EU Taxonomy provides a crucial benchmark for investors to assess sustainability. It shapes how non-financial disclosures (NFDs) and sustainability reports are structured, particularly within specific industries such as transportation . The evolving regulatory landscape underscores the legal, financial, and reputational risks associated with greenwashing, motivating companies and investors to prioritize accurate and verifiable disclosures .

Studies on greenwashing detection employ a diverse range of financial and sustainability texts as data sources, each with distinct implications for the scope and effectiveness of detection. Corporate sustainability reports are consistently identified as a primary data source . These documents, often hundreds of pages long and in PDF format, contain complex, unstructured data, varying in layout and disclosed items across companies . Tools like ReportParse are specifically designed to extract information from these intricate documents . Research also commonly leverages other official corporate disclosures, including 10-K filings, annual reports, policies, and executive statements from analyst calls . For instance, some studies specifically analyze Non-Financial Disclosures (NFDs) to detect ESG activities within specific industries, benchmarking them against established ESG taxonomies . The ClimateBERT dataset, which contains paragraphs from financial disclosures, has also been utilized for fine-tuning climate-related language models, with testing conducted on climate-related sections of sustainability reports .

Beyond official corporate documents, various external sources are incorporated to provide a more comprehensive view. News articles and press releases are frequently used, offering publicly available insights into corporate communications and public perception . Social media platforms like Twitter are also valuable, providing real-time, unstructured data and public sentiment that can reveal inconsistencies with official statements . Some research also integrates external data sources such as ESG ratings, independent audits, and verified emissions data to cross-reference corporate claims . The use of multimodal deep learning, which considers both textual and visual data, further expands the scope of analysis, recognizing that greenwashing can manifest in various forms of corporate messaging .

The implications of using different data sources are significant. Relying solely on internal corporate reports provides direct insight into a company's self-proclaimed ESG performance, but these documents may be carefully curated to present a favorable image, potentially masking greenwashing. For instance, the ClimateBERT dataset, derived from financial disclosures, offers a standardized basis for analyzing climate-related information but may not capture broader ESG nuances . Incorporating external data, such as social media and news articles, offers a more holistic and often more critical perspective, enabling researchers to identify discrepancies between corporate rhetoric and public perception or actual performance. The inclusion of independently verified data, such as audited emissions figures, is crucial for validating corporate claims and detecting instances where self-reported data might be misleading or incomplete . However, combining diverse data sources also introduces challenges in data collection, processing, and normalization. Some studies note these challenges and apply extensive preprocessing steps such as tokenization, stopword removal, and translation of non-English content . The breadth of data sources, from lengthy corporate reports to short, informal social media posts, signals a move towards more robust and comprehensive greenwashing detection methodologies. This breadth allows a multifaceted assessment of corporate sustainability claims and greater accuracy in identifying deceptive practices.
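
The sketch below shows minimal preprocessing for combining report text with social media posts. It assumes English input, since translation is out of scope here, and uses a deliberately tiny, hypothetical stopword list. Production pipelines would typically use a library tokenizer and a fuller stopword list.

```python
import re

# Tiny illustrative stopword list (an assumption; real lists are much longer).
STOPWORDS = {
    "a", "an", "and", "are", "by", "for", "from", "in", "is",
    "of", "on", "or", "our", "the", "to", "we", "with",
}

URL_RE = re.compile(r"https?://\S+")
MENTION_RE = re.compile(r"@\w+")
# Lowercase alphanumeric tokens, keeping internal hyphens/apostrophes ("net-zero").
TOKEN_RE = re.compile(r"[a-z0-9]+(?:[-'][a-z0-9]+)*")


def normalize_post(text: str) -> str:
    """Strip social-media debris (URLs, @mentions, '#') so posts resemble report prose."""
    text = URL_RE.sub(" ", text)
    text = MENTION_RE.sub(" ", text)
    return text.replace("#", " ")


def preprocess(text: str, stopwords: set = STOPWORDS) -> list:
    """Lowercase, tokenize, and drop stopwords."""
    tokens = TOKEN_RE.findall(text.lower())
    return [t for t in tokens if t not in stopwords]


report_tokens = preprocess("We are committed to a net-zero future by 2050.")
post_tokens = preprocess(normalize_post("Great #sustainability report from @AcmeCorp https://t.co/xyz"))
print(report_tokens, post_tokens)
```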

2.3 Challenges in Identifying Greenwashing

The detection of greenwashing is inherently challenging due to a confluence of factors, primarily stemming from the subjective definition of what constitutes misleading environmental claims, the pervasive lack of standardized reporting, and the dynamic, evolving nature of corporate communication .

One of the most prominent challenges is the inherent subjectivity and ambiguity surrounding the definition of "greenwashing" itself . This lack of a commonly accepted, actionable definition complicates the establishment of precise metrics and effective policies for detection and regulation . Certain attributes, such as positive sentiment and a lack of specificity, may indicate greenwashing. However, subtle nuances such as legal hedging can easily be misconstrued, making it difficult to quantify risk . Cases such as a company claiming carbon neutrality while producing disposable plastic products underscore this subjectivity . Furthermore, the legal and reputational consequences of false accusations, coupled with the often inconspicuous nature of greenwashing, add layers of complexity to its identification . The subjectivity also extends to cases where internal ESG sentiment diverges significantly from public opinion, which can suggest potential greenwashing, particularly in categories such as pollution and waste . This challenge calls for NLP models capable of nuanced contextual understanding rather than mere keyword matching. It drives advancements in techniques that can discern subtle linguistic cues and implied meanings, as discussed in Section 3.

Another critical impediment is the lack of standardization in ESG reporting. Corporate sustainability reports vary significantly in layout, design, and disclosed items, often presented in complex, unstructured PDF formats . This inconsistency, coupled with the sheer volume of information, overwhelms traditional rating systems and contributes to the use of low-quality metrics, which are indicative of greenwashing . The absence of comprehensive, reliable data about companies' actual environmental performance further hinders the reliability of AI systems designed for greenwashing detection . Moreover, green claims are frequently dispersed across multiple documents and data types, requiring extensive expertise and often debate for accurate annotation . This highlights the need for advanced parsing and information extraction techniques, such as those provided by platforms designed to handle diverse document structures and semantics . The integration of multimodal analysis, encompassing both textual and visual information, also becomes crucial given the varied sources of corporate communication .

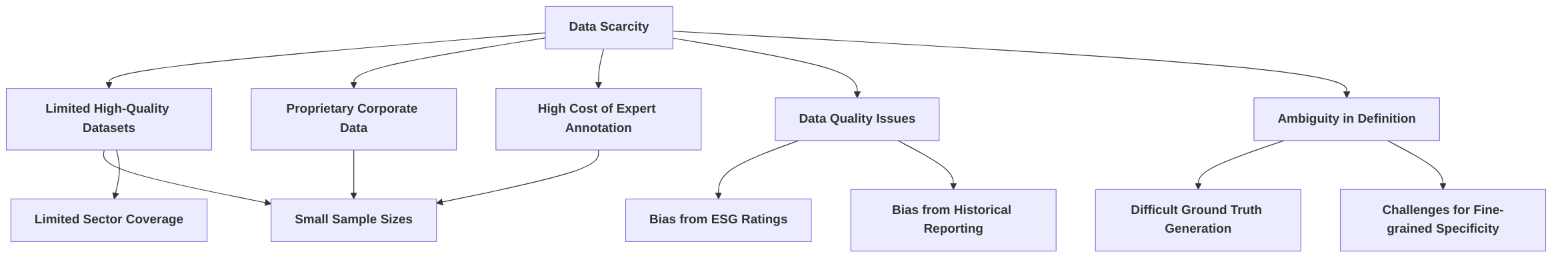

The dynamic nature of misleading claims presents a continuous challenge, requiring adaptable and context-aware NLP techniques . Companies constantly refine their communication strategies to appear environmentally responsible, which can quickly render static detection models obsolete . The difficulty Large Language Models (LLMs) face in achieving fine-grained specificity for ESG activities further exemplifies this adaptability challenge . General-purpose LLMs struggle to map text precisely onto the complex ESG taxonomy, necessitating extensive fine-tuning and the development of high-quality, domain-specific datasets . The scarcity of such annotated datasets is a significant hurdle, limiting the robustness and generalization capabilities of models across diverse, noisy real-world settings . This situation underscores the need for NLP methodologies that are not only robust but also capable of continuous learning and adaptation to evolving corporate narratives and reporting landscapes.

These inherent challenges directly necessitate specific NLP methodological advancements. The subjectivity of greenwashing demands models capable of deep semantic understanding and contextual reasoning, moving beyond simple keyword matching to identify subtle linguistic patterns and deceptive framing. The lack of standardization in reporting requires sophisticated document parsing, information extraction, and multimodal fusion techniques to handle diverse and unstructured data formats effectively. Lastly, the dynamic nature of misleading claims and the need for fine-grained specificity with LLMs highlight the urgent requirement for adaptable, continuously learning NLP systems. These systems must be capable of frequent updates, leveraging techniques like transfer learning and few-shot learning to remain effective against evolving greenwashing tactics. The transition from manual, labor-intensive identification to automated, AI-driven solutions is thus not merely a matter of efficiency but a critical necessity for overcoming these multifaceted challenges .

3. Natural Language Processing Methods for Greenwashing Detection

The detection of "greenwashing" in Environmental, Social, and Governance (ESG) disclosures has become a critical area of focus, driven by the increasing demand for corporate transparency and accountability in sustainability claims. Natural Language Processing (NLP) methods have evolved significantly to meet this challenge, offering increasingly sophisticated tools to unmask misleading narratives. This section provides a structured overview of these advancements, tracing the progression from foundational text analysis techniques to the cutting-edge capabilities of large language models and multimodal approaches.

The journey begins with Traditional NLP Approaches, which form the bedrock of text analysis for identifying greenwashing . These methods, including keyword matching, lexicon-based sentiment analysis, and topic modeling, leverage basic linguistic patterns and statistical properties of text. They offer interpretability and require less computational power. However, their limited grasp of context and nuanced language has necessitated the development of more advanced techniques. They operate on the assumption that greenwashing is detectable through explicit linguistic cues such as specific keywords or shifts in sentiment, often relying on hand-crafted features. Their data requirements are comparatively low, and they are typically applied to text documents such as sustainability reports. However, their rigidity, proneness to false positives and negatives, and struggle with polysemy and synonymy underscore the need for more adaptable models capable of discerning subtle deceptive tactics.

Building upon these foundations, Advanced Neural Network Architectures like Convolutional Neural Networks (CNNs) and Long Short-Term Memory networks (LSTMs) represent a significant leap forward . These models automate feature extraction, capturing complex patterns and long-range dependencies within textual data. Unlike traditional methods, deep learning models assume that abstract features indicative of greenwashing can be automatically learned from large datasets, reducing reliance on manual feature engineering. However, this shift increases the demand for substantial, high-quality labeled datasets for training. The emergence of Transformer-based architectures, such as BERT and its variants, has further revolutionized this space, largely superseding LSTMs and CNNs by leveraging attention mechanisms to capture global dependencies more effectively. These models signify a move towards capturing deeper semantic meanings and contextual relationships beyond superficial lexical analysis.

The concept of Transfer Learning and Pre-trained Models revolutionized NLP by enabling models to leverage knowledge acquired from vast general or domain-specific corpora (e.g., ClimateBERT, FinBERT-ESG-9-Categories) and fine-tune them on smaller, task-specific datasets . This approach significantly mitigates data scarcity issues in specialized domains like ESG, improving efficiency and accuracy in greenwashing identification. The underlying assumption is that general linguistic knowledge is transferable and adaptable, leading to robust performance even with limited target data. Compared to training models from scratch, transfer learning offers reduced computational costs and time, while enhancing performance by leveraging extensive pre-trained knowledge.

Currently, Specialized and Large Language Models (LLMs) and Their Applications represent the frontier of greenwashing detection . These models, including generalist LLMs and their domain-adapted variants (e.g., ClimateGPT), offer unparalleled contextual understanding and reasoning capabilities. They enable the detection of highly sophisticated greenwashing through advanced sentiment analysis, Named Entity Recognition (NER), topic modeling, and relationship extraction. Fine-tuning, especially with techniques like Low-Rank Adaptation (LoRA), has proven highly effective, often outperforming zero-shot approaches for nuanced greenwashing detection . However, LLMs present challenges related to interpretability ("black box" nature), potential for hallucination, and prompt sensitivity, requiring robust validation frameworks and human oversight.
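
LoRA freezes the pre-trained weights and learns only a low-rank correction to them. The NumPy sketch below shows the idea for a single weight matrix. The 768-dimensional size (the hidden size of BERT-base), rank r=4, and alpha=8 are illustrative choices. Real fine-tuning would place such adapters inside a model's layers, typically the attention projections, using a deep learning framework.

```python
import numpy as np


class LoRALinear:
    """A frozen pre-trained weight W0 plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, W0: np.ndarray, r: int = 4, alpha: float = 8.0, seed: int = 0):
        rng = np.random.default_rng(seed)
        d_out, d_in = W0.shape
        self.W0 = W0                                    # frozen: never updated during fine-tuning
        self.A = rng.normal(0.0, 0.01, size=(r, d_in))  # trainable, Gaussian init
        self.B = np.zeros((d_out, r))                   # trainable, zero init: update starts at 0
        self.scale = alpha / r

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # Equivalent to (W0 + scale * B @ A) @ x without materializing the full update.
        return self.W0 @ x + self.scale * (self.B @ (self.A @ x))

    def trainable_params(self) -> int:
        return self.A.size + self.B.size

    def merged_weight(self) -> np.ndarray:
        """Fold the adapter into W0 for inference, so no extra latency is added."""
        return self.W0 + self.scale * (self.B @ self.A)


rng = np.random.default_rng(1)
W0 = rng.normal(size=(768, 768))  # stands in for one pre-trained projection matrix
layer = LoRALinear(W0, r=4)
x = rng.normal(size=768)
print(layer.trainable_params(), "trainable vs", W0.size, "frozen")  # 6144 vs 589824
```

Because `B` starts at zero, the adapted layer initially behaves exactly like the pre-trained one, and only about 1% as many parameters are trained as in the full matrix.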

Finally, Multimodal Approaches integrate textual and visual information to provide a more holistic assessment of corporate communication . This approach assumes that greenwashing is a multifaceted phenomenon expressed across various communication channels. By combining insights from different data types, these methods aim to capture inconsistencies and contradictions that purely text-based methods might miss. While offering a more comprehensive view, multimodal approaches introduce increased complexity in data acquisition, model design (e.g., effective feature fusion), and interpretability due to the integration of disparate data types.
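
The two most common fusion designs can be sketched briefly. Early fusion joins features before classification, while late fusion combines per-modality predictions. The embeddings and scores are assumed to come from separate, hypothetical text and image models, and the equal 0.5 weighting is arbitrary.

```python
import numpy as np


def l2_normalize(v: np.ndarray) -> np.ndarray:
    norm = np.linalg.norm(v)
    return v / norm if norm > 0 else v


def early_fusion(text_emb: np.ndarray, image_emb: np.ndarray) -> np.ndarray:
    """Concatenate per-modality embeddings into one feature vector for a joint classifier.

    Normalizing first keeps one modality from dominating purely through scale.
    """
    return np.concatenate([l2_normalize(text_emb), l2_normalize(image_emb)])


def late_fusion(text_score: float, image_score: float, text_weight: float = 0.5) -> float:
    """Weighted average of separately predicted greenwashing-risk scores in [0, 1]."""
    return text_weight * text_score + (1.0 - text_weight) * image_score


def modality_gap(text_score: float, image_score: float) -> float:
    """Disagreement between the two modality-specific predictions; large gaps may merit review."""
    return abs(text_score - image_score)


fused = early_fusion(np.array([3.0, 4.0]), np.array([0.0, 2.0, 0.0]))
print(fused, late_fusion(0.2, 0.8), modality_gap(0.2, 0.9))
```

Early fusion lets a model learn cross-modal interactions but needs paired training data. Late fusion keeps the modalities separable, which makes a disagreement between them easier to inspect.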

The progression through these NLP techniques illustrates a clear trend towards more sophisticated, context-aware, and data-intensive methods, reflecting the increasing complexity of greenwashing tactics and the ongoing efforts to enhance detection accuracy and robustness. Each advancement builds upon its predecessors, addressing limitations and offering more nuanced insights into deceptive corporate sustainability claims.

3.1 Key NLP Techniques and Methodologies

This section provides a comprehensive overview of the Natural Language Processing (NLP) techniques and methodologies employed for detecting "greenwashing" in ESG disclosures. Greenwashing, the deceptive practice of making unsubstantiated claims about environmental friendliness, poses a significant challenge to corporate transparency and sustainable finance. The evolution of NLP methods, from traditional statistical approaches to advanced deep learning and multimodal models, has progressively enhanced the ability to unmask these misleading narratives.

The discussion begins with Traditional NLP Approaches, which form the bedrock of text analysis for greenwashing detection. These methods, including keyword matching, lexicon-based sentiment analysis, and topic modeling, leverage basic linguistic patterns and statistical properties of text to identify potential deceptive claims . While offering high interpretability and requiring less computational power, their limitations in understanding context and nuanced language have spurred the development of more sophisticated techniques.

Building upon these foundations, Advanced Neural Network Architectures such as Convolutional Neural Networks (CNNs) and Long Short-Term Memory networks (LSTMs) are explored. These models represent a significant leap forward by automatically extracting complex features and capturing long-range dependencies within textual data . Their ability to learn intricate patterns without extensive manual feature engineering has improved the detection of subtle greenwashing tactics.

The section then delves into Transfer Learning and Pre-trained Models, a paradigm-shifting approach that has revolutionized NLP. This methodology leverages models pre-trained on vast general or domain-specific corpora (e.g., ClimateBERT, FinBERT-ESG-9-Categories) and fine-tunes them on smaller, task-specific datasets . This approach significantly mitigates data scarcity issues in specialized domains like ESG, leading to improved efficiency and accuracy in greenwashing identification.

Finally, Specialized and Large Language Models (LLMs) and Their Applications are discussed as the current frontier in NLP for greenwashing detection. These models, including generalist LLMs and their domain-adapted variants, offer unparalleled contextual understanding and reasoning capabilities, enabling the detection of highly sophisticated greenwashing through advanced sentiment analysis, Named Entity Recognition (NER), topic modeling, and relationship extraction . While powerful, LLMs present challenges related to interpretability, potential for hallucination, and prompt sensitivity.

The concluding part of the section will introduce Multimodal Approaches, which integrate textual and visual information to provide a more holistic assessment of corporate communication. By combining insights from various data types, these approaches aim to capture inconsistencies and contradictions that purely text-based methods might miss, addressing the multifaceted nature of greenwashing .


3.1.1 Traditional NLP Approaches

Traditional Natural Language Processing (NLP) techniques constitute the foundational layer for identifying greenwashing within Environmental, Social, and Governance (ESG) disclosures, leveraging Artificial Intelligence (AI) and advanced analytics to unmask misleading claims . These methods typically involve basic text analysis such as keyword matching, lexicon-based approaches, rule-based systems, and classical machine learning with hand-crafted features . Early work in this domain often relied on hand-selected keywords to filter climate-related text from company reports, aiming to flag terms like "carbon neutral" or "recyclable" for subsequent human review .
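
The keyword-filtering step can be sketched in a few lines. The watch-list below is hypothetical, and the code only surfaces candidate sentences for human review. It does not judge whether a claim is true.

```python
import re

# Hypothetical watch-list; a real study would curate this with domain experts.
GREEN_TERMS = ["carbon neutral", "net zero", "recyclable", "eco-friendly", "100% renewable"]


def build_pattern(terms):
    """Compile one case-insensitive regex; words may be separated by spaces or hyphens."""
    parts = [r"[\s-]+".join(re.escape(word) for word in term.split()) for term in terms]
    return re.compile(r"\b(?:" + "|".join(parts) + r")\b", re.IGNORECASE)


def flag_sentences(sentences, pattern=None):
    """Return (index, sentence, matched terms) for each sentence needing human review."""
    pattern = pattern or build_pattern(GREEN_TERMS)
    flagged = []
    for i, sentence in enumerate(sentences):
        hits = [m.group(0).lower() for m in pattern.finditer(sentence)]
        if hits:
            flagged.append((i, sentence, hits))
    return flagged


report = [
    "Our main plant is now carbon-neutral.",
    "All packaging will be recyclable by 2030.",
    "Revenue grew 8% year on year.",
]
for i, sentence, hits in flag_sentences(report):
    print(i, hits)
```

The matcher is blind to context. A negated sentence such as "we are not yet carbon neutral" would be flagged just like an unqualified claim, which is the rigidity that limits such methods.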

Sentiment analysis is a primary application of NLP in greenwashing detection, offering insights into the emotional undertones of corporate communications. For instance, studies have used TextBlob, a lexicon-based sentiment analysis library, to assign polarity scores ranging from -1 (negative) to +1 (positive) to companies' internal ESG declarations . This allows researchers to gauge the general tone of these declarations and compare it with public sentiment on social platforms such as Twitter. Discrepancies between internal corporate sentiment and external public opinion, identified through correlation analysis, can indicate greenwashing . For example, a company might present an overwhelmingly positive self-assessment in its official reports while public discourse reflects skepticism or negative sentiment regarding its environmental claims.
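
The sketch below follows that design with two stated substitutions. A toy, hypothetical polarity lexicon replaces TextBlob, and the Pearson correlation is computed by hand. The resulting polarity scores stay on the same -1 to +1 scale. The topic labels and example sentences are invented for illustration.

```python
import re
from math import sqrt

# Toy polarity lexicon standing in for a full resource such as TextBlob's;
# words and weights are illustrative assumptions.
LEXICON = {
    "committed": 0.5, "leading": 0.6, "proud": 0.8, "sustainable": 0.4, "progress": 0.5,
    "misleading": -0.7, "fake": -0.8, "pollution": -0.5, "failed": -0.6, "doubt": -0.4,
}


def polarity(text: str) -> float:
    """Mean polarity of lexicon words found in the text, in [-1, 1]; 0.0 if none match."""
    scores = [LEXICON[w] for w in re.findall(r"[a-z]+", text.lower()) if w in LEXICON]
    return sum(scores) / len(scores) if scores else 0.0


def mean(values):
    return sum(values) / len(values)


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / (sx * sy)


def topic_polarity(texts_by_topic, topics):
    """Mean polarity per ESG topic, in the given topic order."""
    return [mean([polarity(t) for t in texts_by_topic[k]]) for k in topics]


internal = {
    "emissions": ["We are committed to leading progress."],
    "waste": ["We are proud of our sustainable progress."],
    "water": ["Progress on water use failed this year."],
}
public = {
    "emissions": ["Real progress, credit where due."],
    "waste": ["Fake claims, the pollution is everywhere."],
    "water": ["I doubt the water data."],
}
topics = sorted(internal.keys() & public.keys())
own, crowd = topic_polarity(internal, topics), topic_polarity(public, topics)
r = pearson(own, crowd)  # low or negative r: company and public tone diverge
gaps = {k: o - c for k, o, c in zip(topics, own, crowd)}
print(round(r, 2), max(gaps, key=gaps.get))  # "waste" shows the largest gap
```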

Topic modeling further aids in greenwashing detection by identifying the prevalent subjects discussed within ESG reports. By analyzing the frequency and co-occurrence of words, topic modeling algorithms can reveal what companies are genuinely emphasizing in their disclosures . Discrepancies between espoused values and actual textual content, or an excessive prevalence of "buzzwords" without substantive action, can signal greenwashing. For instance, if a company frequently uses terms like "sustainability" and "eco-friendly" but its reports lack specific details on initiatives, investments, or measurable outcomes, it could indicate a greenwashing attempt. Some research suggests that even simpler baselines, such as TF-IDF (Term Frequency-Inverse Document Frequency), can achieve competitive performance in certain greenwashing detection tasks, occasionally outperforming more complex Transformer models, which implies that specific topics might possess highly distinguishable vocabularies that these methods can effectively capture .
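
One way to build a TF-IDF baseline is a nearest-centroid classifier over L2-normalized TF-IDF vectors. The dependency-free sketch below uses invented training sentences and the smoothed IDF formula that scikit-learn applies by default. The cited studies may pair TF-IDF features with other classifiers, such as logistic regression.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


def fit_idf(docs):
    """Smoothed IDF, idf(t) = ln((1 + n) / (1 + df(t))) + 1 (the scikit-learn default form)."""
    df = Counter()
    for doc in docs:
        df.update(set(tokenize(doc)))
    n = len(docs)
    return {term: math.log((1 + n) / (1 + count)) + 1 for term, count in df.items()}


def tfidf(text, idf):
    """L2-normalized TF-IDF vector as a sparse dict; unseen terms are ignored."""
    counts = Counter(t for t in tokenize(text) if t in idf)
    vec = {t: c * idf[t] for t, c in counts.items()}
    norm = math.sqrt(sum(v * v for v in vec.values()))
    return {t: v / norm for t, v in vec.items()} if norm else {}


def cosine(a, b):
    # Both vectors are unit length, so the dot product equals cosine similarity.
    return sum(v * b.get(t, 0.0) for t, v in a.items())


class NearestCentroid:
    """Assign a text to the class whose mean TF-IDF vector is most similar."""

    def fit(self, texts, labels):
        self.idf = fit_idf(texts)
        sums = {}
        for text, label in zip(texts, labels):
            sums.setdefault(label, Counter()).update(tfidf(text, self.idf))
        self.centroids = {}
        for label, acc in sums.items():
            norm = math.sqrt(sum(v * v for v in acc.values()))
            self.centroids[label] = {t: v / norm for t, v in acc.items()}
        return self

    def predict(self, text):
        vec = tfidf(text, self.idf)
        return max(self.centroids, key=lambda label: cosine(vec, self.centroids[label]))


# Invented toy examples of vague vs. specific environmental claims.
train_texts = [
    "we are passionate about a greener sustainable eco friendly future",
    "our eco friendly vision embraces sustainability for the planet",
    "scope one emissions fell by twelve percent verified by auditors",
    "we installed solar capacity and cut scope two emissions by tonnes",
]
train_labels = ["vague", "vague", "specific", "specific"]
model = NearestCentroid().fit(train_texts, train_labels)
print(model.predict("a sustainable eco friendly future for the planet"))
```

The distinct vocabularies of the two classes are what make such a simple baseline competitive on some tasks. The same property makes it brittle when greenwashed text borrows the vocabulary of specific claims.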

Traditional NLP methods generally assume that greenwashing manifests through identifiable linguistic patterns, keyword usage, or shifts in sentiment. Their data requirements are lower than those of deep learning approaches, and they are typically applied to text sources such as sustainability reports, press releases, and social media posts . These methods also offer high interpretability: keyword lists and sentiment scores are directly understandable, making it straightforward to see why a particular document was flagged. Foundational tools such as PyMuPDF for text extraction and spaCy for sentence segmentation are often prerequisites for these analyses. They turn unstructured PDF reports into text that can be processed systematically .

Despite their utility and interpretability, traditional NLP approaches have inherent limitations that have motivated the development of more advanced techniques. Keyword matching and lexicon-based methods are often rigid and prone to false positives or negatives due to their inability to understand context, irony, or subtle nuances in language. For instance, a company genuinely engaged in sustainable practices might use many "green" keywords, leading to a false positive, while a sophisticated greenwasher might employ evasive language that bypasses simple keyword filters. Rule-based systems, while more flexible, require extensive manual effort to create and maintain rules, making them less scalable for the vast and evolving landscape of corporate disclosures. Furthermore, these methods often struggle with polysemy (words with multiple meanings) and synonymy (different words with the same meaning), which can lead to imprecise detection. The inability to capture complex semantic relationships and deeper contextual meaning is a significant drawback. This limitation becomes particularly pronounced when identifying sophisticated greenwashing tactics that rely on vague language, misdirection, or the omission of crucial information rather than direct falsehoods. The need to move beyond simple pattern detection and develop more nuanced, domain-specific models, capable of understanding the context and intent behind corporate statements, has driven the shift towards deep learning approaches. While some studies show that unsupervised text mining and keyword identification can serve as foundational elements, they implicitly highlight the necessity for more sophisticated methodologies that can learn intricate patterns and relationships from large datasets .

3.1.2 Advanced Neural Network Architectures

The application of advanced neural network architectures, particularly deep learning models, has significantly enhanced the detection of greenwashing in ESG disclosures. These architectures, including Convolutional Neural Networks (CNNs) and Long Short-Term Memory networks (LSTMs), exhibit strengths in feature extraction and pattern recognition within complex text data, moving beyond the limitations of traditional rule-based or simple machine learning methods .

Initially, traditional NLP methods often relied on lexicons, keyword matching, or shallow machine learning algorithms (e.g., SVM, Naive Bayes) for greenwashing detection. While these methods offered interpretability due to their transparent feature engineering, they struggled with capturing nuanced semantic relationships, contextual dependencies, and implicit deceptive language prevalent in greenwashing practices. Their effectiveness was often limited by the quality and exhaustiveness of predefined rules or features, requiring extensive domain expertise and manual effort.

Deep learning architectures, conversely, offer an end-to-end learning approach, automatically extracting hierarchical features from raw text data. LSTMs, a type of Recurrent Neural Network (RNN), are particularly adept at processing sequential data, making them suitable for capturing long-range dependencies and contextual information within text. Their ability to remember information over long sequences through their gate mechanisms (input, forget, output gates) allows them to model the temporal flow of language, which is crucial for identifying complex narrative inconsistencies or subtle linguistic cues associated with greenwashing. For instance, LSTMs can discern how specific claims are presented in relation to broader corporate narratives, highlighting discrepancies that might indicate misleading practices.
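
The gate mechanism can be made concrete with a single LSTM cell written in NumPy. The weights here are random placeholders. In a trained detector they would be learned, and the final hidden state would feed a classifier. The gate ordering within the stacked weight matrices is a convention chosen for this sketch.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x, h, c, W, U, b):
    """One LSTM time step.

    x: (d_in,) token embedding; h, c: (d_h,) previous hidden and cell states.
    W: (4*d_h, d_in), U: (4*d_h, d_h), b: (4*d_h,), with gate blocks stacked
    in the order input, forget, output, candidate.
    """
    d_h = h.shape[0]
    z = W @ x + U @ h + b
    i = sigmoid(z[:d_h])             # input gate: how much new information to write
    f = sigmoid(z[d_h:2 * d_h])      # forget gate: how much of the old cell state to keep
    o = sigmoid(z[2 * d_h:3 * d_h])  # output gate: how much of the cell state to expose
    g = np.tanh(z[3 * d_h:])         # candidate values to write
    c_new = f * c + i * g
    h_new = o * np.tanh(c_new)
    return h_new, c_new


def lstm_encode(xs, W, U, b):
    """Run the cell over a sequence and return the final hidden state as a summary vector."""
    d_h = U.shape[1]
    h, c = np.zeros(d_h), np.zeros(d_h)
    for x in xs:
        h, c = lstm_step(x, h, c, W, U, b)
    return h


rng = np.random.default_rng(0)
d_in, d_h, seq_len = 8, 4, 5
W = rng.normal(scale=0.1, size=(4 * d_h, d_in))
U = rng.normal(scale=0.1, size=(4 * d_h, d_h))
b = np.zeros(4 * d_h)
tokens = rng.normal(size=(seq_len, d_in))  # stands in for five embedded tokens
summary = lstm_encode(tokens, W, U, b)
print(summary)
```

Because the summary depends on the order in which tokens arrive, the same words in a different sequence produce a different representation.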

CNNs, although originally developed for image processing, have also found utility in text classification. In NLP, CNNs identify local patterns (n-grams) within text through filters of various sizes, effectively capturing phrases or segments that indicate specific themes or sentiments. The same filters slide across the whole sequence and their responses are typically max-pooled, so CNNs can detect relevant textual patterns regardless of where they occur in a document. The strength of CNNs lies in their capacity for parallel computation and efficient feature extraction, making them suitable for identifying recurring deceptive phrases or structural anomalies in ESG reports.
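
A text CNN of the kind described above can be sketched in NumPy. The filter weights are random placeholders, and the widths 2 to 4 are a common but arbitrary choice. Max-over-time pooling is what makes each feature independent of where its n-gram occurs.

```python
import numpy as np


def conv_max_pool(emb, filters, bias):
    """Convolve n-gram filters over token embeddings, apply ReLU, then max-pool over time.

    emb: (seq_len, d); filters: (n_filters, k, d) for window size k; bias: (n_filters,).
    Returns one value per filter: its strongest response anywhere in the text.
    """
    _, k, _ = filters.shape
    n_windows = emb.shape[0] - k + 1
    if n_windows < 1:
        raise ValueError("text is shorter than the filter width")
    windows = np.stack([emb[t:t + k] for t in range(n_windows)])  # (n_windows, k, d)
    acts = np.einsum("wkd,fkd->wf", windows, filters) + bias      # (n_windows, n_filters)
    return np.maximum(acts, 0.0).max(axis=0)


def text_cnn_features(emb, filter_banks):
    """Concatenate pooled features from several filter widths (bi-, tri-, 4-grams here)."""
    return np.concatenate([conv_max_pool(emb, F, bias) for F, bias in filter_banks])


rng = np.random.default_rng(0)
d = 16
banks = [(rng.normal(size=(5, k, d)), np.zeros(5)) for k in (2, 3, 4)]
doc = rng.normal(size=(12, d))  # twelve embedded tokens
features = text_cnn_features(doc, banks)  # 3 widths x 5 filters = 15 features
print(features.shape)
```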

However, the primary advancement in greenwashing detection using neural networks has been driven by Transformer-based architectures, such as BERT and its variants (e.g., RoBERTa, DistilBERT), which have largely superseded LSTMs and CNNs in many complex NLP tasks . These models leverage the attention mechanism, allowing them to weigh the importance of different words in a sequence when processing other words, thereby capturing global dependencies more effectively than LSTMs. The survey by Sun et al. highlights the prominence of Transformer-based architectures for tasks like topic detection, risk classification, and claim detection in greenwashing contexts, noting their high performance when sufficient labeled data is available .
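
The attention operation at the core of these models, softmax(QK^T/√d_k)V as defined in the original Transformer architecture, is short enough to sketch directly. The projection matrices below are random placeholders for learned weights. The example is single-head and has no positional encoding.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(Q, K, V, mask=None):
    """softmax(Q K^T / sqrt(d_k)) V.

    Q: (n_q, d_k), K: (n_k, d_k), V: (n_k, d_v); mask: optional boolean (n_q, n_k),
    True where attention is allowed. Returns (output (n_q, d_v), weights (n_q, n_k)).
    """
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)
    if mask is not None:
        scores = np.where(mask, scores, -1e9)  # block padded or disallowed positions
    weights = softmax(scores, axis=-1)
    return weights @ V, weights


# Self-attention over six embedded tokens: every token attends to every other in one step.
rng = np.random.default_rng(0)
X = rng.normal(size=(6, 8))
Wq, Wk, Wv = (rng.normal(scale=0.3, size=(8, 8)) for _ in range(3))
out, attn = scaled_dot_product_attention(X @ Wq, X @ Wk, X @ Wv)

# Treat the last token as padding that no position may attend to.
pad_mask = np.ones((6, 6), dtype=bool)
pad_mask[:, -1] = False
_, masked_attn = scaled_dot_product_attention(X @ Wq, X @ Wk, X @ Wv, mask=pad_mask)
print(attn.round(2))
```

An LSTM must pass information step by step through its recurrent state. Here the weight matrix relates every pair of positions directly, which is why distant dependencies are easier to capture.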

The underlying assumptions of these deep learning models differ significantly from traditional methods. Traditional methods often assume that greenwashing can be identified through a predefined set of keywords or simple semantic rules, requiring explicit feature engineering. In contrast, deep learning models operate on the assumption that complex, abstract features indicative of greenwashing can be automatically learned from large datasets through multi-layered neural networks. This shift reduces reliance on manual feature engineering but increases the demand for large, high-quality labeled datasets for training.

Data requirements for advanced neural networks are substantial. Unlike traditional methods that can perform adequately with smaller, meticulously curated datasets, deep learning models, especially Transformer-based ones, require vast amounts of text data for pre-training to learn general language representations. For example, specialized models like FinBERT-ESG-9-Categories, based on the BERT architecture, are pre-trained on extensive financial text corpora to capture the nuances of financial documents relevant to ESG themes, including Climate Change, Natural Capital, and Corporate Governance . Similarly, models like ClimateBERT are fine-tuned on climate-related texts specifically for greenwashing risk classification . The development of domain-specific models and the necessity for pretraining NLP models on climate-related corpora are crucial for achieving accurate performance in climate-related tasks . This contrasts with traditional methods, which might require less raw data but more human expertise for feature definition.

Interpretability remains a challenge for deep learning models compared to traditional methods. While traditional methods offer clear insights into why a particular classification was made (e.g., specific keywords triggered a rule), the complex, non-linear transformations within deep neural networks make their decision-making processes less transparent. Researchers are actively working on explainable AI (XAI) techniques to mitigate this, but it remains a critical difference.

These advanced architectures significantly improved upon traditional techniques by moving beyond superficial lexical analysis to capture deeper semantic meanings and contextual relationships. For instance, one tool for extracting document structure and semantics from corporate sustainability reports incorporates "valuable third-party models" such as DistilBERT-SST2 for sentiment analysis. This illustrates how sophisticated neural networks are being integrated into broader NLP tooling for sustainability reporting . Furthermore, the move to pre-trained Transformer architectures such as BERT represents a paradigm shift from traditional methods . These models are pre-trained on vast text datasets, learning rich, general-purpose language representations, which are then fine-tuned on smaller, task-specific datasets for greenwashing detection. This transfer learning approach leverages the extensive knowledge embedded in large pre-trained models, significantly reducing the data requirements for fine-tuning and improving performance compared to training models from scratch.

Moreover, the advancement of these architectures has led to specialized applications, such as multimodal deep learning that integrates both text and visual information for quantifying sustainability messaging . While some studies focus on developing custom models like ClimateQA for question-answering systems in sustainability reports , the general trend leans towards leveraging existing powerful language models. The evolution from simple neural networks to sophisticated Transformer-based Large Language Models (LLMs) represents a leap in NLP capabilities, offering unparalleled contextual understanding and generation abilities. These LLMs, discussed in detail in subsequent sections, represent the current frontier, further advancing greenwashing detection by providing even more nuanced understanding of complex linguistic patterns and deceptive strategies. They build upon the strengths of earlier deep learning architectures, particularly in representation learning and pattern recognition, while offering enhanced capabilities through their massive scale and emergent reasoning abilities.

3.1.3 Transfer Learning and Pre-trained Models

The application of transfer learning and pre-trained models has emerged as a pivotal advancement in the analysis of specialized ESG text, particularly for identifying "greenwashing." This approach leverages models pre-trained on vast datasets, allowing them to capture intricate linguistic patterns and semantic nuances relevant to corporate disclosures. A key benefit of transfer learning is its ability to significantly improve efficiency and accuracy in greenwashing detection, especially in scenarios characterized by limited annotated data .

Domain-specific pre-trained models, such as ClimateBERT, FinBERT-ESG-9-Categories, ClimateGPT-2, and EnvironmentalBERT, are particularly instrumental in this context . ClimateBERT, for instance, has been trained on over 1.6 million climate-related paragraphs, equipping it with a specialized understanding of climate-specific terminology and discourse . This domain adaptation matters because general-purpose language models, while powerful, may lack the nuanced understanding required to discern subtle forms of greenwashing embedded in highly specialized ESG reports and communications. The REPORTPARSE tool, for example, explicitly uses NLP models tailored to the climate change and sustainability domain, integrating annotators built on existing models for climate policy engagement and climate sentiment . Similarly, pre-training separate E, S, and G models on large corpora, in one case more than 13.8 million texts, further illustrates how transfer learning can enhance transparency and accuracy in ESG evaluation .
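Domain adaptation of this kind is commonly carried out by continuing masked-language-model (MLM) pre-training on in-domain text. The sketch below shows the standard BERT-style masking step: roughly 15% of positions are selected, and of those 80% are replaced with a mask token, 10% with a random token, and 10% left unchanged. It is a minimal illustration under stated assumptions, not ClimateBERT's actual training code; the toy token IDs, the `MASK_ID` value, and the function name `mask_tokens` are hypothetical, and real pipelines operate on subword IDs from the model's own tokenizer.

```python
import random
from typing import List, Tuple

IGNORE_INDEX = -100  # label meaning "no loss at this position" (a common convention)
MASK_ID = 4          # hypothetical ID of the mask token in a toy vocabulary


def mask_tokens(
    token_ids: List[int],
    vocab_size: int,
    mask_prob: float = 0.15,
    seed: int = 0,
) -> Tuple[List[int], List[int]]:
    """Build (inputs, labels) for one BERT-style masked-language-model example.

    Each position is selected with probability `mask_prob`. A selected
    position becomes MASK_ID 80% of the time, a random token 10% of the time,
    and stays unchanged 10% of the time. Labels keep the original token at
    selected positions and IGNORE_INDEX elsewhere, so the training loss is
    computed only where the model must recover a token.
    """
    rng = random.Random(seed)
    inputs = list(token_ids)
    labels = [IGNORE_INDEX] * len(token_ids)
    for i, tok in enumerate(token_ids):
        if rng.random() >= mask_prob:
            continue  # not selected: no prediction target at this position
        labels[i] = tok
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = MASK_ID                     # 80%: mask token
        elif roll < 0.9:
            inputs[i] = rng.randrange(vocab_size)   # 10%: random token
        # remaining 10%: keep the original token in the input
    return inputs, labels


if __name__ == "__main__":
    sentence = [12, 57, 203, 9, 88, 341, 17, 5, 260, 73]  # toy token IDs
    inp, lab = mask_tokens(sentence, vocab_size=500, mask_prob=0.3, seed=1)
    print(inp)
    print(lab)
```

Training on many such masked examples drawn from climate or ESG text is one way a general encoder can absorb domain vocabulary before any task-specific fine-tuning.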

The process typically involves fine-tuning these pre-trained models on smaller, task-specific datasets. For instance, fine-tuning the entire ClimateBERT model, rather than freezing some of its layers (e.g., the RoBERTa layers), has yielded higher mean validation accuracy and F1 scores in greenwashing detection . This suggests that letting every layer adjust to the nuances of the target task improves the model's ability to capture relevant information. Fine-tuning also contrasts with zero-shot learning, in which models are applied directly without further training; for ESG activity detection, fine-tuning has been shown to improve classification accuracy significantly . The underlying assumption is that knowledge acquired during pre-training on a large general or domain-specific corpus can be transferred and adapted to new, related tasks, even when target data are limited.
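The difference between freezing pre-trained layers and fine-tuning all of them can be illustrated at toy scale. The sketch below trains a tiny two-layer linear model (an "encoder" matrix `W` followed by a logistic classification head) by plain gradient descent on binary cross-entropy. With `freeze_encoder=True` only the head is updated, analogous to freezing the RoBERTa layers; with `False` every parameter is updated, analogous to full fine-tuning. The model, data, and hyperparameters are hypothetical stand-ins for a real transformer, so the sketch shows the mechanism only and says nothing about ClimateBERT's reported results.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]
Dataset = List[Tuple[Vector, int]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(W: Matrix, v: Vector, b: float, x: Vector) -> Tuple[Vector, float]:
    """Encoder h = W x, then head p = sigmoid(v . h + b)."""
    h = [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]
    p = sigmoid(sum(v_i * h_i for v_i, h_i in zip(v, h)) + b)
    return h, p


def mean_loss(W: Matrix, v: Vector, b: float, data: Dataset) -> float:
    """Mean binary cross-entropy over the dataset."""
    eps = 1e-12
    total = 0.0
    for x, y in data:
        _, p = forward(W, v, b, x)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)


def fine_tune(W: Matrix, v: Vector, b: float, data: Dataset,
              freeze_encoder: bool, lr: float = 0.1,
              epochs: int = 200) -> Tuple[Matrix, Vector, float]:
    """Per-example gradient descent; returns updated copies of the parameters."""
    W = [row[:] for row in W]  # copy so the "pre-trained" weights are preserved
    v = v[:]
    for _ in range(epochs):
        for x, y in data:
            h, p = forward(W, v, b, x)
            g = p - y  # dLoss/dlogit for sigmoid + cross-entropy
            if not freeze_encoder:
                # dLoss/dW[i][j] = g * v[i] * x[j]; uses v before this step's update
                for i, row in enumerate(W):
                    for j in range(len(row)):
                        row[j] -= lr * g * v[i] * x[j]
            for i in range(len(v)):
                v[i] -= lr * g * h[i]  # head weights are always trained
            b -= lr * g
    return W, v, b


if __name__ == "__main__":
    pretrained_W = [[0.5, 0.1], [0.2, 0.4]]  # stands in for pre-trained encoder weights
    head_v, head_b = [0.0, 0.0], 0.0          # freshly initialized classification head
    data = [([1.0, 0.0], 1), ([0.0, 1.0], 0), ([1.0, 0.2], 1), ([0.1, 1.0], 0)]
    for frozen in (True, False):
        W, v, b = fine_tune(pretrained_W, head_v, head_b, data, freeze_encoder=frozen)
        print(f"freeze_encoder={frozen}: loss={mean_loss(W, v, b, data):.4f}")
```

In a real transformer the same choice is made by excluding encoder parameters from the optimizer; full fine-tuning costs more compute per step but lets the representations themselves adapt to the task.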

Compared with standalone deep learning architectures trained from scratch, transfer learning offers several distinct advantages. First, it substantially reduces the computational resources and time required for training, as the models have already learned foundational linguistic representations. Second, it mitigates the common challenge of data scarcity in specialized domains like ESG, where obtaining large volumes of meticulously annotated greenwashing examples can be prohibitively expensive and time-consuming. By leveraging knowledge from broader datasets, transfer learning models can achieve robust performance even with relatively small task-specific datasets . Because these models are built on transformer architectures, some insight into their behavior is available through attention weights and feature-importance analyses, although full transparency remains an ongoing research challenge.

The data requirements for pre-trained models involve access to large, diverse corpora for the initial pre-training phase, followed by smaller, task-specific annotated datasets for fine-tuning. The quality and relevance of the pre-training data are critical, as they dictate the breadth and depth of knowledge the model acquires. For instance, ClimateBERT's efficacy is directly linked to its training on extensive climate-related texts . In contrast, training deep learning models from scratch for greenwashing detection would necessitate collecting and annotating enormous volumes of highly relevant text data, a task that is often impractical.

The success of transfer learning with pre-trained models paved the way for the emergence and widespread application of Large Language Models (LLMs) across NLP tasks, including greenwashing detection. LLMs exemplify the power of large-scale pre-training on massive text datasets, showing that models can learn rich, transferable representations of language. The ability to reuse such pre-trained knowledge and fine-tune it for complex tasks like identifying subtle deception in corporate disclosures marks a paradigm shift: from developing task-specific models in isolation to investing vast computational resources and data in general-purpose pre-training first. The continued development of domain-specific models like ClimateBERT, together with the fine-tuning of general LLMs on ESG data, reflects a growing recognition that pre-training and transfer learning are indispensable for addressing the complexities of sustainability communication and combating greenwashing.

3.1.4 Specialized and Large Language Models (LLMs) and Their Applications

The application of Large Language Models (LLMs) and specialized models has become increasingly prominent in the detection of ESG activities and greenwashing, offering advanced capabilities for contextual understanding and nuanced analysis of financial and corporate disclosures. The landscape of these models encompasses both generalist LLMs and domain-specific adaptations, each with distinct advantages and methodological considerations.

A key distinction in applying LLMs to greenwashing detection lies between generalist models and those specialized through pre-training or fine-tuning on domain-specific datasets. General LLMs such as Llama models (a 3B variant, Llama 2 7B, and Llama 3 8B), Gemma (2B and 7B), Mistral (7B), and GPT-4o Mini are versatile, but their direct application in zero-shot or few-shot settings to highly specialized tasks, such as identifying intricate greenwashing tactics, can be limited . In contrast, specialized models such as ClimateBERT and FinBERT-ESG-9-Categories demonstrate superior performance because they are pre-trained on extensive climate-related and financial/ESG texts, respectively . These models are not LLMs in the broader sense but rather highly effective transformer-based architectures tailored to specific domains. The development of domain-specific LLMs such as ClimateGPT, trained on climate-related data, further underscores the value of specialization: ClimateGPT outperforms its generalist counterparts on specific climate benchmarks .

The efficacy of LLMs in greenwashing detection depends strongly on the learning paradigm: zero-shot prompting versus fine-tuning. Zero-shot learning, in which a model is prompted to perform a task without explicit examples, relies entirely on the pre-trained knowledge of general LLMs. Given the intricate, context-dependent nature of greenwashing, however, fine-tuning often yields better results. For instance, fine-tuning relatively small open-source models such as Llama 7B has achieved excellent performance in identifying text related to environmental activities within ESG taxonomies, sometimes even surpassing larger proprietary models . This fine-tuning frequently uses Low-Rank Adaptation (LoRA), which keeps the pre-trained weight matrices frozen and learns a low-rank update to them, so that only a small fraction of parameters is trained while the model is adapted to tasks such as binary classification for ESG activity detection . Combining synthetically generated data with the original data is crucial for overcoming the data scarcity inherent in this specialized domain, further improving fine-tuned model performance .
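The LoRA update can be written as W' = W + (alpha / r) B A, where the pre-trained matrix W (d x k) stays frozen and only B (d x r) and A (r x k) are trained, with the rank r much smaller than d and k. The pure-Python sketch below shows the forward pass and the resulting parameter saving. The class name `LoRALinear`, the toy dimensions, and the initialization (small random A, zero B, so the layer starts out identical to the pre-trained one, as in the original LoRA formulation) are illustrative assumptions; practical work would normally rely on a library such as Hugging Face PEFT rather than hand-written matrices.

```python
import random
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(M: Matrix, x: Vector) -> Vector:
    """Matrix-vector product with plain lists."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


class LoRALinear:
    """Frozen linear map W (d x k) plus a trainable low-rank update B @ A."""

    def __init__(self, W: Matrix, r: int, alpha: float, seed: int = 0):
        self.W = W  # frozen pre-trained weights, d x k (never updated)
        d, k = len(W), len(W[0])
        rng = random.Random(seed)
        # A (r x k) starts with small random values; B (d x r) starts at zero,
        # so B @ A = 0 and the layer initially reproduces W exactly.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(k)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d)]
        self.scale = alpha / r

    def forward(self, x: Vector) -> Vector:
        base = matvec(self.W, x)                    # W x
        update = matvec(self.B, matvec(self.A, x))  # B (A x); never forms a d x k matrix
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self) -> int:
        """Only A and B are trained: r * k + d * r parameters."""
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


def full_params(d: int, k: int) -> int:
    """Parameters updated by full fine-tuning of the same matrix."""
    return d * k


if __name__ == "__main__":
    d, k, r = 768, 768, 8  # e.g. a BERT-base-sized projection with rank 8
    W = [[0.0] * k for _ in range(d)]
    layer = LoRALinear(W, r=r, alpha=16)
    print(full_params(d, k), layer.trainable_params())  # prints: 589824 12288
```

The saving grows with matrix size, which is why LoRA makes fine-tuning 7B-parameter models feasible on modest hardware.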

Prompting strategies also strongly affect LLM performance, especially in zero-shot and few-shot settings. Although prompt design is recognized as important for LLM applications, detailed prompting methodologies are often proprietary or remain the subject of ongoing research . A complementary direction is the unified NLP tool exemplified by REPORTPARSE, which lets users select among various NLP methods, some of which may be LLM-based, highlighting the potential of combining diverse semantic analysis models for comprehensive greenwashing detection .
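To make the zero-shot versus few-shot distinction concrete, the sketch below assembles a classification prompt from an instruction, optional labeled examples, and the passage to classify. The instruction wording, the "specific"/"vague" label set, and the example passages are hypothetical; since effective prompt design remains an open question, a template like this would need empirical tuning against annotated data.

```python
from typing import List, Optional, Tuple

# Hypothetical instruction and label set for illustration only.
INSTRUCTION = (
    "Classify the passage from a corporate sustainability report as "
    "'specific' (verifiable, quantified commitment) or 'vague' "
    "(broad environmental claim without supporting evidence). "
    "Answer with one word."
)


def build_prompt(passage: str,
                 examples: Optional[List[Tuple[str, str]]] = None) -> str:
    """Zero-shot prompt when `examples` is empty or None; few-shot otherwise."""
    parts = [INSTRUCTION, ""]
    for text, label in examples or []:
        parts.append(f"Passage: {text}")
        parts.append(f"Label: {label}")
        parts.append("")
    parts.append(f"Passage: {passage}")
    parts.append("Label:")  # the model is expected to complete the label
    return "\n".join(parts)


if __name__ == "__main__":
    shots = [
        ("We will cut Scope 1 emissions 40% against a 2019 baseline by 2030.", "specific"),
        ("We are deeply committed to a greener planet.", "vague"),
    ]
    print(build_prompt("Our products are eco-friendly and planet-positive.", shots))
```

Keeping the template in code makes it easy to hold prompts fixed across experiments, which matters given how sensitive LLM outputs are to phrasing.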

LLMs offer significant strengths in understanding complex contextual information within corporate disclosures. Their advanced natural language processing capabilities, including sentiment analysis, Named Entity Recognition (NER), topic modeling, and relationship extraction, enable them to analyze extensive data, uncover hidden patterns, and identify discrepancies in corporate claims . For example, sentiment analysis can detect overly positive language, while topic modeling can reveal broad, unsubstantiated claims versus actionable commitments. NER helps link claims to measurable outcomes, and relationship extraction identifies mismatches between stated policies and actual practices . This sophisticated contextual understanding represents a significant advancement over previous deep learning approaches that might have relied on more rigid feature engineering or less adaptable architectures.
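The idea that entity and quantity extraction can link claims to measurable outcomes can be illustrated with a deliberately simple stand-in. The sketch below swaps the LLM for a lexicon-and-regular-expression heuristic: a sentence that uses broad environmental marketing vocabulary but contains no percentage, quantity with a common unit, or year is flagged as a potentially unsubstantiated claim. The term list and patterns are hypothetical and far cruder than the model-based methods described above; they only show the claim-versus-evidence logic.

```python
import re
from typing import List

# Hypothetical lexicon of broad environmental marketing terms.
VAGUE_TERMS = ("eco-friendly", "green", "sustainable", "carbon neutral",
               "planet-positive", "environmentally responsible")

# Word boundaries stop e.g. "greenhouse" from matching "green".
CLAIM = re.compile(r"\b(" + "|".join(map(re.escape, VAGUE_TERMS)) + r")\b",
                   re.IGNORECASE)

# Evidence patterns: percentages, quantities with common units, or years.
EVIDENCE = re.compile(
    r"\d+(\.\d+)?\s*%"                                          # 40%, 12.5 %
    r"|\b\d+(\.\d+)?\s*(t|tonnes?|kt|mt|kwh|mwh|gwh|tco2e?)\b"  # 3.2 Mt, 500 MWh
    r"|\b(19|20)\d{2}\b",                                        # 2019, 2030
    re.IGNORECASE,
)


def flag_unsubstantiated(sentences: List[str]) -> List[str]:
    """Return sentences that make a broad green claim with no measurable evidence."""
    return [s for s in sentences if CLAIM.search(s) and not EVIDENCE.search(s)]


if __name__ == "__main__":
    report = [
        "Our packaging is eco-friendly and planet-positive.",
        "We cut Scope 1 emissions by 40% versus 2019.",
        "Our sustainable sourcing program will cover 100% of suppliers by 2030.",
    ]
    print(flag_unsubstantiated(report))
```

A model-based system would replace both patterns with learned extractors, but the output has the same shape: claims paired with whatever evidence, if any, supports them.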

Despite their strengths, LLMs and specialized models are not without limitations. Challenges include the potential for hallucination, where models generate factually incorrect or nonsensical information, and biases inherited from their training data. These issues can compromise the reliability of detection in sensitive areas like greenwashing, where precision and factual accuracy are paramount. Furthermore, prompt sensitivity—where minor changes in input phrasing can lead to vastly different outputs—poses a challenge for consistent and reliable application. The need for continuous updates and retraining of AI models to accurately detect evolving greenwashing practices is also a critical consideration, as corporate deception strategies are dynamic . Moreover, while AI and NLP tools can serve as "perfect assistants" for ESG raters, human oversight remains essential. AI-driven critical decisions must be thoroughly investigated and combined with human expertise to mitigate the risks of subjectivity, limited context, and the absence of verifiable evidence .
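One practical response to prompt sensitivity and hallucination risk, consistent with the call for human oversight, is to query a model with several paraphrased prompts and route any passage on which the answers disagree to a human analyst. The sketch below shows only the aggregation and routing logic. The `classify` callable stands in for whatever model call is actually used, and the function name `route_for_review`, the agreement threshold, and the fake classifier in the usage example are hypothetical.

```python
from collections import Counter
from typing import Callable, Dict, List


def route_for_review(
    passage: str,
    prompt_variants: List[str],
    classify: Callable[[str, str], str],
    min_agreement: float = 1.0,
) -> Dict[str, object]:
    """Run one passage through several prompt phrasings and check agreement.

    Returns the majority label, the share of variants agreeing with it, and
    whether the case should be escalated to a human reviewer.
    """
    labels = [classify(template, passage) for template in prompt_variants]
    majority, count = Counter(labels).most_common(1)[0]
    agreement = count / len(labels)
    return {
        "label": majority,
        "agreement": agreement,
        "needs_human_review": agreement < min_agreement,
    }


if __name__ == "__main__":
    variants = [
        "Is this environmental claim vague or specific? {p}",
        "Does the following passage give verifiable evidence? {p}",
        "Label the environmental claim as vague or specific: {p}",
    ]

    def fake_classify(template: str, passage: str) -> str:
        # Hypothetical stand-in for an LLM call whose answer depends on wording;
        # a real implementation would send template.format(p=passage) to a model.
        return "specific" if "evidence" in template else "vague"

    print(route_for_review("We are committed to a greener future.", variants, fake_classify))
```

Requiring unanimous agreement (`min_agreement=1.0`) is conservative and sends more cases to reviewers; lowering the threshold trades review effort against the risk of acting on unstable model outputs.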

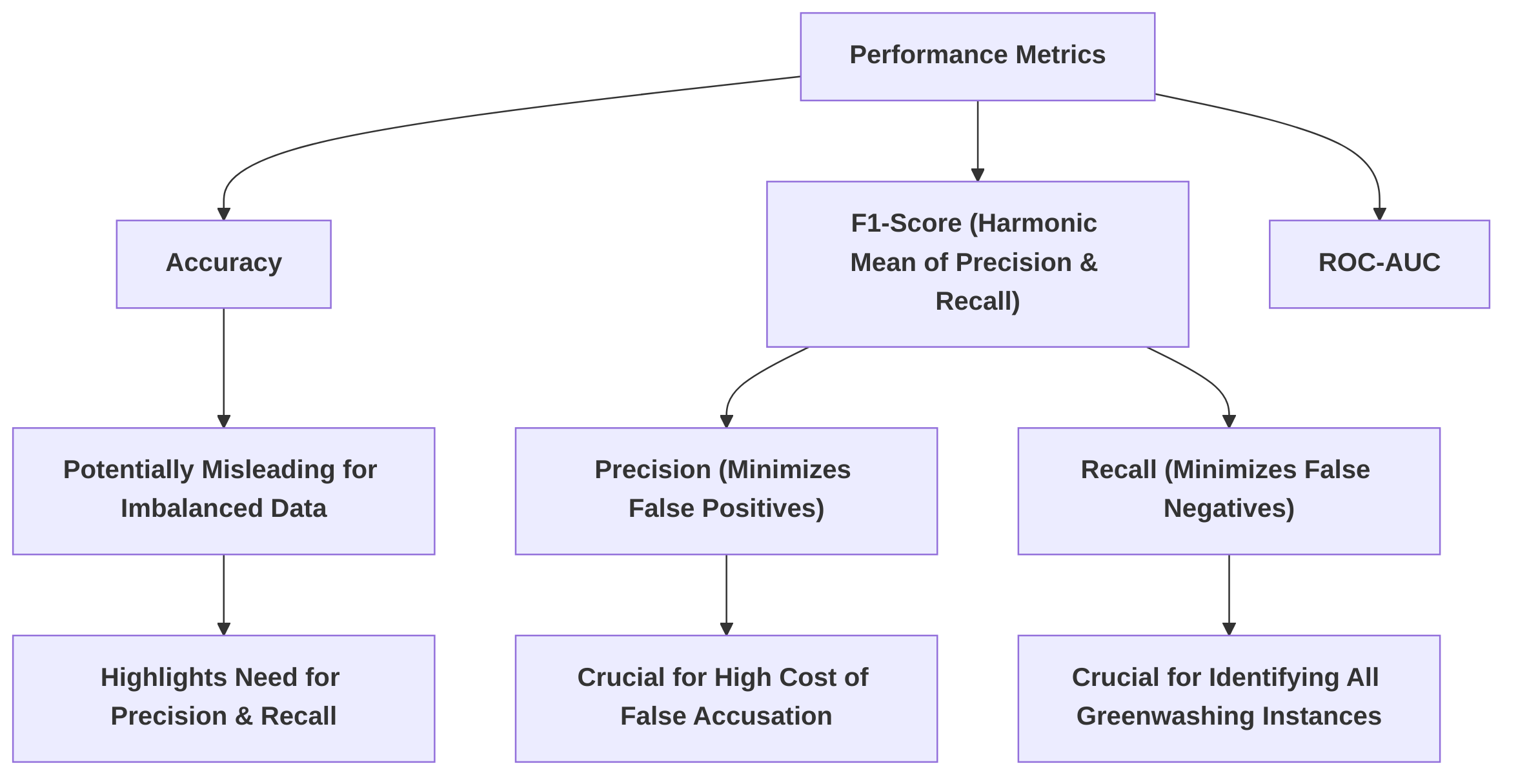

Comparing LLMs with previous deep learning approaches reveals shifts in underlying assumptions, data requirements, and interpretability. Earlier deep learning models for text analysis, such as those built on static word embeddings or simpler recurrent neural networks, typically required extensive labeled datasets for supervised training and struggled to capture long-range dependencies and nuanced contextual meaning. Even broad model families such as text and vision transformers, when applied without large-scale pre-training, required substantial domain-specific data and careful architectural design . LLMs, by contrast, are pre-trained on vast amounts of diverse text, giving them a generalized understanding of language that can be adapted to specific tasks with less labeled data through fine-tuning, or even used directly via zero-shot learning. This pre-training lets LLMs capture semantic relationships and nuances that were challenging for prior models. The enhanced understanding brings new challenges, however: LLMs often act as "black boxes," making their decision-making less interpretable than that of simpler models; their performance is highly sensitive to prompting strategies; and their tendency to generate factually incorrect (hallucinated) or biased content necessitates robust validation frameworks. The transition to LLMs has thus shifted the paradigm from training task-specific models from scratch to combining pre-training on massive datasets with subsequent fine-tuning or careful prompting for domain-specific applications, which demands greater attention to model robustness and ethical implications.

3.1.5 Multimodal Approaches

While many existing approaches to greenwashing detection primarily rely on textual data, a more comprehensive assessment can be achieved through multimodal deep learning, which combines information from various sources, particularly text and visual content . The integration of text and vision transformers allows for the mapping of diverse textual and visual sources of information onto sustainability axes, enabling a more holistic analysis of corporate claims . This approach is advantageous because greenwashing often manifests not only through deceptive language but also through misleading imagery, logos, and campaign designs that evoke a false sense of environmental responsibility. By simultaneously processing both modalities, multimodal models can capture subtle inconsistencies and contradictions that might be missed by purely text-based methods. For instance, a company's report might use positive environmental language in its text while featuring images of highly polluting industrial operations, a discrepancy that a multimodal model could identify.
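The idea of mapping textual and visual content onto a shared sustainability axis can be sketched as follows. The code assumes text and image embeddings that already live in a joint space (as CLIP-style text and vision encoders produce), defines a hypothetical axis as the difference between embeddings of an "environmentally responsible" and an "environmentally harmful" reference description, and scores each modality by cosine similarity to that axis. A large gap between the text score and the image score flags a possible inconsistency. The vectors, axis construction, and threshold are toy assumptions for illustration, not the method of any cited study.

```python
import math
from typing import List

Vector = List[float]


def normalize(v: Vector) -> Vector:
    """Scale a (non-zero) vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def axis_score(embedding: Vector, axis: Vector) -> float:
    """Cosine similarity between an embedding and the sustainability axis."""
    e, a = normalize(embedding), normalize(axis)
    return sum(x * y for x, y in zip(e, a))


def modality_gap(text_emb: Vector, image_emb: Vector, axis: Vector) -> float:
    """Positive when the text sits further toward the 'green' end than the image."""
    return axis_score(text_emb, axis) - axis_score(image_emb, axis)


def flag_inconsistency(text_emb: Vector, image_emb: Vector, axis: Vector,
                       threshold: float = 0.5) -> bool:
    """Flag a text/image pair whose axis scores diverge in either direction."""
    return abs(modality_gap(text_emb, image_emb, axis)) > threshold


if __name__ == "__main__":
    # Toy 3-d "joint space"; real CLIP-style embeddings have hundreds of dimensions.
    green_ref = [1.0, 0.0, 0.2]     # embedding of an "environmentally responsible" description
    harmful_ref = [-1.0, 0.1, 0.2]  # embedding of an "environmentally harmful" description
    axis = [g - h for g, h in zip(green_ref, harmful_ref)]
    report_text = [0.9, 0.1, 0.3]    # upbeat environmental language
    report_image = [-0.8, 0.2, 0.3]  # image of heavily polluting industrial operations
    print(modality_gap(report_text, report_image, axis))
    print(flag_inconsistency(report_text, report_image, axis))  # True
```

Using the absolute gap catches mismatches in either direction, including the more familiar pattern of pristine nature imagery paired with text about carbon-intensive operations.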

Multimodal deep learning for greenwashing detection offers several advantages over purely text-based methods. Primarily, it provides a more comprehensive view by drawing on complementary information from different data types. Textual data supply explicit claims, statistics, and narrative descriptions, while visual data convey implicit messages, brand imagery, and contextual cues that can reinforce or contradict the text. For example, sophisticated NLP techniques can analyze the language of annual reports or press releases , but if those documents are accompanied by manipulated or misleading images, textual analysis alone is insufficient. The synergy between modalities can reveal a more complete picture of a company's environmental performance and communication strategy, helping to uncover more sophisticated forms of greenwashing.